What Is a Data Model?

Written by Sandra Suszterova

Table of Contents

- What Is a Data Model?

- Why Create a Data Model?

- What Are the Key Data Model Components?

- Types of Data Models

- What Is Data Modeling?

- How To Build a Logical Data Model

- How To Build a Physical Data Model

- Examples of Data Modeling

- Big Data Modeling

- Data Model Use Cases

- Common Mistakes in Creating Data Models

- Data Modeling Best Practices

- Ready To Get Started With Data Models?

What Is a Data Model?

Before we explore data models in detail, we need to be clear on what data is. Essentially, data is made up of facts, figures, and statistics that are collected, stored, analyzed, and used for making future decisions. In today's digital world, we are constantly generating huge amounts of this data – and if it is to have any function it needs to be processed in an effective way. This is where a data model comes in.

A data model is an abstract representation of the data structures used to organize and manage data in a database or information system. It defines the relationships between data elements (which represent real-world objects) and how they are organized, stored, and retrieved. It can be helpful to think of the data model as a building plan upon which the entire system will be built.

Data models are used to create databases and data warehouses, manage data for analytical processing, and implement applications that enable users to access information in meaningful ways.

Why Create a Data Model?

A data model is crucial for building business intelligence (BI) solutions that empower users to make data-driven decisions and identify new business opportunities. Data models are the pillars of a system and database; they not only define how user data is stored but also help ensure this data is accurate and consistent by showing, in visual form, how different data are related. This allows users to easily understand how the data model objects are related to each other.

During the creation of a data model, data structures and standards are defined. Using the same term for each object makes data mapping easier and keeps it consistent throughout the organization. This improves collaboration between business and technical teams, facilitating better communication and understanding.

A data model helps ensure that applications are high quality and efficient for end users, reducing the risk of crashes and making maintenance easier. Partially testing data models during development allows bugs and issues to be detected early, which lowers costs and minimizes the risk of end users experiencing downtime or outages.

What Are the Key Data Model Components?

A data model contains some key components; when creating a data model you will come across the following terms:

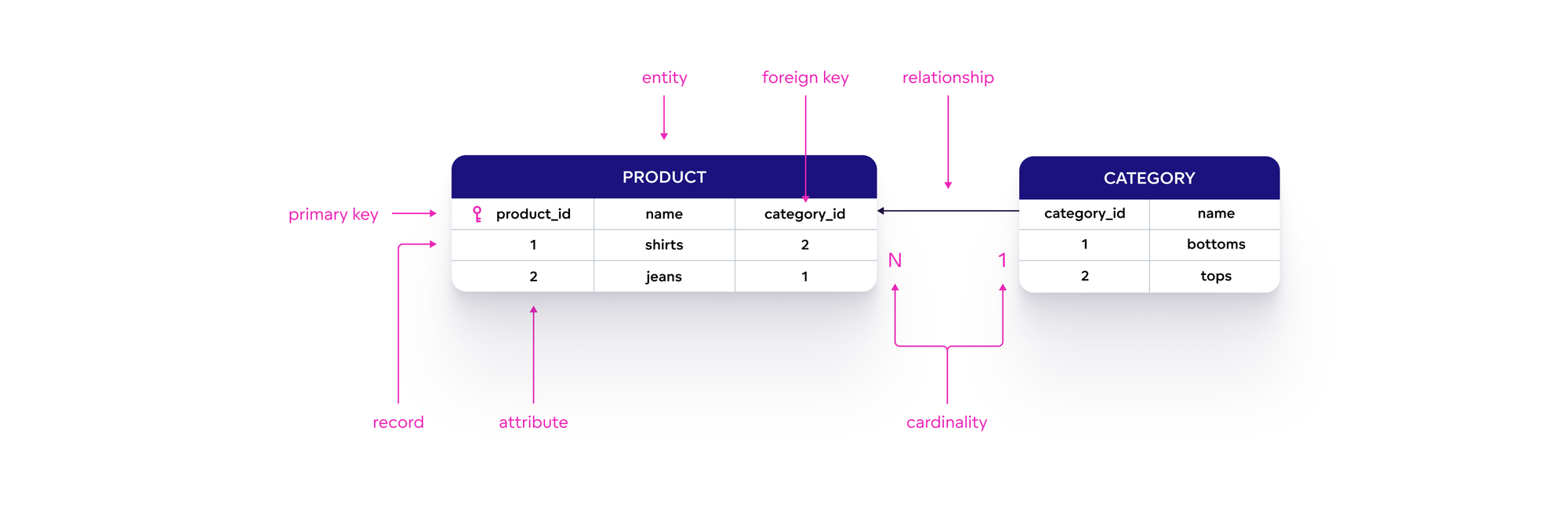

- Entities are the objects we want to represent in our data model and are usually represented by a table. Examples of entities include customers, orders, products, or categories.

- Attributes appear as columns in specific tables. Examples include product identifier and product name (for the Product entity) and category identifier and category name (for the Category entity).

- Records are shown in rows in each table. A good example of a record is the data of a specific product (identifier, product name, category) stored in a table called Products.

- Relationships define the associations between entities. This may be 1:1, 1:N or M:N. For example, one product could be related to another by a specific product category.

- Cardinality refers to the numerical relationship (1:1, 1:N or M:N) between two entities in a database. For example, the relationship between a category and its products is 1:N: one category can contain multiple products, but each product belongs to exactly one category.

- Primary key is a unique identifier of each record in a given table. It refers to a column or a combination of columns that has a unique value for each row. For the Product table the primary key is product_id, and for the Category table it is category_id.

- Foreign key is a column or a group of columns that points towards a primary key in a different table. For example, a category_id column in the Product table references category_id, the primary key of the Category table. It creates an association between two tables (Product and Category) that enables information from one table (Product) to be linked and related to information from another (Category).
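To make the components above concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table layout follows the Product and Category example from the list; the sample rows and the lowercase table names are assumptions made for illustration.

```python
import sqlite3

# In-memory database, so the sketch leaves nothing behind.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when asked

# Entities become tables, attributes become columns.
conn.executescript("""
CREATE TABLE category (
    category_id   INTEGER PRIMARY KEY,  -- primary key
    category_name TEXT NOT NULL
);
CREATE TABLE product (
    product_id    INTEGER PRIMARY KEY,  -- primary key
    product_name  TEXT NOT NULL,
    category_id   INTEGER NOT NULL REFERENCES category(category_id)  -- foreign key
);
""")

# Records are rows (illustrative sample data).
conn.executemany("INSERT INTO category VALUES (?, ?)", [(1, "Books"), (2, "Games")])
conn.executemany(
    "INSERT INTO product VALUES (?, ?, ?)",
    [(10, "Atlas", 1), (11, "Novel", 1), (12, "Chess set", 2)],
)

# 1:N cardinality: one category relates to many products.
rows = conn.execute("""
    SELECT c.category_name, COUNT(*)
    FROM product AS p
    JOIN category AS c ON c.category_id = p.category_id
    GROUP BY c.category_name
    ORDER BY c.category_name
""").fetchall()
print(rows)

# The foreign key rejects a product that points at a missing category.
try:
    conn.execute("INSERT INTO product VALUES (13, 'Orphan', 99)")
    fk_enforced = False
except sqlite3.IntegrityError:
    fk_enforced = True
print(fk_enforced)
```

The join works only because product.category_id carries the same values as category.category_id, which is exactly the association a foreign key is meant to guarantee.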

Once the individual components of a data model have been defined, the next step is to assemble them into a comprehensive data model. As you’ll learn below, various types of data models are typically created before the final model.

Types of Data Models

In data modeling, you will likely come across three main types of data models: conceptual, logical, and physical. Each has a different purpose and is used in a different stage of data modeling:

- A conceptual data model is usually created as a blueprint for a future database system. Developed by gathering input from business stakeholders, it provides a high-level overview of the entire system and defines its components and rules in business terms.

- A logical data model provides more detail about the entire system – its entities, attributes, and relationships. It is used to develop a database schema through the collaboration of data architects and business analysts.

- A physical data model is the most detailed representation of the system and contains all of the physical structures for the technical implementation of the database. This model is created by database developers and includes information about data types, indexing, and access methods.

Logical and physical data models are the most widely used data model types. What is the main difference between logical and physical models? In short, the logical data model is an abstraction of the physical data model: it reflects the business point of view and business demands of the entire system. The physical data model, on the other hand, captures all of the implemented tables and views in the current database and includes data types and cardinality. It is also filled with data, which users can view with a SELECT query or manage with INSERT, UPDATE, or DELETE statements.
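As a small illustration of working with a physical model, the sketch below runs the four statements mentioned above against an in-memory SQLite database. The product table, its price column, and the sample values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# A physical table with concrete data types (hypothetical example).
conn.execute("""
    CREATE TABLE product (
        product_id   INTEGER PRIMARY KEY,
        product_name TEXT NOT NULL,
        price        REAL NOT NULL
    )
""")

# INSERT adds records.
conn.execute("INSERT INTO product VALUES (1, 'Atlas', 25.0)")
conn.execute("INSERT INTO product VALUES (2, 'Novel', 12.5)")

# UPDATE changes an existing record.
conn.execute("UPDATE product SET price = 20.0 WHERE product_id = 1")

# DELETE removes a record.
conn.execute("DELETE FROM product WHERE product_id = 2")

# SELECT reads what is left.
remaining = conn.execute(
    "SELECT product_id, product_name, price FROM product"
).fetchall()
print(remaining)
```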

Sometimes a logical data model is referred to as a semantic data model. A semantic data model focuses on the content and context of the data. All components of the data model are translated into business-friendly terms that facilitate the overall understanding of the data model.

Now that we understand the different types of data models, we’re almost ready to discuss how to build them. First, though, we need to be sure we understand what data modeling actually means.

What Is Data Modeling?

Data modeling is the process of creating data models to organize and structure data in a way that makes it easier to understand, manage, and use. It can be used to develop a blueprint for a database, information system, or software application.

In data modeling, three types of data models are created: conceptual, logical, and physical. These data models are created in sequence, with each model building upon the previous one.

In data modeling, the first step is to create a conceptual data model. Although there are no set rules to follow, it is a good idea to identify the entities that will be included in the model and define their relationships.

Next, we can create the logical data model. This model helps to identify and gather all the requirements for the entire system, as well as understand how data flows within business processes.

Finally, the logical data model is transformed into a physical data model that specifies the technical implementation details. The physical data model can then be implemented in a database management system, ready to store data and support queries.

How To Build a Logical Data Model

Building a logical data model involves taking the high-level concepts and relationships from the conceptual data model and refining these to create a more detailed representation of the data.

The steps required to build a logical data model include:

- Identifying all attributes for each entity.

- Choosing a primary key for each entity.

- Finding the relationships between said entities.

- Resolving many-to-many relationships.

- Normalizing the data model.
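One of the steps above, resolving many-to-many relationships, is commonly handled by introducing a junction table. The sketch below assumes a hypothetical orders/product pair: an order can contain many products and a product can appear in many orders, so an order_line table sits between them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders  (order_id   INTEGER PRIMARY KEY, order_date   TEXT NOT NULL);
CREATE TABLE product (product_id INTEGER PRIMARY KEY, product_name TEXT NOT NULL);

-- Junction table: one row per (order, product) pair, so the M:N
-- relationship becomes two 1:N relationships.
CREATE TABLE order_line (
    order_id   INTEGER NOT NULL REFERENCES orders(order_id),
    product_id INTEGER NOT NULL REFERENCES product(product_id),
    quantity   INTEGER NOT NULL,
    PRIMARY KEY (order_id, product_id)
);
""")

# Illustrative sample data.
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "2024-01-05"), (2, "2024-01-06")])
conn.executemany("INSERT INTO product VALUES (?, ?)", [(10, "Atlas"), (11, "Novel")])
conn.executemany("INSERT INTO order_line VALUES (?, ?, ?)", [(1, 10, 1), (1, 11, 2), (2, 10, 3)])

# Products in order 1 (one order, many products).
products_in_order_1 = [
    row[0]
    for row in conn.execute("""
        SELECT p.product_name
        FROM order_line AS ol
        JOIN product AS p ON p.product_id = ol.product_id
        WHERE ol.order_id = 1
        ORDER BY p.product_name
    """)
]

# Orders containing product 10 (one product, many orders).
orders_with_atlas = [
    row[0]
    for row in conn.execute(
        "SELECT order_id FROM order_line WHERE product_id = 10 ORDER BY order_id"
    )
]
print(products_in_order_1, orders_with_atlas)
```

The composite primary key on order_line is one possible design choice; it also prevents the same product from being listed twice in one order.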

There are several ways to build a logical data model, depending on which tool you use. The three most common are:

- Drag-and-drop: Many analytics platforms have a user-friendly interface, where you can drag-and-drop to create tables and add attributes. You can also set primary keys and create relationships between the tables.

- API: An Application Programming Interface (API) is used to programmatically create, modify, or query the data model. This can help you to automate the data modeling process or integrate it with other software tools.

- Python SDK: Use a Python SDK to build a logical data model and you may have access to libraries and functions that allow you to create tables, define attributes, set primary keys, and establish relationships between tables.

The GoodData analytics platform supports the building of a logical data model using any of the three methods above. However, it also allows you to generate a logical data model automatically by connecting to a specific data source. The platform then identifies all the components of the data model, enabling users to select and add the tables they need. This can save time and effort in the data modeling process, especially when it comes to large and complex data sources.

What Is Logical Normalization?

Logical normalization is the process of organizing the data in a logical data model to minimize redundancy and improve data consistency. Normalization involves breaking down entities into smaller, more atomic components, and capturing them in separate tables.

The normalization process can help to:

- Eliminate data duplication and inconsistency.

- Ensure attributes belong to the correct tables.

- Support easy maintenance and querying of the data model.

- Optimize data structure stability.

- Improve flexibility in the current data model.

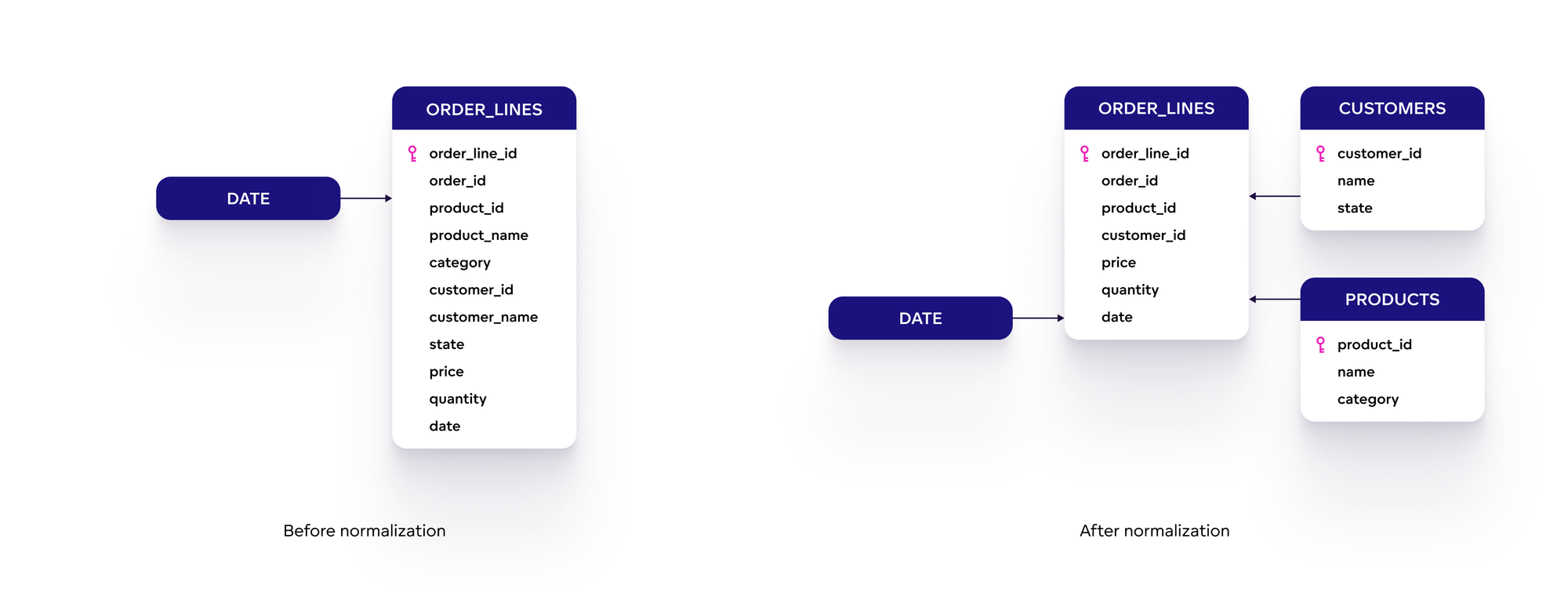

The diagram below shows a data model before and after normalization.

Notice that before normalization the customer data and product information are also stored in the Order Lines table, whereas in the normalized data model, the customer data is in the Customer table and product data is stored in the Product table.
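The same idea can be sketched in plain Python. The flat rows below are invented sample data that mimic an Order Lines table carrying customer and product details on every row; the normalize function is a hypothetical helper that splits them into separate customer, product, and order line structures.

```python
# Denormalized order lines: customer and product details repeat on every row.
order_lines_flat = [
    {"order_id": 1, "customer_id": 7, "customer_name": "Ada",
     "product_id": 10, "product_name": "Atlas", "quantity": 1},
    {"order_id": 1, "customer_id": 7, "customer_name": "Ada",
     "product_id": 11, "product_name": "Novel", "quantity": 2},
    {"order_id": 2, "customer_id": 8, "customer_name": "Lin",
     "product_id": 10, "product_name": "Atlas", "quantity": 3},
]


def normalize(rows):
    """Split flat rows into customers, products, and slim order lines."""
    customers = {}    # customer_id -> customer attributes, stored once
    products = {}     # product_id -> product attributes, stored once
    order_lines = []  # only keys and the quantity remain here
    for row in rows:
        customers[row["customer_id"]] = {"customer_name": row["customer_name"]}
        products[row["product_id"]] = {"product_name": row["product_name"]}
        order_lines.append({
            "order_id": row["order_id"],
            "customer_id": row["customer_id"],
            "product_id": row["product_id"],
            "quantity": row["quantity"],
        })
    return customers, products, order_lines


customers, products, order_lines = normalize(order_lines_flat)
print(customers)
print(products)
print(order_lines[0])
```

After the split, a customer's name lives in exactly one place, so renaming a customer means changing one entry instead of every order line. A fuller normalization would likely also move customer_id into a separate orders structure, since it depends on the order rather than on the individual line.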

Logical Data Models and the Semantic Layer

A logical data model is a part of the semantic layer, which acts as an abstraction layer between the physical data source and the end user.

Why is the semantic layer so important? It provides a simplified and user-friendly view of the data, making it easier for non-technical users to access and analyze the data with self-service analytics tools. It involves mapping the physical data source, defining metrics and calculations, and establishing data governance rules to reduce the complexity of the data sources. The logical data model plays a crucial role in achieving this goal by enabling users to define and organize the necessary data elements in a way that meets their business needs.

Why not try our 30-day free trial?

Fully managed, API-first analytics platform. Get instant access — no installation or credit card required.

How To Build a Physical Data Model

Building a physical data model involves translating the logical data model into a design that can be implemented in a database management system. It focuses on converting entities into tables, attributes into columns, and relationships into foreign keys (columns in one table that reference another table's primary key), and on defining a data type for each column.

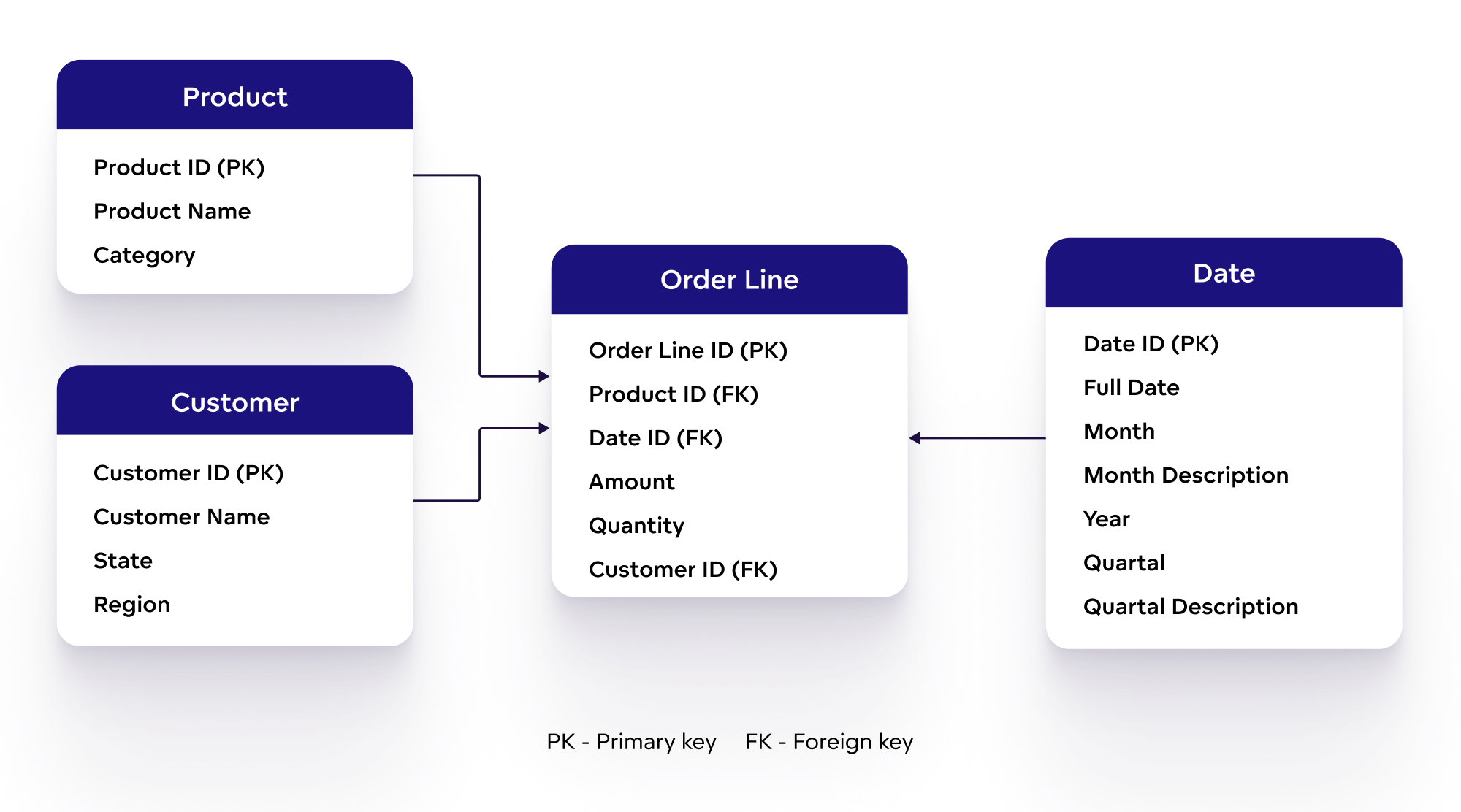

Below is an example of the final version of a logical data model, ready to be transformed into a physical data model:

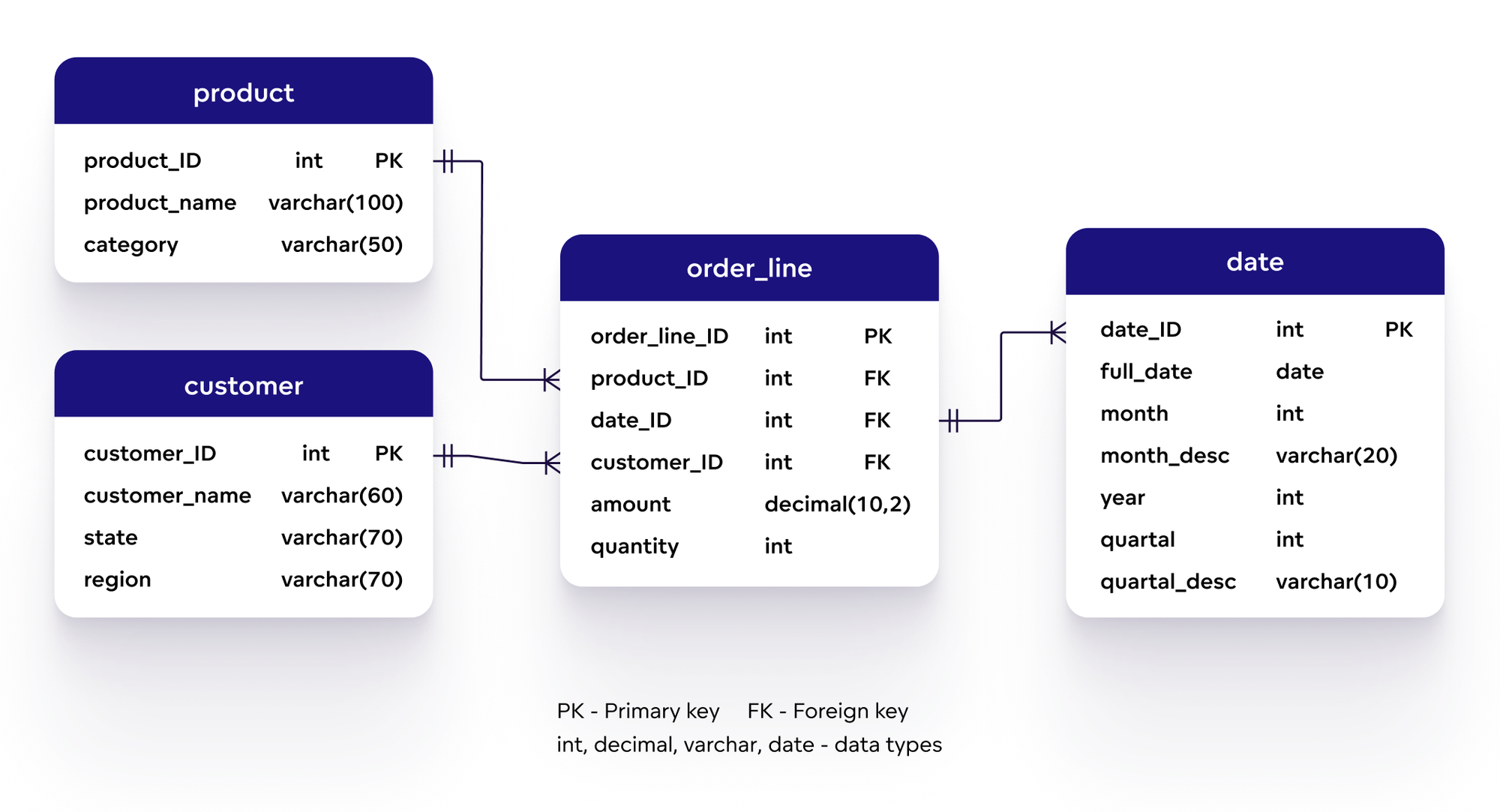

The resulting physical data model has the following shape (it already includes defined relationships and data types):

Once the structure of the physical data model has been created, it is ready to be populated with specific data.
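The translation described in this section can be sketched as a small generator that turns a logical description into CREATE TABLE statements. Everything here is an assumption made for illustration: the Attribute and Entity classes, the TYPE_MAP from logical types to SQLite types, and the to_ddl helper are hypothetical and not part of any particular tool.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical mapping from logical attribute types to SQLite column types.
TYPE_MAP = {"identifier": "INTEGER", "text": "TEXT", "number": "REAL", "date": "TEXT"}


@dataclass
class Attribute:
    name: str
    logical_type: str
    references: Optional[str] = None  # "table(column)" when the attribute is a relationship


@dataclass
class Entity:
    name: str
    primary_key: str
    attributes: List[Attribute] = field(default_factory=list)


def to_ddl(entity):
    """Entity -> table, attributes -> typed columns, relationships -> foreign keys."""
    columns = []
    for attr in entity.attributes:
        column = f"{attr.name} {TYPE_MAP[attr.logical_type]}"
        if attr.name == entity.primary_key:
            column += " PRIMARY KEY"
        if attr.references:
            column += f" REFERENCES {attr.references}"
        columns.append(column)
    return f"CREATE TABLE {entity.name} (\n    " + ",\n    ".join(columns) + "\n)"


category = Entity("category", "category_id", [
    Attribute("category_id", "identifier"),
    Attribute("category_name", "text"),
])
product = Entity("product", "product_id", [
    Attribute("product_id", "identifier"),
    Attribute("product_name", "text"),
    Attribute("category_id", "identifier", references="category(category_id)"),
])

# Apply the generated DDL to an in-memory database to confirm it is valid SQL.
conn = sqlite3.connect(":memory:")
for entity in (category, product):
    conn.execute(to_ddl(entity))

# Read back the physical column types SQLite recorded for the product table.
column_types = {row[1]: row[2] for row in conn.execute("PRAGMA table_info(product)")}
print(to_ddl(product))
print(column_types)
```

Keeping the logical description separate from the generated DDL means the same model could, in principle, be mapped to a different database by swapping the type map.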

Examples of Data Modeling

Once the data modeling process is complete, the output can be one of several different kinds of data models. The examples below show how the data modeling process and the resulting models emphasize crucial data and how this is organized:

- A hierarchical data model is based on one-to-many relationships in a treelike form. It describes a parent-child relationship, where each record has a single root, also called a ‘parent’, that corresponds to one or more child tables. This model is less commonly used today.

- An entity-relationship (ER) data model uses diagrams to represent the relationships between entities within a database.

- Relational data models can be connected to ER data models, and entities are described in the form of two-dimensional tables (rows and columns).

- A dimensional data model presents entities in three-dimensional tables. Another version of this model is the multidimensional data model, where each table consists of more than three dimensions.

Relational and dimensional data models are among the most used.

A relational data model is often used to record current data, such as clients of a given bank, or a list of patients booked for a medical visit online.

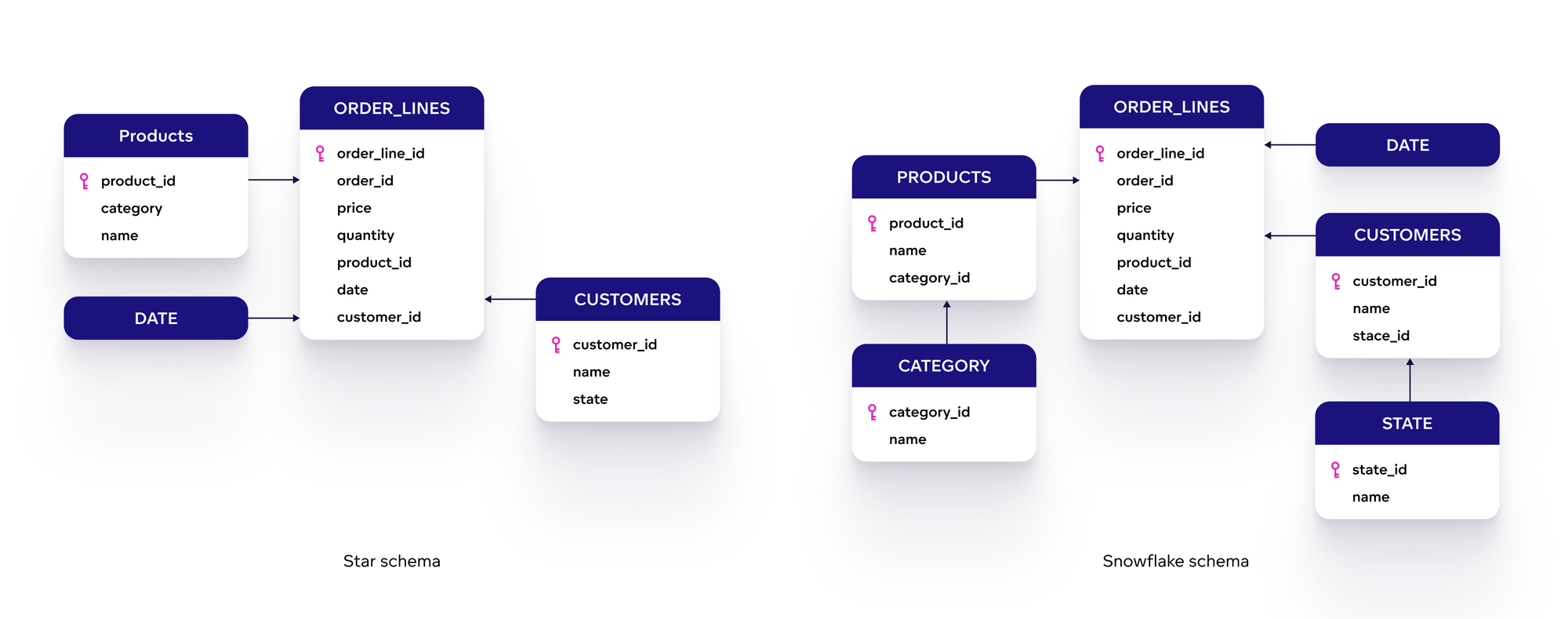

A dimensional data model stores historical data, enabling users to analyze it and access meaningful information for better decision-making. The dimensional data model contains fact tables to store measures (number of sales, total price) and dimension tables to describe facts in more detail (customer location, product category, order date). These can be organized into a star or snowflake schema, depending on how the dimension tables are linked to the fact tables.

In a star schema, the fact table is at the center, and the dimension tables are directly connected to it. In a snowflake schema, the dimension tables are normalized, and some of the attributes are stored in separate tables, creating a more complex structure.
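A minimal star schema might look like the sketch below, again using sqlite3. The table names (fact_sales, dim_product, dim_customer) and the sample rows are assumptions; the order date is kept as a plain column on the fact table rather than a separate date dimension to keep the example short.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables describe the facts.
CREATE TABLE dim_product  (product_id  INTEGER PRIMARY KEY, product_name TEXT, product_category TEXT);
CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, customer_location TEXT);

-- The fact table sits at the center and references each dimension directly.
CREATE TABLE fact_sales (
    sale_id     INTEGER PRIMARY KEY,
    product_id  INTEGER REFERENCES dim_product(product_id),
    customer_id INTEGER REFERENCES dim_customer(customer_id),
    order_date  TEXT,
    quantity    INTEGER,  -- measure
    total_price REAL      -- measure
);
""")

# Illustrative sample data.
conn.executemany(
    "INSERT INTO dim_product VALUES (?, ?, ?)",
    [(10, "Atlas", "Books"), (11, "Novel", "Books"), (12, "Chess set", "Games")],
)
conn.executemany("INSERT INTO dim_customer VALUES (?, ?)", [(7, "Prague"), (8, "Boston")])
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?, ?)",
    [
        (1, 10, 7, "2024-01-05", 1, 25.0),
        (2, 11, 7, "2024-01-05", 2, 25.0),
        (3, 12, 8, "2024-01-06", 1, 40.0),
    ],
)

# Aggregate the measures by a dimension attribute.
sales_by_category = conn.execute("""
    SELECT p.product_category, SUM(f.quantity), SUM(f.total_price)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    GROUP BY p.product_category
    ORDER BY p.product_category
""").fetchall()
print(sales_by_category)
```

In a snowflake variant, product_category would move out of dim_product into its own table, and the query would need one more join to reach it.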

Big Data Modeling

Big data refers to large and complex datasets that cannot be easily processed, managed, or analyzed using traditional data processing tools and methods.

Big data is currently used for various purposes, such as:

- Improving customer experience

- Optimizing business processes

- Enhancing decision-making

- Supporting scientific research

Big data modeling is the process of designing the structure and relationships for the data used in a big data project. There are four ways to ensure successful data modeling in big data projects:

- Simple and easy data connection: Connecting data to an analytics platform can be time-consuming and complex. Automated connection makes the process smoother, allowing data engineers to focus on analyzing data rather than connecting to it.

- Alignment between data-related roles: Data engineers and data analysts often face challenges in working together to create a data model that is easy for business users to understand. The aim is to simplify the process of updating data models and improve collaboration with data engineers, which ultimately leads to faster delivery of the product.

- Automating data distribution and change management: This is necessary to efficiently distribute relevant data to each user and enable them to create customized reports. The process eliminates the need to set up changes separately for each user, as changes to the user interface can be easily pushed to all users through automated distribution.

- Derive insights from data: On its own, big data does not have any benefit; it only becomes useful when we use it to create metrics and visualizations to gain information for future decision-making.

Data Model Use Cases

Data models are widely used to effectively manage and analyze data. Regardless of the size of a company or its data volume, data models are at the core of analytics, allowing a company to track its current situation and make data-driven decisions.

Data models are used in e-commerce to manage and analyze customer data, sales data, brands, and suppliers. They help to track current customer requirements, identify trends, predict demand, and optimize inventory levels.

Another data model use case is providing analytics for financial services. A financial service data model helps banks to evaluate and improve the financial health of consumers, monitor success, and provide data support for advisors and investors. It also helps to prevent fraud by using data-driven rules and decision-making.

Software companies need data models to incorporate analytics into their products. Once the data is stored in the data model, it is connected to an analytics platform and can be integrated with other applications. With embedded analytics, users can easily embed visualizations into their custom applications without the need for a separate analytics interface.

Common Mistakes in Creating Data Models

When building a data model, data architects and database designers often make the same mistakes. Here’s a quick rundown of the most common ones:

- Failing to consider the flexibility of the data model and its adaptability to the changing business environment.

- Building tables that are too large and complex to understand, which then require simplifying into separate tables.

- Choosing the wrong data model schema and being unable to store relevant data.

- Neglecting end users and their needs.

- Not tracking changes in the data over time, leading to duplications.

- Mixing data granularity when setting up primary keys for each table.

- Using poor naming conventions and forgetting to document the data dictionary.

- Creating too many (or too complex) views.

- Failing to recognize data modeling as an ongoing process.

- Poor communication between stakeholders, leading to suboptimal data design.

Data Modeling Best Practices

To achieve a well-designed and effective data model, it can be helpful to follow these best practices during the data modeling process:

- Start with a clear understanding of the business requirements. Before you begin to build the model, have a clear understanding of the requirements you are trying to fulfill. This will ensure that your data model accurately represents the needs of the business.

- Visualize the data to be modeled. Visual representations of your data are more effective than alphanumeric data tables in identifying anomalies, cleaning data, and transforming different data types into common formats for easy integration with other data sources.

- Keep it simple. Data models need to be easy to read and understand. To avoid confusing your users, use simple structures and avoid unnecessary details. Defining business questions with facts, dimensions, filters, and orders can help you to analyze data more efficiently and provide answers to specific queries.

- Only use the relevant data. When working with large datasets, only using the necessary data to answer business questions can prevent memory and speed issues.

- Verify and validate each stage of the data modeling before you proceed. Before continuing to the next step, check each action (such as selecting a primary key for a dataset) based on the business requirements. To avoid complex or unmanageable data models, also verify that each record can be uniquely identified in the dataset.

- Document your model. This can provide context and make it easier for others to understand. For example, you can include explanations of the entities and relationships, business rules, and any assumptions made during data modeling.

- Collaborate with stakeholders. To ensure that your data model aligns with the overall business strategy, it is important to collaborate with stakeholders such as business analysts and data architects.
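The validation practice in the list above, checking that each record can be uniquely identified, lends itself to a simple automated check. The duplicate_keys helper and the sample records below are hypothetical; the sketch only shows the general shape such a check could take.

```python
from collections import Counter


def duplicate_keys(records, key_columns):
    """Return the key values that occur more than once.

    An empty list means the chosen columns identify every record uniquely.
    """
    counts = Counter(
        tuple(record[column] for column in key_columns) for record in records
    )
    return [key for key, count in counts.items() if count > 1]


# Illustrative sample data with a repeated product_id.
products = [
    {"product_id": 10, "product_name": "Atlas"},
    {"product_id": 11, "product_name": "Novel"},
    {"product_id": 10, "product_name": "Atlas (2nd ed.)"},
]

# product_id alone is not unique in this dataset.
dupes = duplicate_keys(products, ["product_id"])

# The combination of both columns is unique.
composite_dupes = duplicate_keys(products, ["product_id", "product_name"])
print(dupes, composite_dupes)
```

Running a check like this before fixing a primary key makes it easier to catch a poor key choice while the model is still cheap to change.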

Ready To Get Started With Data Models?

Want to gain first-hand experience with data models? Start a free GoodData trial and create your first logical data model, or request a demo and we'll guide you through the process. Alternatively, learn more about data models with the following resources:

Deliver Efficient Data Consumption With a Semantic Model
