Flexible Caching and Storage with Flight RPC

Written by Dan Homola

This is part of a series about FlexQuery and the Longbow engine powering it.

Storing any kind of large data is tricky. So when we designed the Longbow engine for our Analytics Stack, we did so with flexible caching and storage in mind.

Longbow is our framework, based on Apache Arrow and Arrow Flight RPC, for creating modular, scalable, and extensible data services. It is essentially the heart of our Analytics Stack (FlexQuery). For a bird’s-eye view of Longbow and to understand its place within FlexQuery, see the architectural and introductory articles in this series.

Now let’s have a look at the tiered storage service for the Flight RPC in Longbow, its trade-offs and our strategies to tackle them.

If you want to learn more about the Flight RPC, I highly recommend the Arrow Flight RPC 101 article written by Lubomir Slivka.

The storage trade-offs

To set the stage for the Longbow-specific part of the article, let us briefly describe the problems we tackled and some basic motivations. When storing data, we need to be mindful of several key aspects:

- Capacity

- Performance

- Durability

- Cost

Setting them up and balancing them in cases when they go against one another is a matter of carefully defining the requirements of the particular application and can be quite situation-specific.

Cost v. Performance

The first balancing decision is how much we are willing to spend on increased performance. For example, when storing a piece of data being accessed by many users in some highly visible place in our product, we might favor better performance for a higher cost because the end-user experience is worth it. Conversely, when storing a piece of data that has not been accessed for a while, we might be ok with it being on a slower (and cheaper) storage even at the potential cost of it taking longer to be ready when accessed again. We can also be a bit smarter here and make it so that only the first access will be slower (more on that later).

Capacity v. Performance

Another balancing decision is where we draw the line for data that is too big for a given kind of storage. For example, we might want to put as many pieces of data into memory as possible: memory will likely be the best-performing storage available. At the same time, though, memory tends to be limited and expensive, especially if you work with large data sets or very many of them: you cannot fit everything into memory, and “clogging” it with one huge flight instead of using it for thousands of smaller ones would be unwise.

Durability

We also need to decide how durable the storage needs to be. Some data changes rarely but is very expensive to compute, or might even be impossible to compute again (e.g. a user-provided CSV file): such data might be a good candidate for durable storage. Other types of data can change very often and/or be relatively cheap to compute: non-durable storage might be better there. There can also be non-technical circumstances: compliance or legal requirements might force you to avoid any kind of durable storage altogether.

Isolation and multi-tenancy

Yet another aspect to consider is whether we want to isolate some data from the rest or handle it differently, especially in a multi-tenant environment. You might want to give a particular tenant a higher capacity of storage space because they are on some advanced tier of your product. Or you might want to make sure some of the data is automatically removed after a longer period of time for some tenants. The storage solution should give you mechanisms to address these requirements. It is also a monetization lever - yes, even we have to eat something. ;-)

Storage in Longbow

When designing and developing Longbow, we aimed to make it as universally usable as possible. As we have shown in the previous section, flexibility in the storage configuration is paramount to making a Longbow deployment efficient and cost-effective.

Since Longbow builds on the Flight RPC protocol (we go into much more detail in the Project Longbow article), it stores the individual pieces of data (a.k.a. flights) under flight paths. Flight paths are unique identifiers of the related flights. They can be structured to convey some semantic data by having several segments separated by a separator (in our case it is a slash).

In this context, you can think of a flight as a file on a filesystem and of a flight path as the file path to it: just like a file path, it is a slash-separated string such as cache/raw/postgres/report123.

The flight paths can be used by the Flight RPC commands to reference the particular flights. Flight RPC, however, does not impose any constraints on how exactly the actual flight data should be stored: as long as the data is made available when requested by a Flight RPC command, it does not care where it comes from. We take advantage of this fact and we make use of several types of storage to provide the data to Flight RPC.

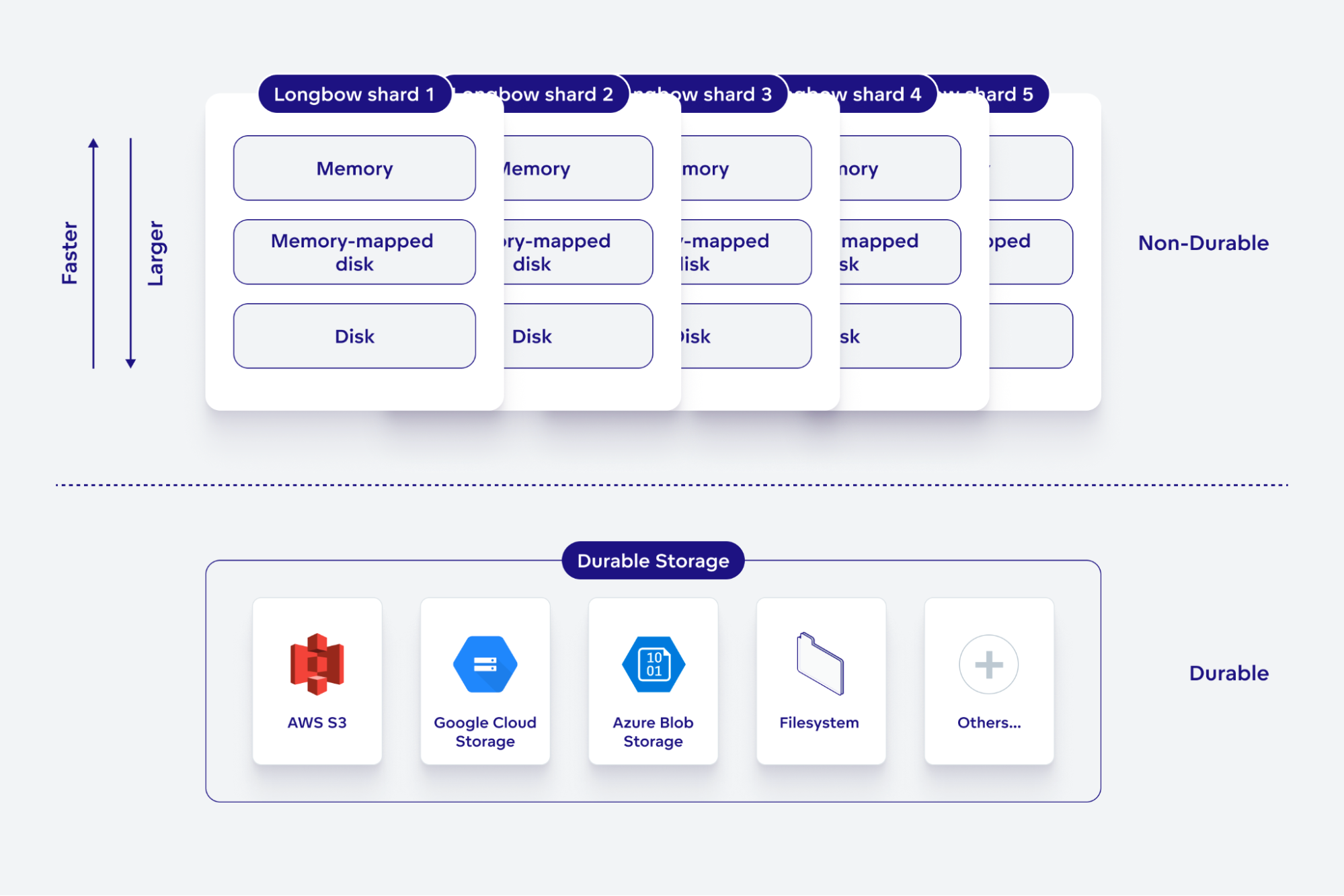

The storage types in Longbow are divided into these categories:

Shard - local, ephemeral storage

- Memory

- Memory-mapped disk

- Disk

External, persistent storage

As you can see, the lower in the storage hierarchy we go, the slower, larger, and cheaper the storage gets, and vice versa. Also, only the external durable storage survives Longbow shard restarts. To expose these layers and to make them configurable, we built an abstraction on top of them that we call Storage classes.

Storage classes

A storage class encapsulates all the storage-related configurations related to a subset of flights. It has several important properties:

- It can be applied only to some flights: you can have different storage classes for different types of flights.

- The settings in the storage class can be tiered: you can mix and match different types of storage and flights can be moved between them.

Longbow uses the storage class definitions to decide where to physically store an incoming flight and how to manage it.

Storage class settings

Storage classes have different settings that define the storage class as a whole, another set of settings that define different so-called cache tiers, and another set that can govern the limits of several storage classes. Let’s focus on the storage-class-wide ones first.

Flight path prefix

Each storage class is applied to a subset of the flight paths. More specifically, each storage class defines a flight path prefix: only flights that share that flight path prefix are affected by that particular storage class. By constructing flight paths systematically, we can handle the trade-offs described earlier differently for data with different business meanings.

Storage classes can have overlapping flight path prefixes, with one being a prefix of another: when multiple prefixes match the given flight path, the storage class with the longest matching prefix is used.

Going back to the filesystem path analogy, you can think of the path prefix as a path to the folder you want to address with the particular settings. Folders deeper in the tree can have their own configuration, overriding that of the parent folders.
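To make the longest-prefix rule concrete, here is a minimal Python sketch. The StorageClass type, its fields, and the example class names are hypothetical and only serve the illustration; we also assume plain string-prefix matching, which is a simplification rather than a description of Longbow's actual implementation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class StorageClass:
    # Hypothetical, simplified model of a storage class (not Longbow's real config).
    name: str
    flight_path_prefix: str


def resolve_storage_class(
    flight_path: str, classes: Sequence[StorageClass]
) -> Optional[StorageClass]:
    """Return the class with the longest prefix matching the path, or None."""
    matching = [c for c in classes if flight_path.startswith(c.flight_path_prefix)]
    return max(matching, key=lambda c: len(c.flight_path_prefix), default=None)


classes = [
    StorageClass("default-cache", "cache/"),
    StorageClass("raw-cache", "cache/raw/"),
    StorageClass("uploads", "uploads/"),
]

# "cache/raw/" is longer than "cache/", so the more specific class wins.
selected = resolve_storage_class("cache/raw/postgres/report123", classes)
```

In this sketch, a flight path that matches no prefix resolves to None; how a real deployment treats such flights is not covered here.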

Durability

Storage classes must specify the level of storage durability they guarantee. Currently, we support three levels of durability:

- none – the storage class does not store the flights in any durable storage. Data will be lost if the Longbow node handling that particular flight runs out of resources and needs to evict some flights to make room for new ones, crashes, or is restarted.

- weak – the storage class will acknowledge the store operation immediately (before storing the data in durable storage). Data can be lost if the Longbow node crashes during the upload to the durable storage. However, the happy-path scenarios can have better performance.

- strong – the storage class will only acknowledge the store operation after the data has been fully written to the durable storage. Data will never be lost for acknowledged stores, but the performance may suffer waiting for the durable write to complete.

These levels allow us to tune the performance and behavior of different data types. For data we cannot afford to lose (for example direct user uploads), we would choose strong durability. For data that can be easily recalculated in the rare case of a shard crash, weak might provide better performance overall.

Storage classes with any durability specified will store the incoming data in their ephemeral storage first (if it can fit) and then create a copy in the durable storage. Whenever some flight is evicted from the ephemeral storage, it can still be restored there from the durable storage if it is requested again.
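The difference between the durability levels, and the restore-on-access behavior, can be sketched with a toy in-memory model. Everything here (SketchStore, its dictionaries, flush_pending) is a hypothetical illustration: real durable writes would go to something like object storage and would run in the background rather than on an explicit call.

```python
from enum import Enum


class Durability(Enum):
    NONE = "none"
    WEAK = "weak"
    STRONG = "strong"


class SketchStore:
    """Toy model: two dicts stand in for ephemeral and durable storage."""

    def __init__(self):
        self.ephemeral = {}
        self.durable = {}
        self.pending = []  # weak-durability flights not yet copied to durable storage

    def put(self, path, data, durability):
        self.ephemeral[path] = data
        if durability is Durability.STRONG:
            # Acknowledge only after the durable write has completed.
            self.durable[path] = data
        elif durability is Durability.WEAK:
            # Acknowledge right away; the durable copy is created later.
            self.pending.append(path)
        return "ack"

    def flush_pending(self):
        # Stands in for the background upload to durable storage.
        while self.pending:
            path = self.pending.pop(0)
            if path in self.ephemeral:
                self.durable[path] = self.ephemeral[path]

    def evict(self, path):
        # Eviction only removes the ephemeral copy.
        self.ephemeral.pop(path, None)

    def get(self, path):
        if path in self.ephemeral:
            return self.ephemeral[path]
        if path in self.durable:
            # Restore the evicted flight into ephemeral storage on access.
            self.ephemeral[path] = self.durable[path]
            return self.ephemeral[path]
        return None
```

With this model, a flight stored with weak durability has no durable copy between put and flush_pending, which is exactly the window in which a crash would lose it.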

Durable Storage ID and Prefix

Durable storage ID and prefix tell the storage class which durable storage to use and whether or not to put the data there under some root path (so that you can use the same durable storage for multiple storage classes and keep the data organized). This also means that different storage classes can use different durable storage, and in the future, this could enable some “bring your own storage” types of use cases for your users.

Flight Time-To-Live (TTL)

With flight Time-To-Live (TTL), storage classes can specify how long “their” flights will remain in the system. After that time passes, flights will be made unavailable.

In non-durable storage classes, the flights might become unavailable sooner: if resources are running out, the storage class can evict the flights to make space for newer ones.

In durable storage classes, the TTL is the actual time the flights will be available for. In case the flight is evicted from the ephemeral storage tier (more on that later), it will be restored from the durable storage on next access. After the TTL passes, the flight will also be deleted from the durable storage.

Flight replicas

Optionally, the flight replicas setting directs the storage class to create multiple copies of the flights across different Longbow nodes. This is not meant to be a resilience mechanism; rather, it can be used to improve performance by making the flights available on multiple nodes.

Cache tier settings

As described earlier, each Longbow shard has several layers of ephemeral storage resources and if configured, also external durable storage (e.g. AWS S3 or network-attached storage (NAS)). To utilize each of these layers as efficiently as possible, storage class settings can configure the usage of each of these tiers separately. There are also policies that automatically move data to a slower tier after that piece of data was not accessed for some time and vice versa, moving the data to a faster tier after it has been accessed repeatedly over a short period of time.

The cache tiers each have several configuration options to allow for all of these behaviors.

Storage type

Tiers must specify the type of storage they manage. There are several options:

- memory – the data is stored in the memory of the Longbow shard.

- disk_mapped – the data is stored on the disk available to the Longbow shard and memory mapping is used when accessing the data.

- disk – the data is stored on the disk available to the Longbow shard. This disk is wiped whenever the shard restarts.

The storage types are shared by all the tiers of all the storage classes in effect (there is one memory after all), so once the storage is running out of space, flights from across the storage classes will be evicted.

For storage classes with no durability, eviction means deleting the flight forever.

For storage classes with durability, eviction deletes the flight copy from the ephemeral storage but keeps the copy in durable storage. This means that if the evicted flight is requested, it can be restored from the durable copy back into the more performant ephemeral storage.

Max flight size and Upload Spill

Each tier can specify the maximum size of flights it can accept. This is to prevent situations when one large flight being uploaded would lead to hundreds or thousands of smaller flights being evicted: it is usually preferable to serve a lot of users efficiently with smaller flights rather than only a handful with a few large ones. There is also a setting allowing the flights larger than the limit to either be rejected straight away or to spill over to another storage tier (e.g. from memory to the disk instead).

Since the flight data is streamed during the upload, the final size is not always known ahead of time. So the upload starts writing the data to the first tier and only when it exceeds the limit, the already uploaded data is moved to the next tier, and the rest of the upload stream is also redirected there. For cases when the final size is known ahead of time, the client can provide it at the upload start using a specific Flight RPC header, to avoid the potentially wasteful spill process.
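The spill decision during a streamed upload can be sketched as follows. This is a simplified simulation with just two hypothetical tiers named "memory" and "disk"; the function name, its parameters, and the declared_size argument (standing in for the size header mentioned above) are assumptions made for the example, not Longbow's API.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class UploadResult:
    tier: str         # tier that ends up holding the flight
    size: int         # total bytes received
    bytes_moved: int  # bytes copied between tiers because of a spill


def simulate_upload(
    chunks: Iterable[bytes],
    max_flight_size: int,
    spill: bool = True,
    declared_size: Optional[int] = None,
) -> UploadResult:
    """Simulate a streamed upload into 'memory' with 'disk' as the spill tier."""
    tier = "memory"
    # If the client declared the size up front, pick the right tier immediately.
    if declared_size is not None and declared_size > max_flight_size:
        if not spill:
            raise ValueError("flight exceeds the max flight size of the tier")
        tier = "disk"

    written = 0
    bytes_moved = 0
    for chunk in chunks:
        if tier == "memory" and written + len(chunk) > max_flight_size:
            if not spill:
                raise ValueError("flight exceeds the max flight size of the tier")
            # Spill: move what was already written, then redirect the rest.
            bytes_moved = written
            tier = "disk"
        written += len(chunk)
    return UploadResult(tier=tier, size=written, bytes_moved=bytes_moved)
```

The bytes_moved field shows why declaring the size helps: without it, the data received before the limit was hit has to be copied to the next tier, whereas with it the upload goes to the right tier from the first chunk.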

Priority and Spill

When a storage type is running out of resources, it might need to start evicting some of the flights to make space for newer ones. By default, the eviction is driven using the least-recently-used (LRU) policy, but for situations where more granular control is needed, the storage tiers can specify a priority: flights from a tier with a higher priority will be evicted only after all the flights with lower priority are gone.

Related to this, there is also a setting that can cause the data to be moved to another storage type instead of being evicted from the ephemeral storage altogether (similarly to the Upload Spill).
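One way to picture how priority combines with LRU is to order eviction candidates by priority first and by last access time second. The sketch below does exactly that; the CachedFlight type and the pick_evictions function are hypothetical, and a real implementation would likely maintain this ordering incrementally instead of sorting on demand.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CachedFlight:
    path: str
    size: int           # bytes occupied in the storage type
    priority: int       # priority of the tier the flight belongs to
    last_access: float  # timestamp of the most recent access


def pick_evictions(flights: List[CachedFlight], bytes_needed: int) -> List[str]:
    """Choose flights to evict: lowest priority first, LRU within a priority."""
    victims = []
    freed = 0
    for flight in sorted(flights, key=lambda f: (f.priority, f.last_access)):
        if freed >= bytes_needed:
            break
        victims.append(flight.path)
        freed += flight.size
    return victims


flights = [
    CachedFlight("hi-old", size=10, priority=2, last_access=1.0),
    CachedFlight("lo-new", size=10, priority=1, last_access=5.0),
    CachedFlight("lo-old", size=10, priority=1, last_access=2.0),
]

# Both low-priority flights go before the older but higher-priority one.
victims = pick_evictions(flights, bytes_needed=15)
```

With the spill setting enabled, the selected flights would be moved to another storage type rather than dropped; the selection logic itself would stay the same.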

Move after and Promote after

Tiers can also specify a time period after which the flights are moved to another (lower) tier. This is to prevent the “last-minute” evictions that would happen when the given storage tier is running out of resources.

Somewhat inverse to the Move after mechanism, tiers can proactively promote a flight to a higher tier after it was accessed a defined number of times over a defined period of time. This handles the situation where a lot of users start accessing the same “stale” flight at the same time: we expect that even more are coming so to improve the access time for them, we move the flight to a faster tier.

Tuning these two settings allows us to strike a good balance between having the flights most likely to be accessed the most in the fastest cache tier while being able to ingest new flights as well.
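Both mechanisms boil down to simple time-based checks. The following sketch assumes that "Move after" is measured from the last access and that "Promote after" counts accesses in a sliding time window; the names PromotionTracker and should_move_down are hypothetical and chosen only for this example.

```python
from collections import deque


def should_move_down(last_access: float, now: float, move_after_seconds: float) -> bool:
    """Move after: the flight was idle long enough to go to a lower tier."""
    return now - last_access >= move_after_seconds


class PromotionTracker:
    """Promote after: N accesses within a sliding time window."""

    def __init__(self, access_count: int, window_seconds: float):
        self.access_count = access_count
        self.window_seconds = window_seconds
        self._accesses = {}  # flight path -> deque of recent access timestamps

    def record_access(self, path: str, now: float) -> bool:
        """Record an access; return True if the flight should be promoted."""
        times = self._accesses.setdefault(path, deque())
        times.append(now)
        # Drop accesses that fell out of the window.
        while times and now - times[0] > self.window_seconds:
            times.popleft()
        return len(times) >= self.access_count


tracker = PromotionTracker(access_count=3, window_seconds=10.0)
results = [tracker.record_access("cache/report123", t) for t in (0.0, 1.0, 2.0)]
```

In this example the third access within the ten-second window triggers the promotion, while the same three accesses spread over a minute would not.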

Limit policies

To have even more control, the configuration also offers something we call Limit policies. These can affect how many resources in total a particular storage class takes up in the system. By default, without any limit policies, all the storage classes share all the resources available. Limit policies allow you to restrain some storage classes to only store data up to a limit. You can limit both the amount of data in the non-durable storage only and the total amount including the durable storage.

For example, you can configure the policies in a way that limits cache for report computations to 1GB of data in the non-durable storage but leaves it unlimited capacity in the durable storage. Or you can also impose a limit on the durable storage (likely to limit costs).

There are several types of limit policies catering to different types of use cases. One limit policy can be applied to several storage classes and more than one limit policy can govern a particular storage class.

Standard limit policies

These are the most basic policies: they set a limit on a storage class as a whole, i.e. on all the flight paths sharing the storage class’ flight path prefix. They are useful for setting a “hard” limit on storage classes that you fine-tune using other limit policy types.

Segmented limit policies

Segmented limit policies allow you to set limits on individual flight path subtrees governed by a storage class.

For example, if you have flight paths like cache/postgres and cache/snowflake and a storage class that covers the cache path prefix, you can set up such a policy that limits data from each database to take at most 1GB per database type and it would automatically cover even database types added in the future.
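A segmented limit can be thought of as a separate usage counter per flight path segment directly below the storage class prefix. The sketch below models this with a hypothetical SegmentedLimit class; the reserve-before-store approach and all names are assumptions for illustration.

```python
from collections import defaultdict


class SegmentedLimit:
    """Same byte limit for every subtree directly below the class prefix."""

    def __init__(self, prefix: str, limit_bytes: int):
        self.prefix_segments = prefix.strip("/").split("/")
        self.limit_bytes = limit_bytes
        self.usage = defaultdict(int)  # segment name -> bytes currently used

    def segment_of(self, flight_path: str) -> str:
        segments = flight_path.strip("/").split("/")
        n = len(self.prefix_segments)
        if segments[:n] != self.prefix_segments or len(segments) <= n:
            raise ValueError(f"{flight_path!r} is not governed by this policy")
        return segments[n]

    def try_reserve(self, flight_path: str, size: int) -> bool:
        """Account for a new flight if its segment stays within the limit."""
        segment = self.segment_of(flight_path)
        if self.usage[segment] + size > self.limit_bytes:
            return False
        self.usage[segment] += size
        return True


MB = 1024 ** 2
GB = 1024 ** 3
policy = SegmentedLimit("cache", limit_bytes=1 * GB)
```

Because the counters are created on first use, a new database type such as cache/bigquery gets its own 1GB budget without any configuration change.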

Hierarchical limit policies

Offering even more control than with the segmented limit policies, hierarchical limit policies allow you to model more complex use cases limiting on more than one level of the flight paths.

For example, you have a storage class that manages flights with the cache prefix. On the application level, you make sure that the flight paths for this storage class always include some kind of tenant id as the second part of the flight path, the third part is the identifier of a data source the tenant uses and finally, the fourth segment is the cache itself: cache/tenant1/dataSource1/cacheId1. Hierarchical limit policies allow you to do things like limiting tenant1 to 10GB in total and also allow them to put 6GB towards dataSource1 because they know it produces bigger data while keeping all the other tenants at 5GB by default.
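The tenant example above can be modeled by checking every level of the flight path that has a limit attached. This is a minimal sketch under our own assumptions: the HierarchicalLimit class, the overrides mapping, and the reading of "6GB towards dataSource1" as a cap on that data source are all hypothetical and not taken from Longbow's configuration.

```python
from collections import defaultdict


class HierarchicalLimit:
    """Limits on the tenant level and, optionally, on the data source level."""

    def __init__(self, default_tenant_limit: int, overrides: dict):
        self.default_tenant_limit = default_tenant_limit
        self.overrides = overrides     # path prefix -> limit in bytes
        self.usage = defaultdict(int)  # path prefix -> bytes currently used

    def _limits_for(self, flight_path: str) -> dict:
        segments = flight_path.split("/")
        tenant_key = "/".join(segments[:2])  # e.g. cache/tenant1
        source_key = "/".join(segments[:3])  # e.g. cache/tenant1/dataSource1
        limits = {tenant_key: self.overrides.get(tenant_key, self.default_tenant_limit)}
        if source_key in self.overrides:
            limits[source_key] = self.overrides[source_key]
        return limits

    def try_reserve(self, flight_path: str, size: int) -> bool:
        """Account for a new flight only if every applicable level has room."""
        limits = self._limits_for(flight_path)
        if any(self.usage[key] + size > limit for key, limit in limits.items()):
            return False
        for key in limits:
            self.usage[key] += size
        return True


GB = 1024 ** 3
policy = HierarchicalLimit(
    default_tenant_limit=5 * GB,
    overrides={"cache/tenant1": 10 * GB, "cache/tenant1/dataSource1": 6 * GB},
)
```

A store is accepted only when both the tenant-level and the data-source-level counters stay within their limits, so filling dataSource1 up to 6GB still leaves tenant1 with 4GB for its other data sources.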

Summary

As we have shown, storing any kind of large data certainly isn’t straightforward. It has many facets that need to be carefully considered. Any kind of data storage system needs to be flexible enough to allow the users to tune it according to their needs.

Longbow comes equipped with a meticulously designed tiered storage system exposed via Flight RPC that enables the users to set it up to cater to whatever use case they might have. It takes advantage of several different storage types and plays to their strengths whether it is size, speed, durability, or cost.

In effect, Longbow’s cache system provides virtually unlimited storage size thanks to the very cheap durable storage solutions while keeping the performance much better for the subset of data that is being actively used.

Want to learn more?

As we mentioned in the introduction, this is part of a series of articles, where we take you on a journey of how we built our new analytics stack on top of Apache Arrow and what we learned about it in the process.

Other parts of the series are about the Building of the Modern Data Service Layer, Project Longbow, details about the Flight RPC, and last but not least, how well DuckDB quacks with Apache Arrow!

As you can see in the article, we are opening our platform to an external audience. We not only use (and contribute to) state-of-the-art open-source projects, but we also want to allow external developers to deploy their services into our platform. Ultimately, we are thinking about open-sourcing Longbow. Would you be interested in it? Let us know, your opinion matters!

If you’d like to discuss our analytics stack (or anything else), feel free to join our Slack community!

Want to see how well it all works in practice? You can try the GoodData free trial! Or if you’d like to try our new experimental features enabled by this new approach (AI, Machine Learning and much more), feel free to sign up for our Labs Environment.
