FlexConnect: Integrate Any APIs Into BI

Enhancing your business intelligence (BI) reports with real-time data through APIs can provide valuable insights without manually scraping and storing the data. FlexConnect empowers you to integrate any API directly into your reports (no database needed), streamlining the data enrichment process!

Let me show you an example of how this would look in practice. I absolutely love ice cream, so I chose to do the whole thing for an ice cream shop. Reader discretion is advised, as you might suddenly get the urge to eat some!

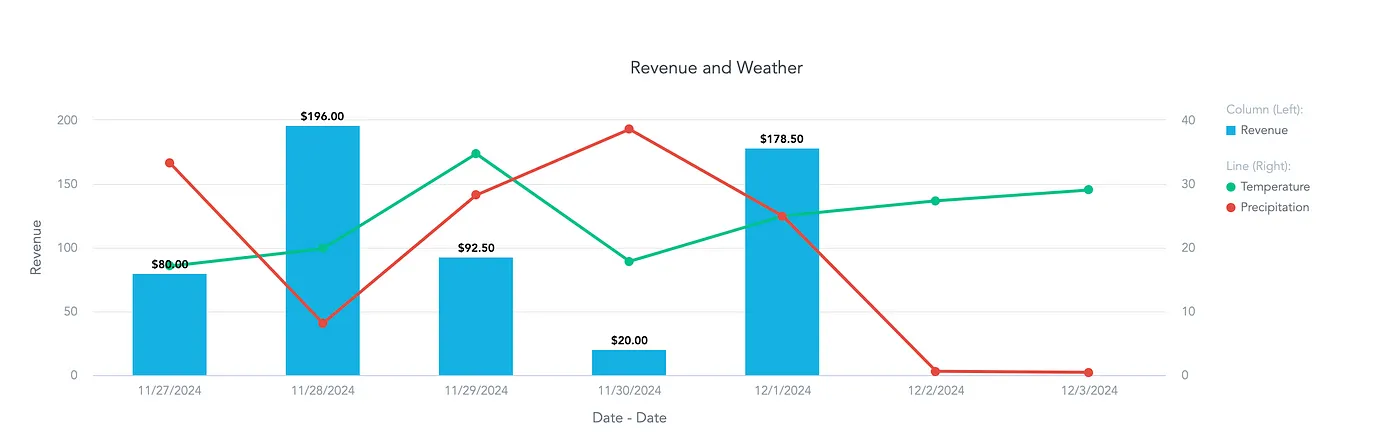

Let’s explore using FlexConnect to incorporate weather data into your BI reports. We’ll focus on how weather conditions — specifically temperature and chance of rain — affect ice cream sales.

We will also cover how to manage filters with FlexConnect to make your reports dynamic and responsive.

This article is part of the FlexConnect Launch Series. To dive deeper into the overall concept, be sure to check out our architectural overview. Don’t miss our other articles on topics such as API ingestion, NoSQL integration, Kafka connectivity, Machine Learning, and Unity Catalog!

Setting Up the Weather API Integration

We’ll use WeatherAPI.com, a reliable and user-friendly service that provides historical and forecasted weather data for specified locations.

Starting with the FlexConnect Template

Luckily, all the heavy lifting has already been done and you can simply use the FlexConnect Template Repository provided by GoodData. This template provides a solid foundation and is easy to customize for your specific needs.

The template includes three main methods crucial for our integration:

__init__: Registers the class as a data source in the Logical Data Model.on_load: This method sets up static data, such as the API key. In machine learning scenarios, it can also load models into memory to optimize performance.call: The core method where data retrieval and processing occur. For our weather API integration, this method will handle date filters, extract the location, and fetch data from the API.

Handling Date Filters

We must handle date filters properly since we’re dealing with historical and forecasted weather data. Users might apply absolute date filters (specific start and end dates) or relative date filters (e.g., “last 2 weeks”). The following function demonstrates how to extract and process these date filters:

def _handle_date(self, context: Optional[ExecutionContext]) -> tuple[str, str]:

"""Extract the from_date and to_date from the execution context filters."""

now = datetime.now()

for date_filter in context.filters:

if isinstance(date_filter, AbsoluteDateFilter):

return date_filter.from_date, date_filter.to_date

elif isinstance(date_filter, RelativeDateFilter):

if date_filter.granularity == "DAY":

from_date = (now + timedelta(days=date_filter.from_shift - 1)).date()

to_date = (now + timedelta(days=date_filter.to_shift)).date()

if date_filter.to_shift == 0:

to_date = now.date()

elif date_filter.granularity == "WEEK":

# Align to the beginning of the from_week and the end of the to_week

current_week_start = now - timedelta(days=now.weekday())

from_date = current_week_start + timedelta(weeks=date_filter.from_shift)

to_date = current_week_start + timedelta(weeks=date_filter.to_shift + 1) - timedelta(seconds=1)

if date_filter.to_shift == 0:

# Align to the end of the current week (Sunday)

to_date = current_week_start + timedelta(days=6, hours=23, minutes=59, seconds=59)

else:

# Handle other granulatiries as well

return from_date.isoformat(), to_date.isoformat()

# Default to last week + 1 day ahead if no date filter is provided

from_date = (now - timedelta(days=7)).date().isoformat()

to_date = (now + timedelta(days=1)).date().isoformat()

return from_date, to_dateThis function checks for absolute and relative date filters and calculates the appropriate from_date and to_date based on the granularity (day or week). If no date filter is provided, it defaults to the last week.

Extracting the Location

Weather data is location-specific, so we must extract the desired city from the execution context filters. If no city is specified, we’ll default to “San Francisco.” Here’s the function that handles this:

def extract_location(self, execution_context: ExecutionContext) -> str:

"""Extract the location from the execution context filters."""

# Default location

location = "San Francisco"

for filter in execution_context.filters:

if (

isinstance(filter, ExecutionContextPositiveAttributeFilter)

and filter.label_identifier == "customer_city"

):

location = filter.values[0]

break # Assuming we take the first matching filter

return locationThis function searches the filters for a positive attribute filter on “customer_city” and extracts the city value. Note that this can easily be more robust, and you could perhaps do multiple calls, if you filter on more cities at once.

Polling the Weather API

With the date range and location determined, we can now retrieve the weather data from the API. We’ll demonstrate how to get historical data; the process for forecast data is similar.

def get_historical_data(self, from_date: str, to_date: str, location: str) -> dict[str, Any]:

"""Retrieve and process historical weather data."""

clamped_end_date = min(datetime.now().date(), datetime.fromisoformat(to_date)).isoformat()

params = {

"key": self.api_key,

"q": location,

"dt": from_date,

"end_dt": clamped_end_date

}

response = requests.get(HISTORICAL_URL, params=params)

response.raise_for_status()

data = response.json()

output = {

"Date": [],

"Source": [],

"Temperature": [],

"Rain": []

}

for day in data.get("forecast", {}).get("forecastday", []):

for hour in day.get("hour", []):

output["Date"].append(datetime.fromtimestamp(hour["time_epoch"]))

output["Source"].append("Observation")

output["Temperature"].append(hour["temp_c"])

output["Rain"].append(hour["chance_of_rain"])

return outputThis function does three things:

- Makes a GET request to the historical weather API endpoint.

- Processes the JSON response.

- Organizes the data into a dictionary suitable for conversion to a table.

Merging Historical and Forecast Data

After retrieving both historical and forecast data, we merge them and return the combined dataset:

# Merge historical and forecast data

output = {

"Date": historical_data["Date"] + forecast_data["Date"],

"Source": historical_data["Source"] + forecast_data["Source"],

"Temperature": historical_data["Temperature"] + forecast_data["Temperature"],

"Rain": historical_data["Rain"] + forecast_data["Rain"],

}

return pyarrow.from_pydict(output)Combining the data provides a continuous timeline of weather information, which can be invaluable for analyzing trends and making predictions.

Hosting and Deployment

While the integration is straightforward, you must host this function and make it accessible as an API. Refer to the Architecture Overview for guidance on setting up the function as a data source.

Visualizing the Weather Data in BI Reports

There is no Revenue for the next two days, as there are no future data for it.

Filtering by City

If you want to focus on a specific location, such as Atlanta, you can apply filters in GoodData. The extract_location function ensures that the weather data corresponds to the selected city.

Planning Based on Forecasts

By including forecasted weather data, you can make proactive business decisions. For instance, if heavy rain is forecasted in the coming days, you might adjust your ice cream production accordingly to prevent waste.

Unlocking the strength of APIs With FlexConnect

Integrating external APIs into your BI reports using FlexConnect unlocks many possibilities. In this example, we’ve shown how to incorporate weather data to enhance your sales analysis. This approach can be extended to other APIs, enriching your insights and driving better decision-making.

Embrace the power of APIs and make your BI reports more dynamic and informative!

Learn More

FlexConnect is built for developers and companies looking to streamline the integration of diverse data sources into their BI workflows. It gives you the flexibility and control you need to get the job done with ease.

Explore detailed use cases like connecting APIs, running local machine learning models, handling semi-structured NoSQL data, streaming real-time data from Kafka, or integrating with Unity Catalog — each with its own step-by-step guide.

Want the bigger picture? Check out our architecture article on FlexConnect, or connect with us through our Slack community for support and discussion.