FlexConnect: Integrate NoSQL Into BI

Over the past several years, NoSQL databases (and document-oriented databases specifically) have seen significant adoption in fields like IoT and E-Commerce. They provide some interesting advantages over more traditional SQL-based databases, including flexibility, scalability, and ease of use for developers. However, while flexibility is a benefit, it can make it challenging to connect these databases to analytics platforms.

In this article, we show how FlexConnect, a new GoodData feature, can be used to connect a MongoDB collection to GoodData using a little Python code. We demonstrate this using the MongoDB Sample Mflix demo data about movies — so that it is easy to follow. We also take advantage of MongoDB client supporting the Apache Arrow format to achieve much simpler and more efficient integration.

This article is part of the FlexConnect Launch Series. To dive deeper into the overall concept, be sure to check out our architectural overview. Don’t miss our other articles on topics such as API ingestion, NoSQL integration, Kafka connectivity, Machine Learning, and Unity Catalog!

Challenges

Unlike most SQL-based databases, document databases can store data with complex structures, including nested objects, lists, and more. The schema of the documents is also not as rigid as in the SQL world. These factors make it quite challenging to fit the data into a Star- or Snowflake schema, commonly used in BI to provide self-service capabilities to end users. Any solution that aims to connect those two worlds needs to be flexible enough to allow for customization of the mapping logic. This is essential to cope with the dynamic and loosely-structured nature of the documents.

What Is FlexConnect?

FlexConnect is a new way of providing data to be used in GoodData. It enables connections to virtually any data source while integrating with the rest of GoodData, just like any natively supported data source type.

I like to think of it as “code as a data source,” because that is essentially what it does: it allows the use of arbitrary code to generate data and act as a data source in GoodData. The contract it needs to implement is quite simple: based on the execution definition, return the relevant data as an Apache Arrow Table over the Arrow Flight RPC protocol (I highly recommend our detailed primer on Arrow Flight RPC). FlexConnect is then deployed anywhere you like — as long as GoodData can reach it and it acts as “just another data source.” This article goes into much more detail, so I highly recommend reading it next. For the purpose of this article, we will focus on the code part of FlexConnect, glossing over the infrastructure side of things.

FlexConnect for Movie Collection

To showcase FlexConnect’s capabilities, we will use MongoDB, a popular choice among document-oriented databases, as a representative. We will use their Sample Mflix dataset to create a simple FlexConnect implementation exposing some of the data to GoodData.

To get started, we clone the gooddata-flexconnect-template repository. This bootstraps the necessary boilerplate we need to get started. It is a production-ready setup based on our gooddata-flight-server package that handles the necessary infrastructure for exposing data using the Apache Arrow Flight RPC protocol, the basis of FlexConnect.

Dependencies

Once that is done, we can start implementing the MongoDB connector. MongoDB provides a Python client for easy interaction with the databases. They also provide a plugin of sorts that adds some Apache Arrow-friendly APIs. We will be using both, so we need to add those to our project:

- pymongo - the base client

- pymongoarrow -the Apache Arrow “plugin”

Schemas

Next, let’s define the data schema we will expose to GoodData and the schema of the data we will pull from MongoDB. Thanks to pymongoarrow, we can define both at once!

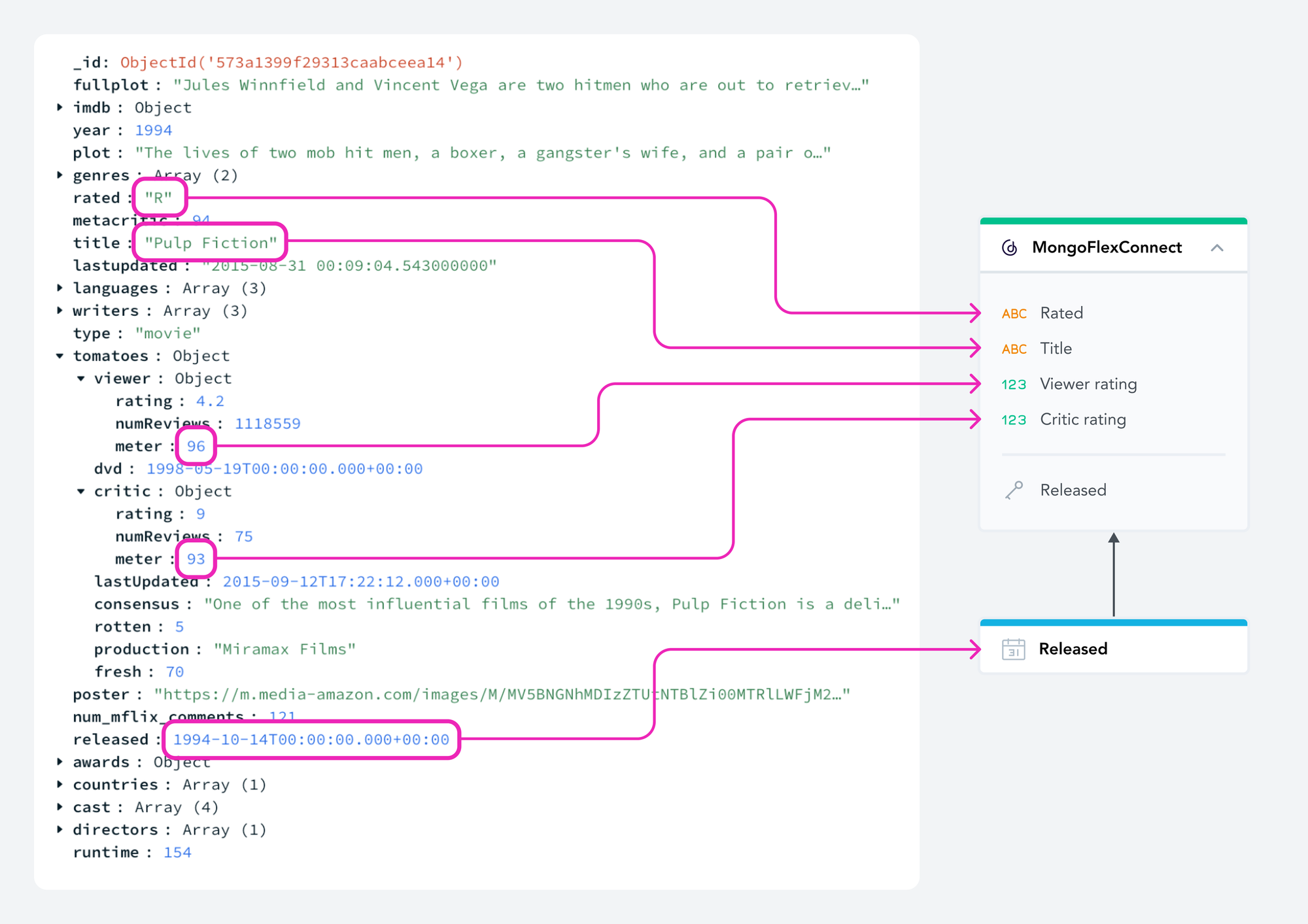

Schema of the mapping of the JSON on GoodData table

import pyarrow

from pymongoarrow.api import Schema as MongoSchema

# This is the schema the data returned by the MongoDB query will have.

DbSchema = MongoSchema(

{

"title": pyarrow.string(),

"rated": pyarrow.string(),

"released": pyarrow.timestamp("ms"),

"critic_rating": pyarrow.int64(),

"viewer_rating": pyarrow.int64(),

}

)

# We need to advertise the schema of the data we are going to send to GoodData.

# This is part of the FlexConnect function contract.

Schema = DbSchema.to_arrow()As you can see in the code snippet, we define the schema of the results we want to get from MongoDB and then expose it as a pure Apache Arrow Schema object to GoodData. This ensures that we will not need to convert the results from MongoDB to GoodData-compatible data; we will just pass them along (more details to follow).

Report execution code

Now, we will focus on the part where FlexConnect handles the report execution requests. These correspond to a user opening a report and requesting data for it. First, to make things leaner, we define a simple contextmanager that exposes the “movies” collection and ensures the connection is closed appropriately.

import os

from collections.abc import Generator

from contextlib import contextmanager

from pymongo import MongoClient

from pymongo.synchronous.collection import Collection

CONNECTION_STRING = os.getenv("MONGO_CONN_STRING")

@staticmethod

@contextmanager

def _get_movie_collection() -> Generator[Collection, None, None]:

"""

Get the MongoDB collection with movies and make sure it is closed after use.

"""

client = MongoClient(CONNECTION_STRING)

try:

db = client.get_database("sample_mflix")

yield db.get_collection("movies")

finally:

client.close()Next, we move to the code for the report execution. We connect to the MongoDB database and query it for the necessary information. We use the DbSchema defined earlier to make MongoDB return the data directly in the format we want to pass to GoodData (remember, we made those two schemas identical for this very reason).

import gooddata_flight_server as gf

from gooddata_flexconnect import ExecutionContext, ExecutionType

def call(

self,

parameters: dict,

columns: Optional[tuple[str, ...]],

headers: dict[str, list[str]],

) -> gf.ArrowData:

execution_context = ExecutionContext.from_parameters(parameters)

if execution_context.execution_type == ExecutionType.REPORT:

with self._get_movie_collection() as collection:

return collection.find_arrow_all(

# We can pass the filters directly to the find_arrow_all method

# to optimize the query and avoid unnecessary data transfer.

query=self._report_filters_to_mongo_query(

execution_context.report_execution_request.filters,

execution_context.timestamp,

),

projection={

# We can project fields as they are in the MongoDB collection

"title": "$title",

"rated": "$rated",

"released": "$released",

# We can project nested fields as well

"critic_rating": "$tomatoes.critic.meter",

"viewer_rating": "$tomatoes.viewer.meter",

},

schema=self.DbSchema,

)

elif execution_context.execution_type == ExecutionType.LABEL_ELEMENTS:

... # will be discussed in the next snippetYou may have noticed that we also define the query to limit the number of results based on the filters from the execution request. While this is optional, it is a good practice as it can make things significantly faster instead of returning data from all the documents all the time. This will be discussed later in a separate chapter. The important thing to note here, though, is that even if you do not filter the data, GoodData will still ensure all the execution filters are applied correctly, so filtering here is mainly a means of optimizing things.

Label elements code

The other type of execution we need to handle is requests for label elements. For example, all the different ratings of all of the movies. These are primarily used to populate the UI label filter pickers. Basically, we get a label’s id, and we should return all the labels’ distinct values in our data.

import gooddata_flight_server as gf

import pyarrow

from gooddata_flexconnect import ExecutionContext, ExecutionType

def call(

self,

parameters: dict,

columns: Optional[tuple[str, ...]],

headers: dict[str, list[str]],

) -> gf.ArrowData:

execution_context = ExecutionContext.from_parameters(parameters)

if execution_context.execution_type == ExecutionType.REPORT:

... # see the previous snippet for the implementation

elif execution_context.execution_type == ExecutionType.LABEL_ELEMENTS:

with self._get_movie_collection() as collection:

# Get the label we want to get elements for.

# No need for mapping here: the label has the same name as the field in the MongoDB collection.

label = execution_context.label_elements_execution_request.label

# We can use the distinct method to get unique values of a field.

# There is unfortunately no Arrow-native way to do this, so we need to convert the result to a table.

elems = collection.distinct(

key=label,

filter=self._elements_request_to_mongo_query(

execution_context.label_elements_execution_request

),

)

# Add None to the list of elements to represent the null value:

# this will not be returned by the distinct method because it is a part of an index.

elems.append(None)

# We need to return a table with a single column with the label elements.

# This needs to be a subset of the schema we advertise to GoodData.

return pyarrow.Table.from_pydict(

{label: elems},

schema=pyarrow.schema({label: pyarrow.string()}),

)This time, we cannot use a pymongoarrow API as there seems to be no counterpart to the distinct operation, so we need to convert the results to an Apache Arrow Table manually. Taking advantage of the fact that all the labels in our dataset are strings, we can construct the simple one-column schema easily.

Similar to the report execution, you can see we use a filter expression to limit the data transferred by reflecting the user filters on the returned elements (typically what they wrote in a search box). This will be discussed later, but as in the report execution case, even if you skip this, GoodData will apply all the filters anyway.

In action within GoodData

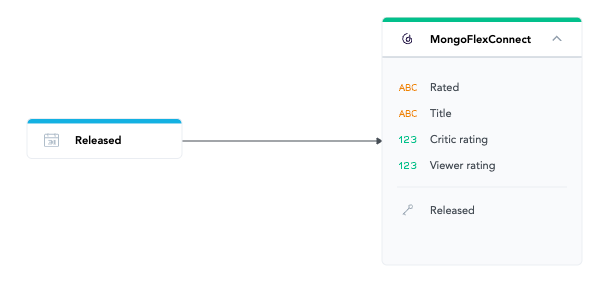

Once the code is written and FlexConnect is deployed, we can connect it to GoodData and use it for analytics. Using either the UI or the API, we can create a new data source of the FLEXCONNECT data source type. Then, using the Logical Data Modeler, we can add our FlexConnect function dataset to the LDM.

View of the Logical Data Model

And that is it. Now, we can use the Analytical Designer to create some interesting reports. For example, we can investigate how much the viewer and critic ratings differ based on the release month among PG-13-rated movies in the ‘90s.

Sample report using the FlexConnect data from MongoDB

Optimizing the data transfers

This section covers the additional steps you can take to make your FlexConnect function more efficient. This is optional, but beneficial. We will cover how to limit the data transferred during the MongoDB communication by leveraging the filters specified in the execution definitions. Keep in mind that the optimizations do not have to be perfect, you can take an iterative approach to them, and GoodData will still ensure all the data is displayed correctly every time.

Label elements

For label elements, we can keep things simple by respecting the search query the user typed into the search box. This can significantly reduce the data transferred, especially for labels that have many different elements.

We will show you only some of the optimizations here. For complete code and examples of these optimizations, check out our GitHub repository. This will help you dive deeper into the implementation details and adapt the techniques to your use case.

from gooddata_flexconnect import LabelElementsExecutionRequest

@staticmethod

def _elements_request_to_mongo_query(

request: LabelElementsExecutionRequest,

) -> dict[str, dict]:

"""

Convert GoodData label elements request to MongoDB query.

"""

query = {}

if request.pattern_filter:

query[request.label] = {

"$regex": request.pattern_filter,

"$options": "i", # the search should be case-insensitive

}

return queryReport execution

For report executions, the filter variability is a bit greater as there are quite a few filter types. However, we can only focus on the ones we expect to be used the most or those that can have the biggest impact.

The snippet in the GitHub repo shows how to reflect attribute and date filters in the MongoDB queries for report executions. We use the standard MongoDB query operators and fill them with the appropriate values.

Do More With FlexConnect

Bridging the gap between the semi-structured world of document databases and the structured world of BI tools is not a trivial task. In this article we have shown that using GoodData FlexConnect, doing so is straightforward with a bit of Python code. It offers great flexibility and is easy to get started with.

Learn More

FlexConnect is built for developers and companies looking to streamline the integration of diverse data sources into their BI workflows. It gives you the flexibility and control you need to get the job done with ease.

Explore detailed use cases like connecting APIs, running local machine learning models, handling semi-structured NoSQL data, streaming real-time data from Kafka, or integrating with Unity Catalog — each with its own step-by-step guide.

Want the bigger picture? Check out our architecture article on FlexConnect, or connect with us through our Slack community for support and discussion.