FlexConnect: Cross-tenant Benchmarking

Written by Dan Homola |

Table of Contents

It is only natural that people and organizations tend to compare themselves to others: it can drive positive change and improvement. For BI solutions that operate on data for data of many (often competing) tenants, it can be a valuable selling point to allow the tenants to compare themselves against others. These can be different businesses, departments in the same business, or even individual teams.

Since the data of each tenant is often sensitive and proprietary to each tenant, we need to take some extra steps to make the comparison useful without outright releasing the other tenant’s data. In this article, we describe the challenges unique to benchmarking and illustrate how the GoodData FlexConnect data source can be used to overcome them.

Benchmarking and its challenges

There are two aspects we need to balance when implementing a benchmarking solution:

- Aggregating data across multiple peers

- Picking only relevant peers

First, we need to aggregate the benchmarking data across multiple peers so that we do not divulge data about any individual peer. We must choose an appropriate granularity (or granularities) on which the aggregation happens. This is very domain-specific, but some common granularities to aggregate peers are:

- Geographic: same country, continent, etc.

- Industry-based: same industry

- Aspect-based: same property (e.g. public vs private companies)

Second, we need to pick peers that are relevant to the given tenant: comparing to the whole world at once is very rarely useful. Instead, the chosen peers should be in the “same league” as the tenant that is doing the benchmarking. There can also be compliance concerns at play: some tenants can contractually decline to be included in the benchmarks, and so on.

All of this can make the algorithm to choose the peers very complex: often too complex to implement using traditional BI approaches like SQL. We believe that GoodData FlexConnect is a good choice to implement the benchmarking instead. Using Python to implement arbitrarily complex benchmarking algorithms while plugging seamlessly into GoodData as “just another data source”.

What is FlexConnect

FlexConnect is a new way of providing data to be used in GoodData. I like to think of it as “code as a data source” because that is essentially what it does - it allows using arbitrary code to generate data and act as a data source in GoodData.

The contract it needs to implement is quite simple. The FlexConnect gets an execution definition and its job is to return a relevant Apache Arrow Table. There is our FlexConnect Architecture article that goes into much more detail, I highly recommend reading it next.

For the purpose of this article, we will focus on the code part of the FlexConnect, glossing over the infrastructure side of things.

The project

To illustrate how FlexConnect can serve benchmarking use cases, we will use the same project available in the GoodData Trial. It consists of one “global” workspace with data for all the tenants and then several tenant-specific workspaces.

We want to extend this solution with a simple benchmarking capability using FlexConnect so that tenant workspaces can compare themselves to one another.

More specifically, we will add the capability to benchmark the average amount of returns across the different product categories. We will pick the peers by comparing their total number of orders and will pick those competitors that have a similar number of orders as the tenant running the benchmarking.

The solution

The solution uses a FlexConnect to select the appropriate peers based on the selected criteria and then runs the same execution against the global workspace with an extra filter making sure that only the peers are used.

The schema of the data returned by the function makes sure that no individual peer can be visible: there simply is not a column that would hold that information. Let’s dive into the relevant details.

The FlexConnect outline

The main steps of the FlexConnect is as follows:

- Determine which tenant corresponds to the current user

- Use a custom peer selection algorithm to select appropriate peers to get the comparative data

- Call the global workspace in GoodData to get the aggregate data using the peers from the previous step

The FlexConnect returns data conforming to the following schema:

import pyarrow

Schema = pyarrow.schema(

[

pyarrow.field("wdf__product_category", pyarrow.string()),

pyarrow.field("mean_number_of_returns", pyarrow.float64()),

]

)As you can see, the schema returns a benchmarking metric sliced by individual product categories. This gives us very strict control about which granularities of the benchmarking data we want to allow: there is no way a particular competitor would leak here.

You might wonder why the product category column has such a strange name. This name will make it much easier to reuse existing Workspace Data Filters (WDF), as they use the same column name - we discuss it later in the article.

Current tenant detection

First, we need to determine which tenant is the one we are choosing the peers for. Thankfully, each FlexConnect invocation receives the information about which workspace it is being called from. We can use this to map the workspace to the tenant it corresponds to.

For simplicity’s sake, we use a simple lookup table in the FlexConnect itself, but this logic can be as complex as necessary – in real life scenarios, this is often stored in some data warehouse and you could query for this information (and possibly caching it).

import gooddata_flight_server as gf

TENANT_LOOKUP = {

"gdc_demo_..1": "merchant__bigboxretailer",

"gdc_demo_..2": "merchant__clothing",

"gdc_demo_..3": "merchant__electronics",

}

# This is the API you need to implement, the result has to adhere to the Schema you defined earlier

def call(

self,

parameters: dict,

columns: Optional[tuple[str, ...]],

headers: dict[str, list[str]],

) -> gf.ArrowData:

execution_context = ExecutionContext.from_parameters(parameters)

tenant = TENANT_LOOKUP.get(execution_context.workspace_id)

peers = self._get_peers(tenant)

return self._get_benchmark_data(

peers, execution_context.report_execution_request

)Peer selection

With the current tenant known, we can then select the peers for the benchmarking. We use a custom SQL query, which we run against the source database. This query selects peers that have similar values in the number of orders (we consider competitors that have 80-200% the amount of our order quantity). Since the underlying database is Snowflake, we use the Snowflake-specific syntax to inject the current tenant into the query.

Please keep in mind that the fact we use SQL here is meant to illustrate that the peer selection can use any algorithm you want and can be as complex as needed based on business or compliance needs. E.g., it could contact some external API.

import os

import snowflake.connector

def _get_connection(self) -> snowflake.connector.SnowflakeConnection:

... # omitted for brevity

def _get_peers(self, tenant: str) -> list[str]:

"""

Get the peers that have comparable number of orders to the given tenant.

:param tenant: the tenant for which to find peers

:return: list of peers

"""

with self._get_connection() as conn:

cursor = conn.cursor()

cursor.execute(

"""

WITH PEER_STATS AS (

SELECT COUNT(*) AS total_orders,

"wdf__client_id" AS client_id,

IFF("wdf__client_id" = %s, 'current', 'others') AS client_type

FROM TIGER.ECOMMERCE_DEMO_DIRECT."order_lines"

GROUP BY "wdf__client_id", client_type

),

RELEVANT_PEERS AS (

SELECT DISTINCT others.client_id

FROM PEER_STATS others CROSS JOIN PEER_STATS curr

WHERE curr.client_type = 'current'

AND others.client_type = 'others'

AND curr.total_orders BETWEEN others.total_orders * 0.8 AND others.total_orders * 2

)

SELECT * FROM RELEVANT_PEERS

""",

(tenant,),

)

record = cursor.fetchall()

return [row[0] for row in record]Benchmarking data computation

Once we have the peers ready, we can query the global GoodData workspace for the benchmarking data. We can take advantage of the fact that we get the information about the original execution definition passed to the FlexConnect when invoked.

This allows us to keep any filters applied to the report: without this, the benchmarking data would be filtered differently, rendering it meaningless. The relevant part of the code looks like this:

import os

import pyarrow

from gooddata_flexfun import ReportExecutionRequest

from gooddata_pandas import GoodPandas

from gooddata_sdk import (

Attribute,

ExecutionDefinition,

ObjId,

PositiveAttributeFilter,

SimpleMetric,

TableDimension,

)

GLOBAL_WS = "gdc_demo_..."

def _get_benchmark_data(

self, peers: list[str], report_execution_request: ReportExecutionRequest

) -> pyarrow.Table:

# GoodPandas = GoodData -> Pandas

pandas = GoodPandas(os.getenv("GOODDATA_HOST"), os.getenv("GOODDATA_TOKEN"))

(frame, metadata) = pandas.data_frames(GLOBAL_WS).for_exec_def(

ExecutionDefinition(

attributes=[Attribute("product_category", "product_category")],

metrics=[

SimpleMetric(

"return_unit_quantity",

ObjId("return_unit_quantity", "fact"),

"avg",

)

],

filters=[

*report_execution_request.filters,

# add a filter limiting the scope to the peers

PositiveAttributeFilter(ObjId("client_id", "label"), peers),

],

dimensions=[

TableDimension(["product_category"]),

TableDimension(["measureGroup"]),

],

)

)

frame = frame.reset_index()

frame.columns = ["wdf__product_category", "mean_number_of_returns"]

return pyarrow.Table.from_pandas(frame, schema=self.Schema)Changes to LDM

Once the FlexConnect is running somewhere reachable from GoodData (e.g., AWS Lambda), we can connect the FlexConnect as a data source.

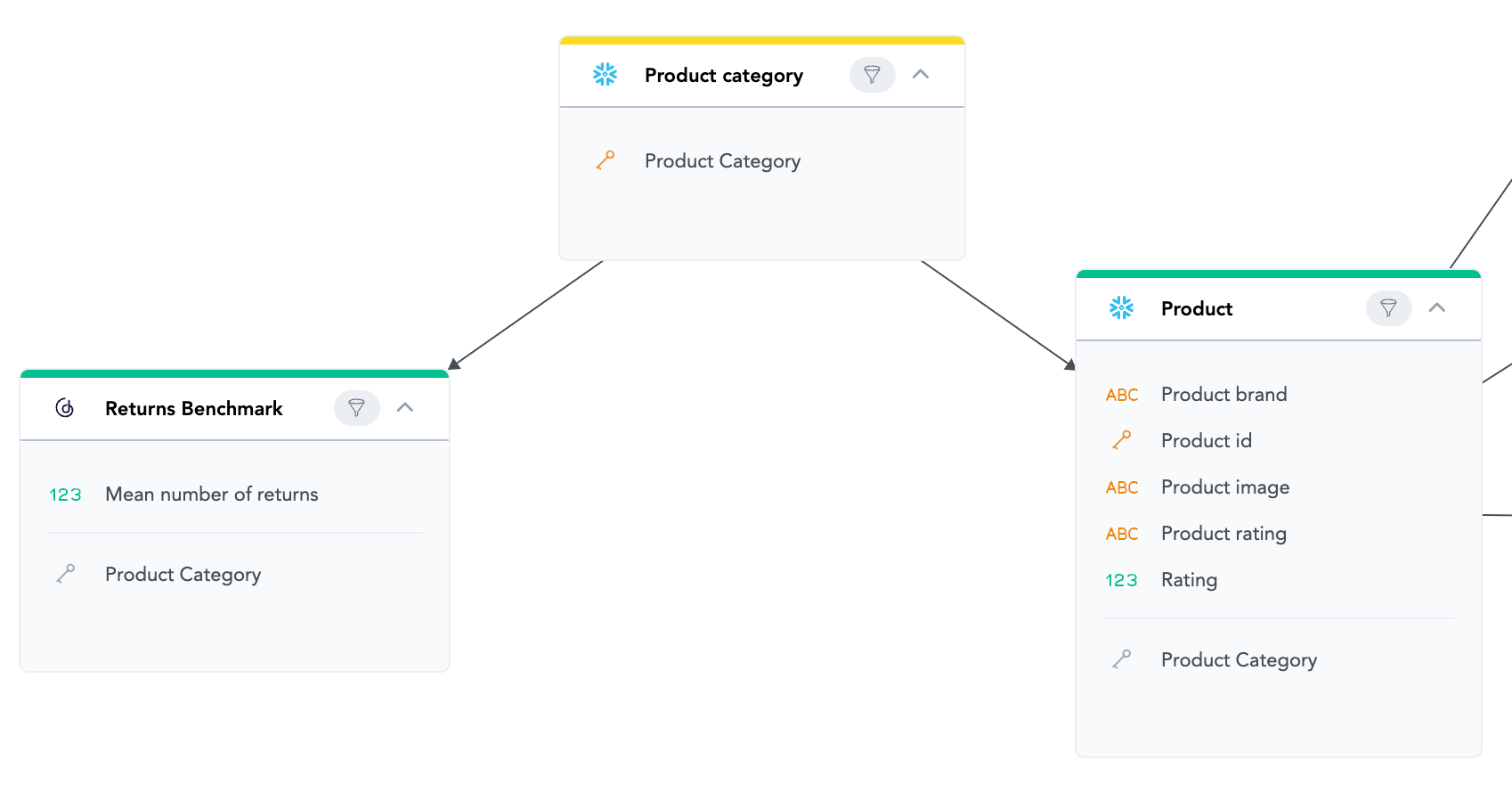

To be able to connect the dataset from it to the rest of the logical data model, we need to make two changes to the existing model first:

- Promote product category to a standalone dataset

- Apply the WDF that exists on the product category to new and benchmarking datasets

Since our benchmarking function is sliceable by product category, we need to promote product category to a stand alone dataset. This will allow it to act as a bridge between the benchmarking dataset and the rest of the data.

We need to apply the WDF that exists on the product category in the model to both the new and the benchmarking datasets. This ensures the benchmark will not leak product categories available to some of the peers but not to the current tenant. This also shows how seamlessly the FlexConnects fit into the rest of GoodData: we treat them the same way we would treat any other dataset.

Let’s have a look at the before and after screenshots of the relevant part of the logical data model (LDM).

LDM before the changes

LDM after the changes

In Action

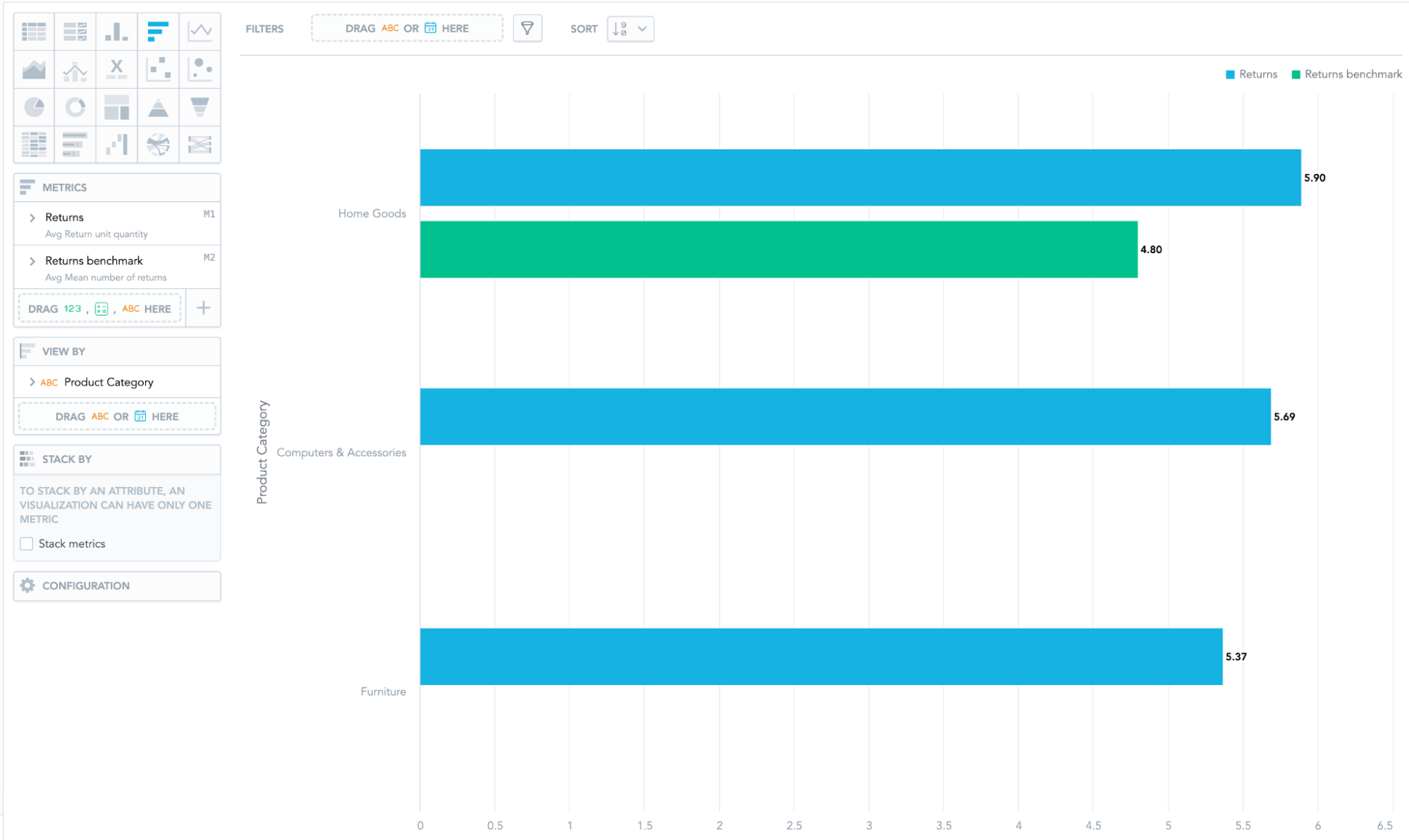

With these changes in place, we can finally use the benchmark in our analytics! Below is an example of a simple table comparing the returns of a given tenant to its peers.

Example benchmarking insight

In this particular insight, the tenant sees that their returns for Home Goods are a bit higher than those of their peers, so maybe there is something to be investigated there.

There is no data for some of the product categories, but that is to be expected: sometimes there are no relevant peers for a given category, so it is completely fine that the benchmark returns nothing for it.

Summary

Benchmarking is a deceptively complicated problem: we must balance the usefulness of the values with compliance to the confidentiality principles. This can prove to be quite hard to implement in traditional data sources. We have outlined a solution based on FlexConnect that offers much greater flexibility both in the peer selection process and the aggregated data computation.

Want to Learn More?

If you want to learn more about GoodData FlexConnect, I highly recommend you read the aforementioned architectural article.

If you’d like to see more of FlexConnect in action, check out our machine learning or NoSQL articles.

Written by Dan Homola |