Extending a CI/CD Data Pipeline With Meltano

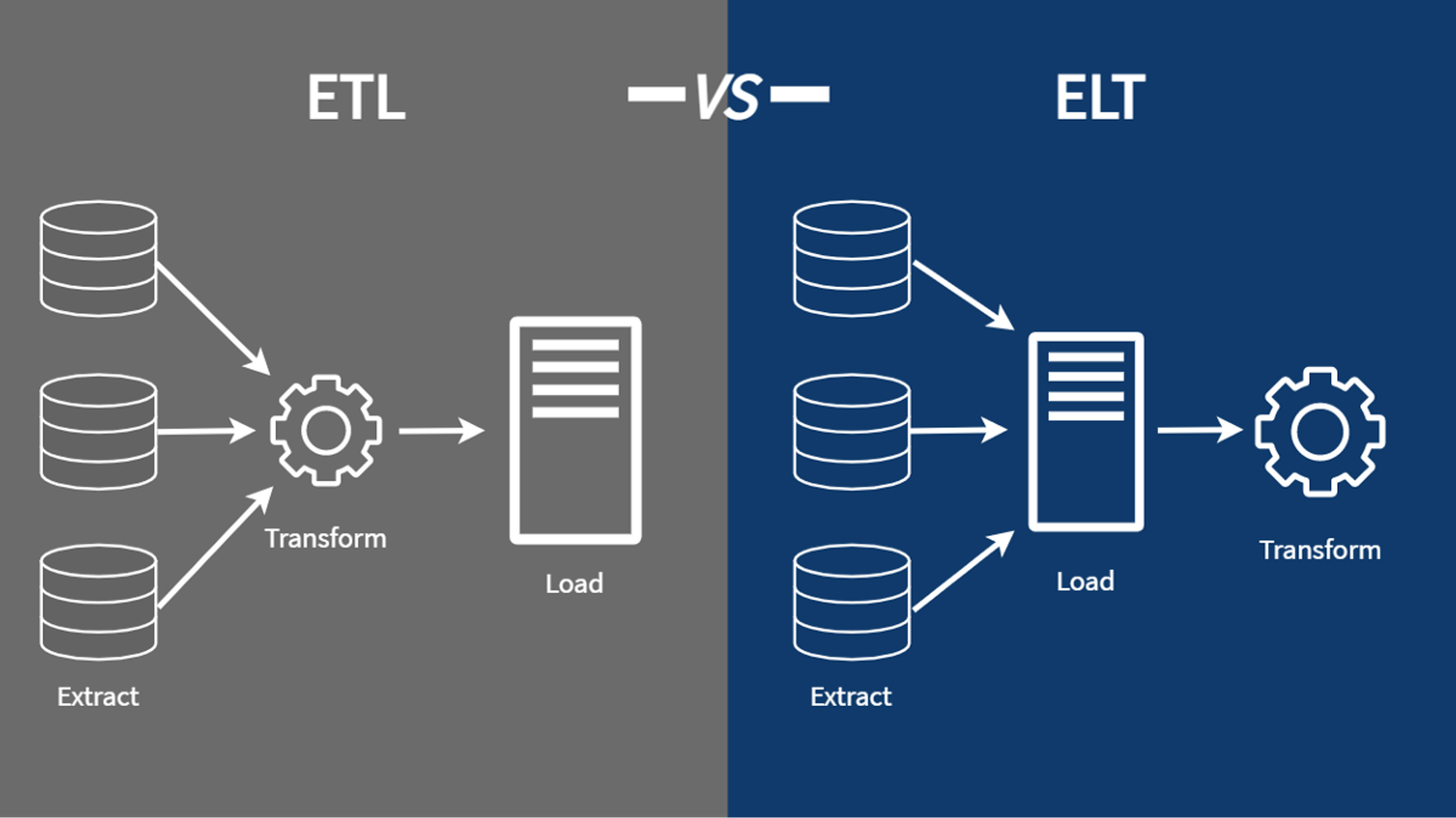

What the Hack Is the "EL" in ELT?

ELT (Extract-Load-Transform) consolidates data from any data sources into a central data warehouse (data lake, lakehouse).

The "EL" part is responsible for extracting data from various sources and loading them into a data warehouse. The "T" part is responsible for transforming loaded tables into a data model ready for analytics use cases.

In the past, it was called ETL, because databases were not capable of transforming large data volumes, but that is no longer the case; Clustered columnar MPP databases like Snowflake, BigQuery, Redshift or Vertica can do the transformation job.

Removing Custom EL From Our Pipeline

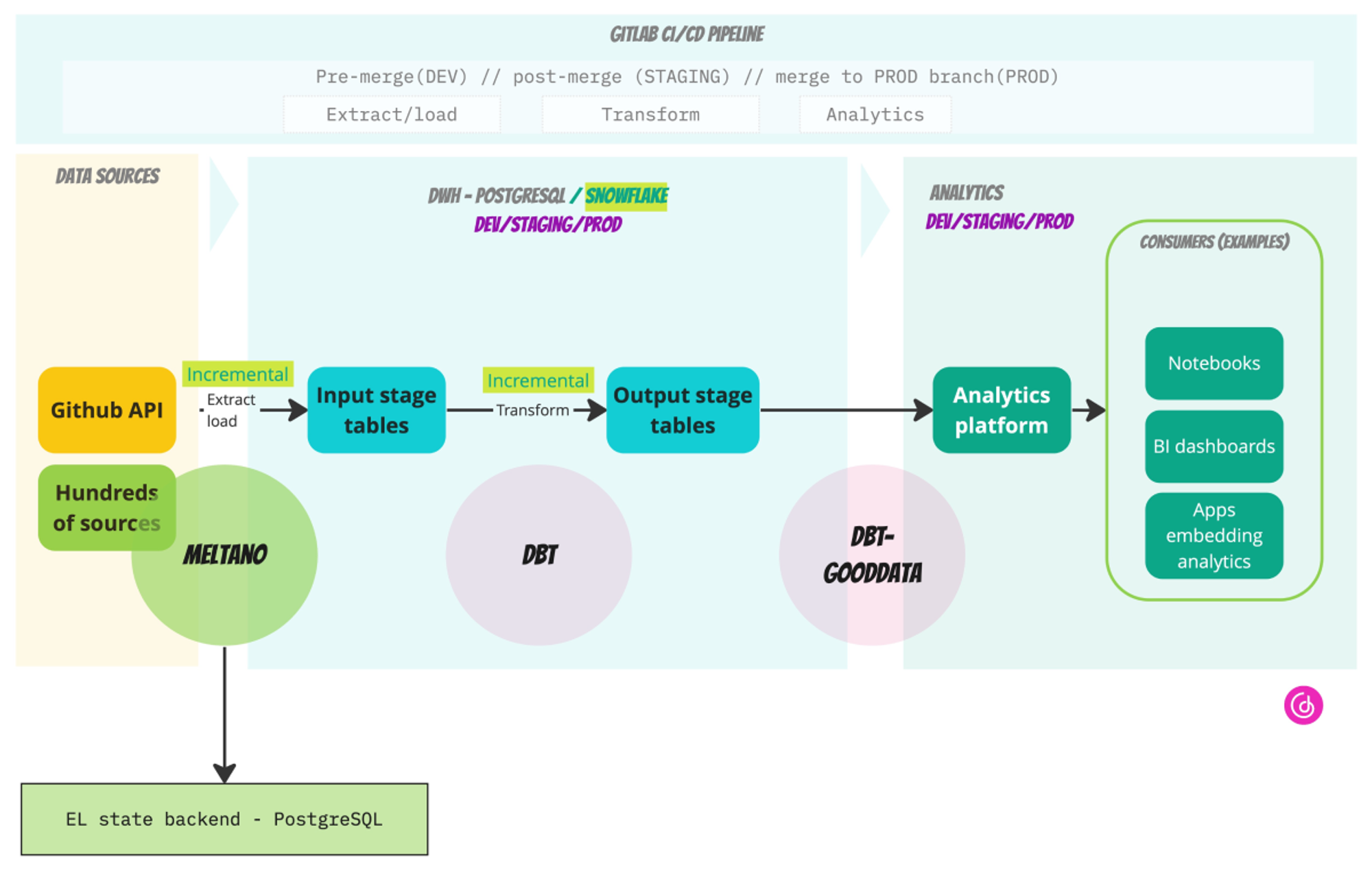

Once upon a time, I implemented a (CI/CD) data pipeline and Patrik Braborec described it in the nice article How to build a modern data pipeline. In the demo, I decided to crawl through source data from a Github REST API and load them into a PostgreSQL database. For this purpose, I implemented a custom Python tool.

I knew that it was just a temporary solution, because:

- Extending/maintaining a custom solution is always costly.

- There are already mature open-source platforms available that do it better.

With new requirements somehow sneaking their way into my backlog, I decided the hour of reckoning hath arrived to replace the custom EL solution.

Picking the Right Tool for the Use Case

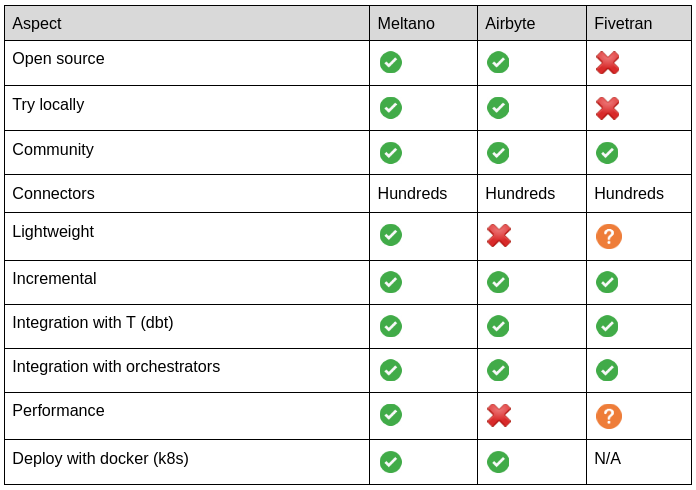

I considered the following aspects while creating the short list of matching EL tools:

I quickly removed Fivetran from the list, because it is a closed source, cannot be evaluated locally, and provides only a 14-day trial.

Finally, I picked Meltano over Airbyte especially because:

- Meltano is significantly more lightweight.

- Simple fast CLI (Meltano) over quite heavy docker-compose consisting of multiple JVM services.

- Meltano performs better.

- Less overhead, a shorter time to finish the same pipeline.

- Meltano is the most open ecosystem, powered by the singer.io standard.

- Recently they even adopted all Airbyte connectors (still experimental, but impressive!).

The New Solution With Meltano

Generally, the introduction of Meltano opens up many new opportunities, such as:

- Extracting data from hundreds of sources.

- Loading the data into various DWH-like targets (and other types of targets).

- Incrementally extracting/loading.

- Implementing new connectors.

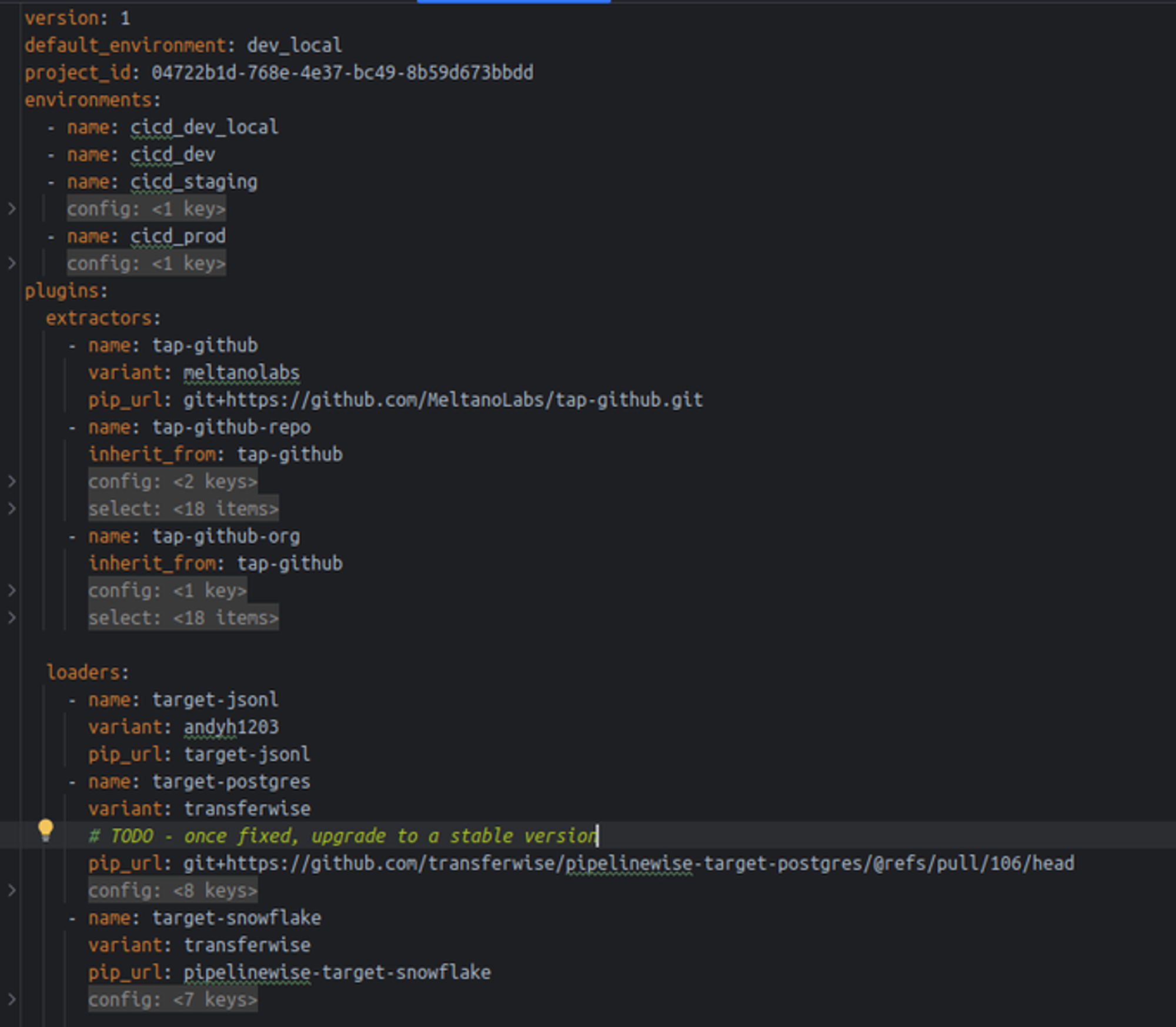

Generally, the only thing I had to implement was the following config file:

The complete source code of the demo can be found here.

Onboarding

First of all, the Meltano (Slack) community rocks! I introduced myself and got feedback immediately. I started posting issues and the response was always quick.

Evaluating Meltano locally (on my laptop) was seamless. I easily incorporated Meltano into my pipeline (with dbt and GoodData), running everything directly from a virtualenv or inside a docker-compose.

I followed the official tutorial, which coincidentally integrates exactly what I implemented in-house — extracts data from GitHub and loads them into PostgreSQL. Unfortunately, the tutorial solution did not work out of the box. After a few discussions in Slack, I fixed the related configuration and had to freeze the version of target-postgres to an older stable version.

My recommendations to the Meltano community would be to:

- Polish the official documentation so it works out of the box.

- Link fully working versions of used extractors/loaders.

- Improve error reporting, for example, when I misconfigured the extractors/loaders, Meltano failed with quite cryptic error messages (including full stack traces).

Experience GoodData in Action

Discover how our platform brings data, analytics, and AI together — through interactive product walkthroughs.

Explore product toursConfiguration

First, let me just briefly complain that I don’t like Meltano’s CLI-first philosophy interactivity. Instead, I would prefer if, when adding extractors/loaders, a relevant part of the meltano.yml config would be bootstrapped with all mandatory fields (and even with their brief documentation).

Coincidentally, a related discussion just started in the following Github issue — CLI -> YAML proposal.

It is possible to share properties between Meltano, dbt, and GoodData configs using environment variables. Environment variables can be also used to override default settings in the config file. The Meltano configuration flexibility is documented here.

Moreover, it is possible to inherit extractor/load configs. This is useful when you want to specify multiple extractors/loaders of the same type (I utilized it for Github org-level and repo-level extracts).

Perfect developer experience!

Openness

I briefly reviewed available connectors:

- Taps aka extractors

- Targets aka loaders

Out of curiosity, I tried a couple of them. In the case of extractors, there is a very handy target-jsonl for debugging. It reminded me of the days when I was a kid and I went to a toy store. So many attractive opportunities!

Moreover, Meltano provides an SDK. It seems to be extremely easy to build a new tap or target!

However, everything comes with its price, and here we pay with stability. Many connectors are unstable. The support in the community is great, and if feasible, you can fix anything quickly. Sometimes you have to roll back to an older version. Sometimes, you have to use an undocumented configuration option.

It opens the question if Meltano is production-ready. I will get back to this in the conclusion.

Easy To Use, Easy To Extend

In particular, I had to migrate from AWS RDS (PostgreSQL— I lost public access to the instance) to Snowflake. A few minutes of work, both in Meltano and dbt!

I also wanted to switch to incremental mode. A few minutes of work in Meltano! Then, I had to rewrite dbt models, which took a little bit longer, but still, it was very easy.

Finally, I had to incorporate Meltano into the CI/CD pipeline. I tried to utilize the official Meltano docker image, but it did not work for me. I didn’t find a way to attach the required taps/targets to the container. So I prepared a custom (simple) Dockefile and easily incorporated it into the GitLab pipeline(extract/load job).

Moreover, I created a custom Dockerfile for dbt and GoodData tools, so all jobs in the GitLab pipeline are based on tailored images. It will allow me to move forward to an even more robust production-like environment.

CI/CD and the Way to Production-Like Deployment

I discussed this topic with Aaron Phethean in the community and then even face-to-face (well… via Zoom).

Aaron kindly shared with me a few resources for inspiration:

The resources prove that there is a straightforward path to a production-like deployment, and it is aligned with industry standards.

I am going to focus on this topic in the future (check the last chapter).

Performance

CLI

What surprised me is the overhead when executing Meltano CLI. It takes up to a few seconds before the real work (extracting/loading) starts. The overhead is acceptable, but a little bit annoying when developing locally. dbt suffers from this issue too, but my personal experience is that Meltano’s overhead issue is slightly worse.

Incremental Extract/Load (and Transform as Well)

I decided to make the whole data pipeline incremental — something I struggled to implement in the former in-house solution.

Meltano provides first-class support for incremental extracts and loads. It is implicitly available in each tap/target, you don’t have to implement anything. It is possible to force full-refresh.

dbt provides the same functionality for transforms, but the complexity of the use case is higher, so it requires some implementation. You have to add corresponding configurations/macros into models and sometimes you have to think deeper about how to apply increments, especially if you pre-calculate some metrics.

Targets

I investigated how the data is loaded into targets:

Snowflake — a standard (well-performing) way is used.

- Upload files to a stage, COPY from the stage to the DB.

PostgreSQL — INSERT values (batch of values) way is used.

- This could be optimized, COPY command is much more efficient.

- Based on discussions in the community, it should be very easy to optimize the loader (or fork it).

Generally, it should be easy to trigger hooks adding e.g. indexes or partitioning on loaded tables (which can help to performance of the following transformations).

Meta Store (State Backend)

It is necessary to persist the state (job runs, state of increments, etc.). Meltano provides multiple options:

Local Sqllite — no option for CI/CD

PostgreSQL — I lost access to our public AWS RDS ;-(

- I searched for a forever-free offering on free-for.dev.

- Unfortunately, CockroachDB does not work with Meltano.

- bit.io PostgreSQL is the only one I found.

AWS S3 state backend – I want to migrate to this option in the future because it is easier and cheaper than to keep running a PostgreSQL instance.

Conclusion

Meltano has great potential because of the singer.io standard and because of its community.

The recent adoption of Airbyte connectors is worth mentioning.

Personally, once I introduced Meltano into my pipeline, I felt like the whole world of data was open, eagerly awaiting me to design new analytics use cases and allowing me to easily get the pertinent data into the stack.

The stability of connectors is unfortunately not good enough yet, and onboarding is quite tough because of that. But if developers overcome issues during the initial onboarding, if they freeze versions of the connectors, and if they make controlled upgrades, it can be stable enough for production usage.

Regarding performance — not all of the connectors are optimized. But again, due to the openness of the ecosystem, it is easy to embed various hooks optimized for particular extractors/loaders.

There is also a cloud offering coming soon — https://meltano.com/cloud/. What can we expect?

Next steps

What can you expect from me in the future? How do I plan to extend the demo and write new articles about it? My ideas are:

Add more data sources

Demonstrate a realistic use case, for example, internal analytics for software companies analyzing data from Github/Gitlab, Jira, Splunk, Prometheus, …

Production-like deployment

- Do not run Meltano/dbt in GitLab workers.

- Deploy PODs, Cronjobs, Configmaps, … into Kubernetes.

- Even implement a simple Kubernetes operator(using Kopf) for this purpose.

Orchestration

- Utilize e.g. Dagster or Airflow to orchestrate the whole pipeline.

- Expose the orchestrator UI to allow developers to investigate the complexity of the pipeline (dependencies, …), jobs history, etc.

End-to-end multi-tenancy

- It has already been discussed in the Meltano Slack community.

- Run Meltano/dbt PODs per customer, each with a custom configuration.

- GoodData is already multi-tenant by design.

Want To Try It for yourself?

If you want to try Meltano integration by yourself, register for the free GoodData trial and try to set up the whole process in the demo repository.

Experience GoodData in Action

Discover how our platform brings data, analytics, and AI together — through interactive product walkthroughs.

Explore product toursGoodData.CN (Community Edition) is used for the local development in this article. Since GoodData.CN version 3.0, you need a license key. If you are interested in GoodData.CN, please contact us.