DuckDB Meets Apache Arrow

Written by Jan Kadlec |

Table of Contents

This article is a part of a series about FlexQuery and the Longbow engine powering it.

You may have heard about DuckDB, Apache Arrow, or both. In this article, I’ll tell you about how we (GoodData) are the first analytics (BI) platform powered by the combination of these technologies. I believe the motivation is evident – performance 🏎️ and developer velocity.

Also, to answer the question about DuckDB I found on Reddit, yes, we are using it.

This whole combination was possible thanks to the FlexQuery and the Longbow engine behind it. If you would like to learn more about them, see the other parts of the series, like the FlexQuery introduction, or the architecture of the Longbow project.

A brief introduction to DuckDB

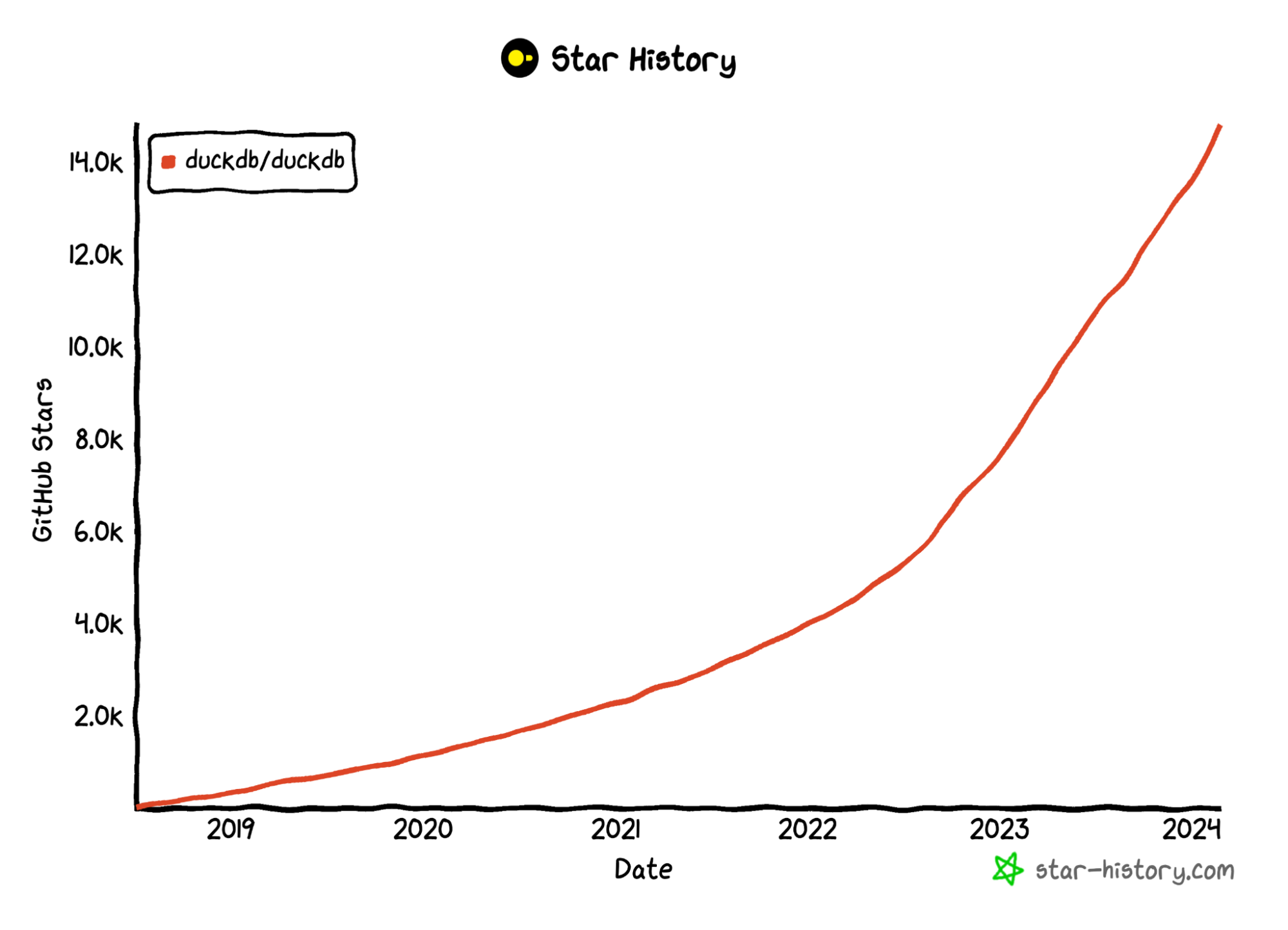

DuckDB is an open-source, in-process analytical database. That has a tremendous community, and a product called MotherDuck is built on top of it. It is fast and feature-rich. In addition, it provides advanced features that help engineers in their day-to-day routine. Let me give you a quick example.

ATTACH 'sqlite:sakila.db' AS sqlite;

ATTACH 'postgres:dbname=postgresscanner' AS postgres;

ATTACH 'mysql:user=root database=mysqlscanner' AS mysql;

CREATE TABLE mysql.film AS FROM sqlite.film;

CREATE TABLE postgres.actor AS FROM sqlite.actor;

SELECT first_name, last_name

FROM mysql.film

JOIN sqlite.film_actor ON (film.film_id = film_actor.film_id)

JOIN postgres.actor ON (actor.actor_id = film_actor.actor_id)

WHERE title = 'ACE GOLDFINGER';One of the latest advanced features is attaching multiple databases such as Postgres or MySQL and querying on top of them. Link to the blog post presenting the feature.

DuckDB also has a flexible extension mechanism that allows for dynamic loading extensions. Extensibility, in general, is a great thing, and it shows that the technology is developer-friendly.

A Brief introduction to Apache Arrow

Apache Arrow is an open-source development platform for in-memory analytics. It provides a standardized, language-independent columnar memory format for flat and hierarchical data, organized for efficient analytic operations on modern hardware. Data movement is based on the efficient FlightRPC protocol. If you'd like to learn more about this protocol, be sure to check out our Arrow Flight RPC 101 article.

The success of Apache Arrow can be proven by its adoption or the ecosystem built around it. Let me give you a quick example. Everyone who works with Python and data knows the Pandas library. The first release of Pandas was in 2008. Since then, Pandas has come a long way, and in 2024, Apache Arrow will become a required dependency of Pandas. Another example is the Polars library, Pandas alternative written in Rust, which utilizes Apache Arrow as the backend from the beginning.

Apache Arrow is undoubtedly an awesome technology. Does it mean everything is bright and shiny with it? Well, not exactly. Even though the technology is excellent, the core of Apache Arrow and the learning curve can be steep for newcomers. This notion is echoed, for example, on Reddit. Not long ago, I was browsing Reddit and stumbled upon a post about PyArrow (Apache Arrow adoption for Python) missing tutorials and resources, which I can confirm, as I’ve experienced this first-hand.

How we utilize these technologies

We utilize Apache Arrow to implement an analytics lake. Initially, we started with a cache (storage) layer between the data warehouse and underlying data for analytics objects (dashboards, visualizations, etc.). Thanks to the modular architecture of our system and Flight RPC protocol, it’s easy to build and deploy data services. They can be in the form of a module or an operation within a module. You can find more detailed information in the Longbow architecture article by Lubomir (lupko) Slivka.

The most convenient data service you might think of is executing SQL directly on caches. We found out that DuckDB is the best fit for this, as it has been compatible with Apache Arrow since 2021. When you read DuckDB documentation, you’ll see that there is a dedicated Arrow extension, although it is optional for integration with Apache Arrow. Importantly, DuckDB supports native integration with Apache Arrow.

import duckdb

import pyarrow as pa

my_arrow_table = pa.Table.from_pydict({'i': [1, 2, 3, 4],

'j': ["one", "two", "three", "four"]})

results = duckdb.sql("SELECT j FROM my_arrow_table").arrow()With DuckDB and Apache Arrow, we can see significant speed and memory efficiency thanks to the zero-copy mechanism enabled by integrating these tools.

Currently, we use a combination of these technologies in the production. The whole magic is hidden in our approach to analytics with CSV files. First, CSVs are uploaded to durable storage like AWS S3, where we perform analysis directly on top of these files. We derive data types, and based on them, we decide whether the column represents an attribute, a fact, or a date. Users can then manually change the data types to their liking. After this, our platform treats CSVs as a standard data source and performs SQL queries using DuckDB. However, this is just the beginning. We plan to utilize the integration of DuckDB and Apache Arrow even more. Stay tuned, as more updates are on their way.

Future

Looking ahead, there are a lot of future steps that can be considered. However, I’d like to highlight only two: Pre-aggregations and data federation.

Pre-aggregations

Imagine having numerous caches, each produced by an SQL query. However, querying a data warehouse is expensive. So, the idea behind pre-aggregations is that SQL queries are analyzed, and the output of the analysis, in the best-case scenario, is a single SQL query - minimizing direct queries to the data warehouse.

Let’s call this the “mother” query, which will be used to query a data warehouse - producing the “mother” cache. We can derive the same results from this cache using DuckDB, for example, as we would by executing SQL queries individually. Pre-aggregations can be further optimized by considering physical and usage stats about caches.

Pretty straightforward, no? Well, not exactly. The hard part is to create the “mother” query. We already utilize Apache Calcite, which helps us assemble and analyze SQL queries, and we could use it in the case of pre-aggregations as well. Or this could be handled by DuckDB as an extension. Or perhaps AI could be involved? We plan to explore all of these approaches.

Data Federation

Data federation relates to pre-aggregations. There are multiple ways to approach data federation. One of them is, for example, using the pre-aggregations mentioned above. Imagine that instead of one “mother” query, you can make multiple of them by pointing to different data sources and then running DuckDB. The other way is to utilize DuckDB's extensions and attach databases.

Want to learn more?

As I mentioned in the introduction, this is part of a series of articles where the GoodData dev team takes you on a journey of how we built our new analytics stack on top of Apache Arrow and what we learned about it in the process.

Other parts of the series are about the Building of the Modern Data Service Layer, details about the flexible storage and caching, Flight RPC 101, and last but not least the Project Longbow!

If you want to see how well it all works in practice, you can try the GoodData free trial! Or if you’d like to try our new experimental features enabled by this new approach (AI, Machine Learning, and much more), feel free to sign up for our Labs Environment.

If you’d like to discuss our analytics stack (or anything else), feel free to join our Slack community!

Written by Jan Kadlec |