Discovering Opportunities for Artificial Business Intelligence

Written by Jan Soubusta

Recently, I wrote an article describing our strategy in the area I called “Artificial Business Intelligence” (ABI). In this article, I would like to share how we discovered opportunities in this area and which prototypes we developed to evaluate them. All prototypes are stored in the open-source GitHub repository. The umbrella Streamlit application is publicly available here.

Discovery of Opportunities

There is nothing worse than spending months delivering a shiny new product feature, only to realize that no one needs it. That is why we follow the principles defined by Continuous Discovery to identify the most promising opportunities before starting any kind of development.

However, we decided to be pragmatic in this case because everything related to AI is changing so rapidly. We focused on reading articles, discussing the topic with thought leaders, and doing competitive analysis to identify the most promising opportunities.

Lessons learned:

- I had to change how I search on Google: anything older than a month is irrelevant.

- Most of our competitors provide just demoware.

- The semantic model and API-first approach provide a competitive advantage. They make it easier to prompt or fine-tune an LLM and to integrate everything seamlessly.

In the end, we identified the following most promising opportunities.

Talk to Data

I would say it is just a follow-up to the various natural language query (NLQ) and natural language generation (NLG) solutions that were popular some time ago.

The opportunity can be broken down into:

- Explain data: “This report shows a revenue trend in time per month. It …..”

- Answer questions about data: “Between which two months was the highest revenue bump?”

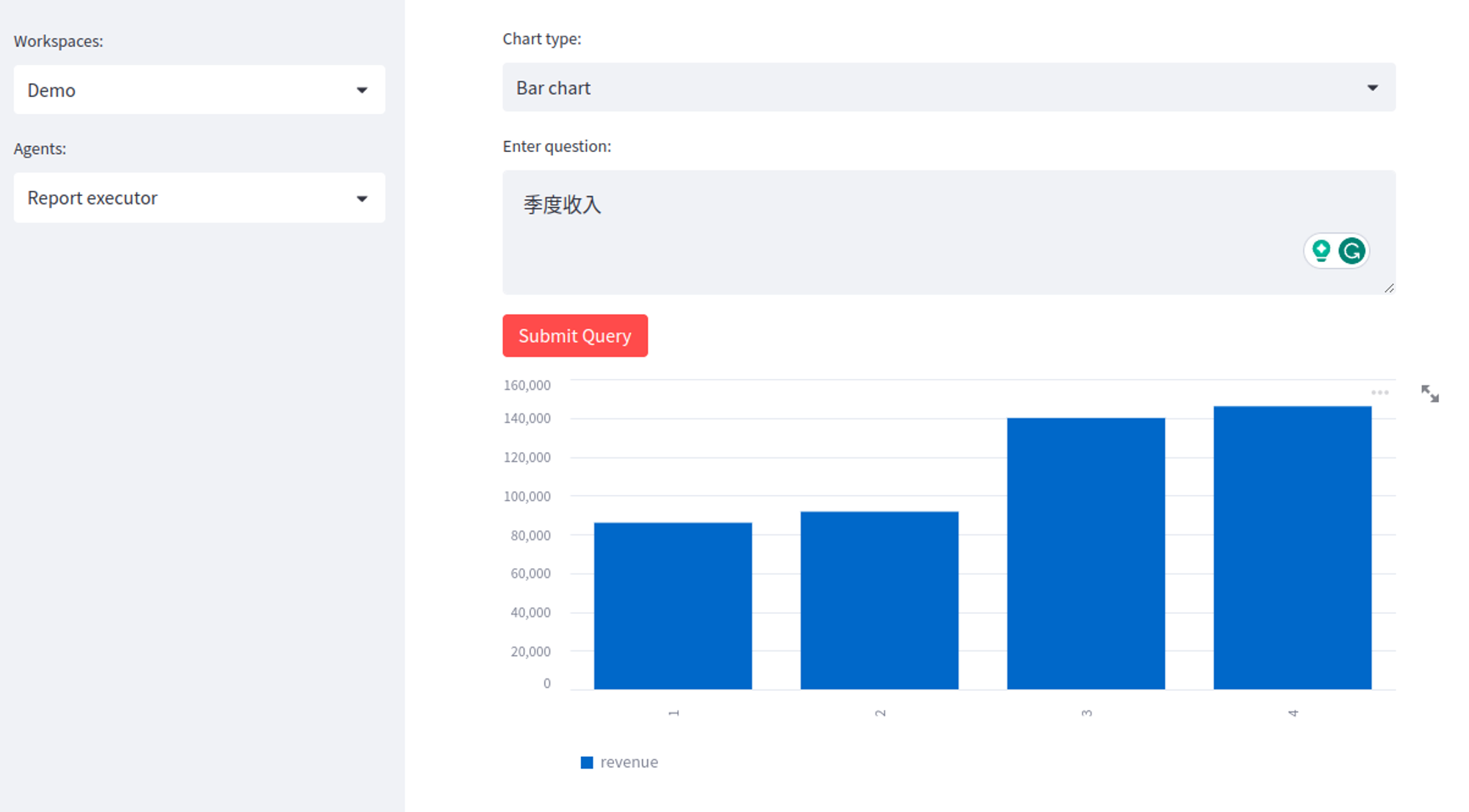

- Full report execution: “Plot a bar chart showing revenue per product type.”

- Generate a complete analytics experience: AI analyzes the data and semantic models and generates data stories with insights, filters, descriptions, explanations, etc.

Why did we prioritize it?

- It is one of the most frequently mentioned opportunities.

- We believe that our differentiators can be leveraged in this case, specifically the semantic model and API-first approach combined with an LLM.

Source Code Generation

Although there is incredible hype around source code generation (GitHub Copilot, StarCoder, etc.), we quickly realized (partly because we attended an AI hackathon) that the available tools are not yet mature enough. Still, the added value is obvious: LLMs can help generate a lot of boilerplate code so that engineers can focus on more interesting problems.

We decided to laser-focus on very specific opportunities:

- Help engineers to onboard to analytics: Generate SQL to transform various DB models to models ready for analytics.

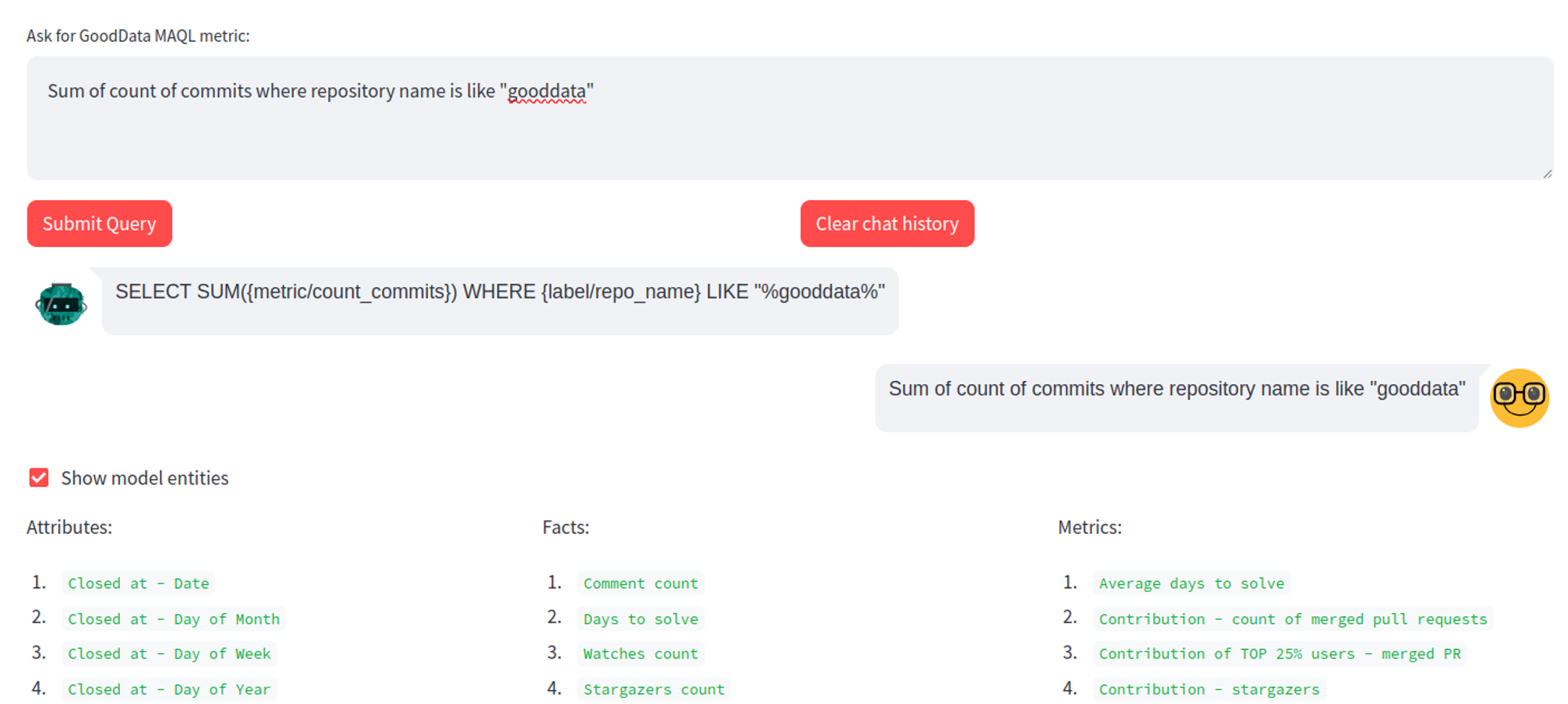

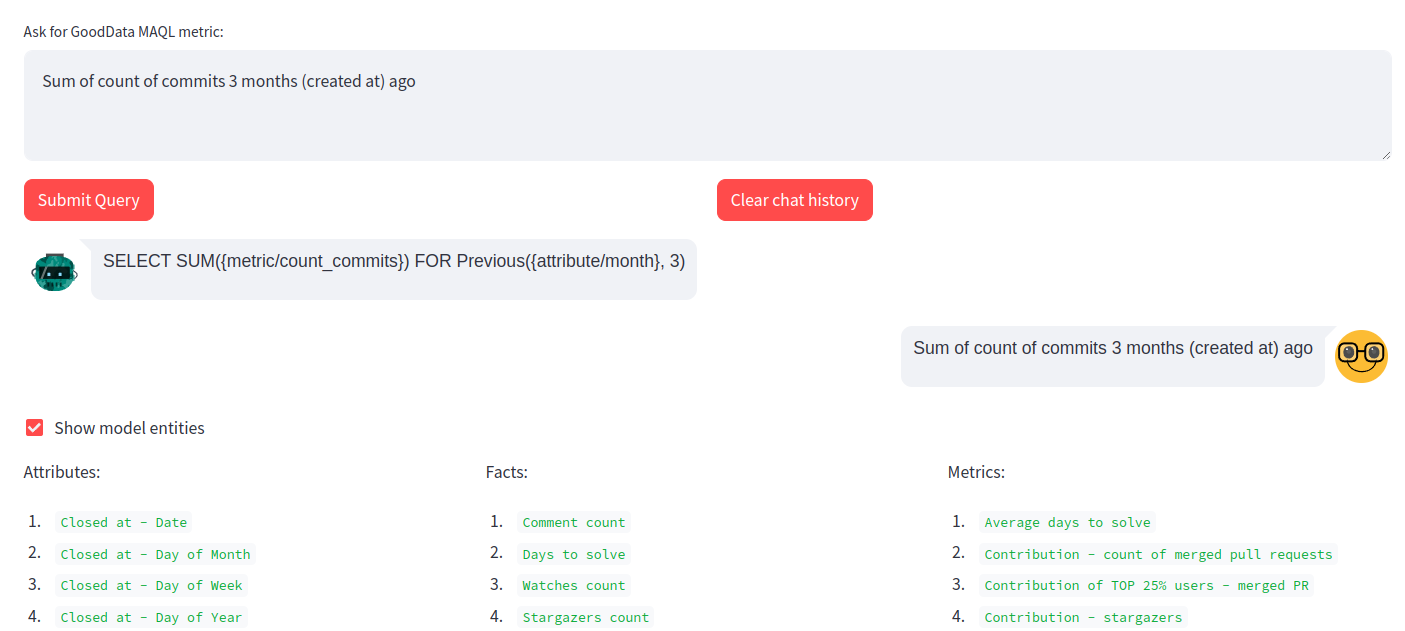

- Help engineers onboard to our specific analytics language called MAQL: Generate MAQL metrics from natural language.

Why did we prioritize it?

- It is one of the use cases most requested by engineers.

- It would significantly help companies onboard to analytics. They often struggle to get started because their data are not in good shape or because they are not willing to learn a new language (besides SQL).

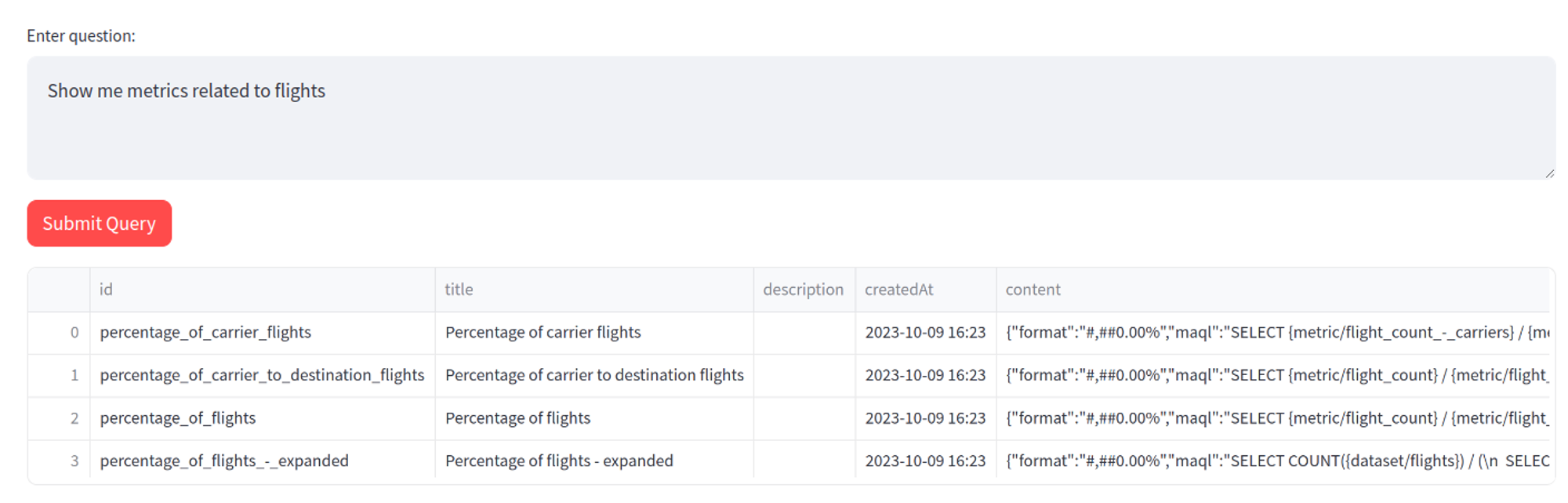

Talk to API

We follow the API-first approach. We believe that providing a good set of APIs (and corresponding SDKs) is the right way to open your platform to developers, so they:

- Can implement any custom feature without waiting for the vendor.

- Can interact with the platform programmatically.

- Can easily integrate with any other platform.

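If you want to provide a good set of APIs, you usually end up creating OpenAPI specifications. The key is to document your APIs well so that developers can onboard quickly. The same specification can also be used to describe the APIs to an LLM, so that users can ask for what they need in natural language.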

Example questions:

- How many metrics do we have whose title contains “order”?

- Register a new Snowflake data source. The account is “GOODDATA”, the warehouse name is “TEST_WH”, and the DB name is “TEST”.

Why did we prioritize it?

- Our platform is API-first, and we believe in this approach.

- We see even business people working with our APIs using tools such as Postman. Giving them a natural language interface as an alternative should help them.

Talk to Documentation

This is quite an obvious opportunity. Your specific documentation is nothing more than yet another set of web pages that can be ingested into an LLM. Then, users can ask questions and get domain-specific answers. Remember, both questions and answers can be in almost any language!

Why did we prioritize it?

- It would significantly help users onboard to our product.

- We store our documentation as code, so ingesting it into LLMs should be quite easy.

Results

Generally, we proved that we can operate an LLM on-premise. This is very important, especially for compliance reasons, because we need to train (fine-tune) LLMs with proprietary or sensitive data. All prototypes are stored in the following open-source GitHub repository. The umbrella Streamlit application is publicly available here.

The most important thing is to learn how to:

- Fine-tune an LLM

  - The structure of the training data matters a lot.

  - Generate a series of Q/A pairs, provide even wrong answers, etc.

  - Label the training data.

  - Fine-tuning de-prioritizes the noise coming from the base LLM models.

- Use prompts

  - The context for each question helps the LLM to be more accurate.

It is better to train once than to send large prompts with every question, both for performance and for cost reasons. Training or prompting an LLM is similar to teaching small kids.
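To make the points about training-data structure more concrete, here is a minimal sketch of how a labelled Q/A set could be serialized as JSONL. The record fields (`question`, `answer`, `label`), the helper names, and the sample questions are illustrative assumptions, not the format used in our prototypes.

```python
import json


def build_training_records(examples):
    """Turn (question, answer, is_correct) tuples into labelled records."""
    records = []
    for question, answer, is_correct in examples:
        records.append({
            "question": question,
            "answer": answer,
            # Hypothetical label field: wrong answers are kept, but marked as such
            "label": "correct" if is_correct else "incorrect",
        })
    return records


def to_jsonl(records):
    """Serialize records as one JSON document per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)


# Made-up examples: the same question with one good and one wrong answer
examples = [
    ("Which metric shows revenue?", "The metric titled Revenue.", True),
    ("Which metric shows revenue?", "The metric titled Order Count.", False),
]
records = build_training_records(examples)
jsonl = to_jsonl(records)
print(jsonl)
```

Keeping the wrong answers in the same file, with an explicit label, is one simple way to express the "provide even wrong answers" idea; the exact schema would depend on the fine-tuning tool you use.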

Once we learned to fine-tune and prompt LLMs efficiently, we started building MVP apps for the prioritized opportunities. To keep the PoC simple, we:

- Embedded all agents into a small Streamlit app.

- Decided to use the OpenAI service through its SDK.

- Used prompting instead of fine-tuning.

Separately, we proved that we can connect to on-premise LLMs and fine-tune LLMs with our custom data (and reduce the size of prompts).

Developer experience

We provide AI agents as an interface in all cases so developers can use them seamlessly. For example:

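    import streamlit as st

    # ReportAgent comes from the prototype repository (import omitted here).
    def execute_report(workspace_id: str):
        agent = ReportAgent(workspace_id)
        query = st.text_area("Enter question:")
        if st.button("Submit Query", type="primary"):
            if query:
                # Ask the LLM first, then run the report it describes
                answer = agent.ask(query)
                df, attributes, metrics = agent.execute_report(workspace_id, answer)
                st.dataframe(df)

Talk to Data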

It is incredible how flexible the underlying LLM is: you can use any language, various synonyms, or even typos, and the results are still very accurate. There are edge cases in which the LLM provides a wrong answer. Unsurprisingly, even the most accurate services on the market warn you about this. IMHO, this experience could supplement existing user interfaces such as drag-and-drop report builders.

I implemented an AI agent, which:

- Collects the semantic model from GoodData using its SDK.

- Generates the corresponding prompt. In this case, we utilized the new OpenAI function calling capability to help generate a GoodData Report Definition for each natural language question.

- Executes the report and collects the result in a Pandas data frame using the GoodData Pandas library.

- Returns the result to the Streamlit app, which visualizes it.

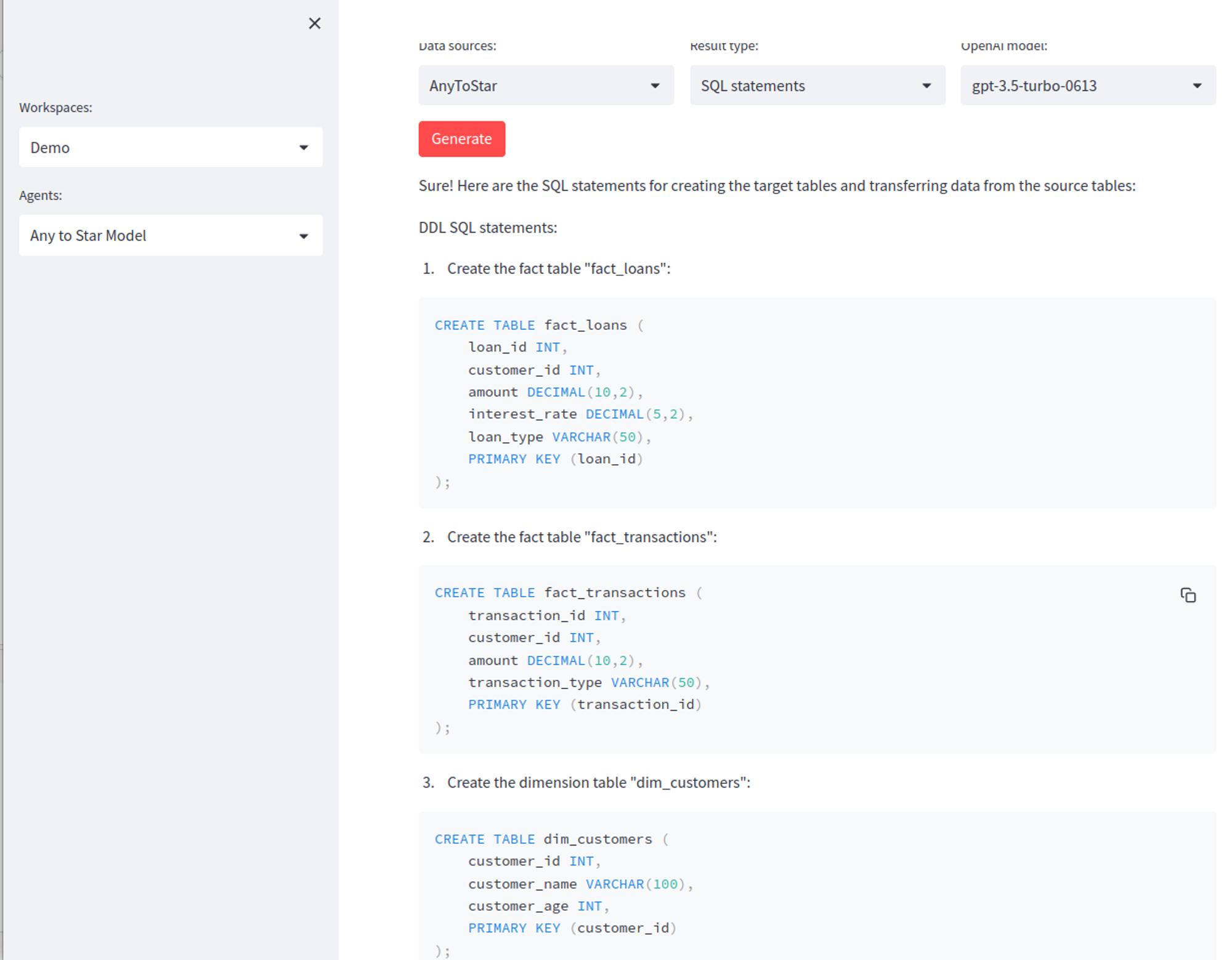
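The prompt-generation step can be sketched roughly as follows. This is not the agent's actual code: the semantic model below is hand-written, the identifiers (`products.category`, `revenue`, and so on) and helper names are hypothetical, and the LLM reply is mocked. It only illustrates the idea of describing a report definition as a function schema whose allowed values come from the semantic model, and then validating what the LLM returns.

```python
import json

# Hypothetical, hand-written semantic model; the real agent would load it
# through the GoodData SDK.
SEMANTIC_MODEL = {
    "attributes": {"products.category": "Product type", "date.month": "Month"},
    "metrics": {"revenue": "Revenue", "order_count": "Number of orders"},
}


def build_report_function(model):
    """Describe a report definition as a JSON Schema for function calling."""
    return {
        "name": "execute_report",
        "description": "Run a report with the given attributes and metrics.",
        "parameters": {
            "type": "object",
            "properties": {
                "attributes": {
                    "type": "array",
                    # Restrict the LLM to identifiers that really exist
                    "items": {"type": "string", "enum": sorted(model["attributes"])},
                },
                "metrics": {
                    "type": "array",
                    "items": {"type": "string", "enum": sorted(model["metrics"])},
                },
            },
            "required": ["metrics"],
        },
    }


def parse_report_call(arguments_json, model):
    """Validate the JSON arguments the LLM returned for the function call."""
    args = json.loads(arguments_json)
    attributes = args.get("attributes", [])
    metrics = args.get("metrics", [])
    unknown = [a for a in attributes if a not in model["attributes"]]
    unknown += [m for m in metrics if m not in model["metrics"]]
    if unknown:
        raise ValueError(f"LLM referenced unknown objects: {unknown}")
    return attributes, metrics


function_spec = build_report_function(SEMANTIC_MODEL)
# Stand-in for the arguments an LLM might return for
# "Plot a bar chart showing revenue per product type."
mock_arguments = '{"attributes": ["products.category"], "metrics": ["revenue"]}'
attributes, metrics = parse_report_call(mock_arguments, SEMANTIC_MODEL)
print(attributes, metrics)
```

Validating the returned identifiers against the semantic model is a cheap guard against hallucinated attributes or metrics before any report is executed.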

Source Code Generation (SQL)

The goal is to generate SQL that transforms an existing data model. The LLM correctly generates the relevant dimension tables and injects the related attributes into them. This is crucial for creating a good analytics experience on top of data models: without shared dimensions, you cannot join fact tables and provide correct analytics results.

I implemented an AI agent, which:

- Scans the selected data source for table metadata.

- Generates a prompt describing the source model (tables, columns) and the requested result type (SQL, dbt models).

- Sends the question, together with the prompt, to the LLM.

- Returns the result as markdown to the Streamlit app.

This is a good example of how an LLM can generate a lot of boilerplate code for you. It could easily be integrated with GitHub to create pull requests. Code reviews by humans would still be required, obviously.
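As a rough illustration of the prompt-generation step, the sketch below turns table metadata into a prompt. The table names, column types, prompt wording, and the `build_sql_prompt` helper are all made up for this example; the real agent scans the data source instead of using a hard-coded dictionary.

```python
def build_sql_prompt(tables, result_type="SQL"):
    """Describe the source model and the requested output in a single prompt."""
    lines = [
        f"Generate {result_type} that transforms the source model below into",
        "a dimensional model with shared dimension tables and fact tables.",
        "",
        "Source tables:",
    ]
    for table, columns in tables.items():
        column_list = ", ".join(f"{name} {dtype}" for name, dtype in columns)
        lines.append(f"- {table}({column_list})")
    return "\n".join(lines)


# Hypothetical metadata, as a scan of the data source might return it
tables = {
    "orders": [("order_id", "INT"), ("customer_name", "VARCHAR"), ("amount", "NUMERIC")],
    "returns": [("return_id", "INT"), ("customer_name", "VARCHAR")],
}
prompt = build_sql_prompt(tables, result_type="dbt models")
print(prompt)
```

In this made-up model, `customer_name` appears in both tables, which is the kind of repeated attribute we would expect the LLM to move into a shared dimension.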

Another interesting idea is to generate a GoodData semantic model from the transformed database model. In this case, having a clean table/column naming is an important competitive advantage. Another option is to utilize the already existing dbt models containing semantic properties.

Source Code Generation (MAQL)

I wanted to prove that it is feasible to teach LLMs the basics of our custom MAQL language using a few use cases. First, the LLM must understand the concept of our Logical Data Model (LDM), which decouples the physical world (relational database models) from the analytics (metrics, dashboards, etc.). Then, I taught the LLM basic MAQL syntax plus one advanced use case.

Talk to API

An example request is "List data sources registered in GoodData." I implemented an AI agent, which:

- Reads the GoodData OpenAPI specification.

- Generates a prompt describing the existing APIs, utilizing the documentation provided in the OpenAPI specification.

- Calls the API that corresponds to the user's question.

- Transforms the result into a Pandas data frame and returns it to the Streamlit app.

- Lets users ask to filter the output.
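The first steps of this agent can be sketched as follows. The specification fragment is hand-written for illustration and is not copied from the real GoodData OpenAPI document; the paths, `operationId` values, and helper names are assumptions, and the LLM's choice of operation is mocked.

```python
HTTP_METHODS = {"get", "post", "put", "patch", "delete"}

# Hypothetical fragment in OpenAPI style (paths -> methods -> operation)
SPEC = {
    "paths": {
        "/api/dataSources": {
            "get": {"operationId": "listDataSources",
                    "summary": "List registered data sources"},
            "post": {"operationId": "createDataSource",
                     "summary": "Register a new data source"},
        },
        "/api/metrics": {
            "get": {"operationId": "listMetrics", "summary": "List metrics"},
        },
    }
}


def list_operations(spec):
    """Map each operationId to its HTTP method, path, and summary."""
    operations = {}
    for path, item in spec["paths"].items():
        for method, operation in item.items():
            if method in HTTP_METHODS:
                operations[operation["operationId"]] = (
                    method.upper(), path, operation.get("summary", ""))
    return operations


def build_api_prompt(operations):
    """Describe the available operations so the LLM can pick one."""
    lines = ["Pick the operationId that answers the user question.",
             "Available operations:"]
    for op_id, (method, path, summary) in sorted(operations.items()):
        lines.append(f"- {op_id}: {method} {path} ({summary})")
    return "\n".join(lines)


operations = list_operations(SPEC)
prompt = build_api_prompt(operations)
# Stand-in for the operationId an LLM would choose for "List data sources"
method, path, _ = operations["listDataSources"]
print(prompt)
print(method, path)
```

Resolving the LLM's answer through a lookup table like `operations` means the agent only ever calls endpoints that exist in the specification.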

There Are Also the Bad and the Ugly

Nothing is clean and shiny. That is no surprise: the area is evolving very fast, and we are still at a very early stage. My most important concerns:

- Incorrect answers from LLM, so-called hallucinations.

- Developer tooling can often break (breaking changes, dependencies, etc.).

- Performance & cost.

However, solutions for these concerns either already exist or are expected soon. Also, do not forget that I am not an expert in AI. I spent just a few days on the discovery and the implementation of the proofs of concept. I know that with more time, it can be done much better.

The Best Way to Artificial Business Intelligence

After our discoveries, we learned that one of the best ways to achieve ABI (Artificial Business Intelligence) is to use LLMs to help our prospects and customers onboard to our product very quickly, through capabilities such as Talk to Documentation and Source Code Generation. Prospects will not need to spend hours studying materials; instead, they can just ask. LLMs also increase productivity, and we already proved this in our closed beta, where we implemented most of the things described in this article. If you are interested in more details, check the article How to Build Data Analytics Using LLMs in Under 5 Minutes.

Lastly, we are still in the early stages of this dramatic shift. We would love to hear your opinions about the future. Let’s discuss it on community Slack! If you want to try GoodData, you can check out our free trial.
