Data Warehouse, Data Lake, and Analytics Lake: A Detailed Comparison

Summary

This article compares three main approaches to managing data: the data warehouse, the data lake, and the analytics lake. A data warehouse stores structured data for reporting and business intelligence. A data lake holds raw and unstructured data for flexible storage and advanced analytics. An analytics lake combines both, offering a unified environment where raw data, analytics tools, and models can be used together to create and share insights.

Data Warehouse vs Data Lake vs Analytics Lake: Key Differences

Every application generates real-time data, which is stored in its database. This data, whether current or historical, helps businesses evaluate performance and make informed decisions. To manage and analyze it, companies use Data Warehouses, Data Lakes, and Analytics Lakes, either separately or together for effective data integration.

In most organizations, Data Warehouses are used primarily for long-term storage and consolidation, supporting reporting, analytics, and other needs that depend on consolidated data. In contrast, Data Lakes hold raw, unstructured data suitable for machine learning, although analyzing that data can be costly. Analytics Lakes are designed to optimize storage and compute resources for analytics and ML, but they are not as well suited to long-term data storage as Data Warehouses.

The key differences between a Data Warehouse, Data Lake, and Analytics Lake are:

| Parameters | Data Warehouse | Data Lake | Analytics Lake |
|---|---|---|---|
| Purpose | Optimized for analytics and reporting | Designed to process raw data to support ML | Analytics and machine learning |
| Format and optimizations | Structured data optimized for fast query performance | Optimized for large-scale raw data | Primarily optimized for analytical applications and data product development |
| Users | Data or business analysts, consumers | Data scientists, data engineers | Data scientists/engineers, analytics engineers |
| BI support | Full support | Minimal | Integrated BI and visualization environment |
| Optimized for data science and ML use cases | Limited support | Support via big data tools | Specifically tailored for analytics and ML |
| Security and governance | Strong, built-in security with role-based access controls, auditing, and compliance features | Less secure due to size and lack of selectivity, often requires extra tools for governance and security | Robust governance through metadata integration |
| Costs | Predictable costs with a fixed schema, but high storage costs for direct query access in a cloud data warehouse | Low storage costs due to the absence of a fixed schema, but unpredictable analytics costs | Optimized computations and APIs to reduce overall costs |

Now let's explore each of the technologies in more depth.

What Is a Data Warehouse?

A Data Warehouse is a type of data management system that centralizes and consolidates large amounts of data from multiple sources. It acts as a unified repository, where a heterogeneous collection of data sources is organized under a single schema. This supports data analysis, data mining, artificial intelligence (AI), and machine learning, which help with data-driven decision-making.

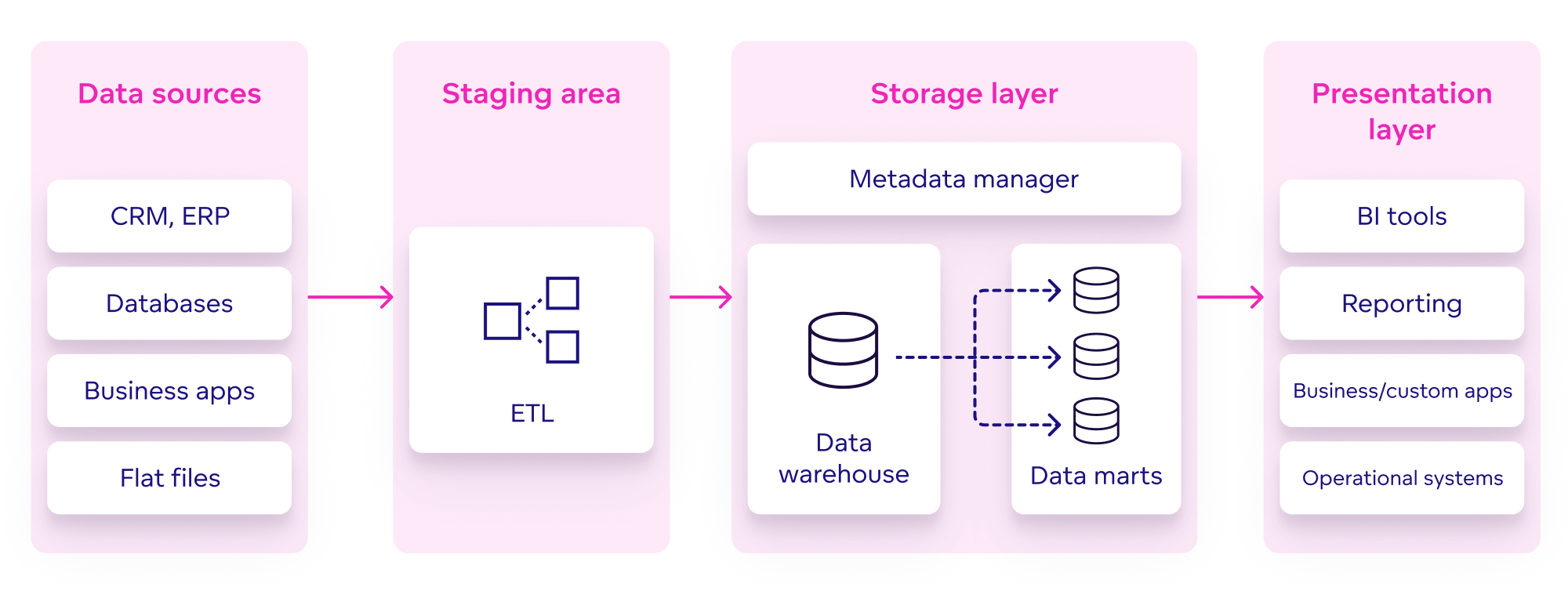

Data Warehouse Architecture

A typical Data Warehouse architecture includes several key components:

- Structured data comes from external data sources, each with its own structure. It includes data from various applications, each maintaining its own relational database for current data. However, companies need a separate solution to store historical data.

- In the staging layer, data is extracted, transformed, and loaded (ETL) after identifying all data sources. This process combines data sources into a consistent data model schema through cleansing operations like selecting columns, translating values, joining, sorting, and finally loading into the Data Warehouse.

- The storage layer includes metadata on the content of the Data Warehouse, such as location, structure, and date added. It consists of a centralized data warehouse for the enterprise and optional data marts, which focus on specific subjects and streamline querying and reporting.

- The presentation layer defines how data is used and presented, including BI tools and applications for analyzing and reporting data, generating reports for various business needs, applications for business operations, and data mining to extract patterns and knowledge from large data sets.
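The staging-layer steps listed above (selecting columns, translating values, joining, sorting, and loading) can be illustrated with a minimal sketch. The source rows, the `orders_clean` table, and the country lookup are all hypothetical, and an in-memory SQLite database stands in for the warehouse; a production pipeline would normally use a dedicated ETL tool.

```python
import sqlite3

# Hypothetical rows as they might arrive from two source applications.
crm_customers = [
    {"id": 1, "name": "Acme", "country": "DE", "internal_note": "vip"},
    {"id": 2, "name": "Globex", "country": "US", "internal_note": ""},
]
shop_orders = [
    {"order_id": 12, "customer_id": 1, "amount_cents": 4000},
    {"order_id": 10, "customer_id": 2, "amount_cents": 2500},
    {"order_id": 11, "customer_id": 1, "amount_cents": 990},
]

# Assumed lookup used to translate country codes into readable values.
COUNTRY_NAMES = {"DE": "Germany", "US": "United States"}


def transform(customers, orders):
    """Select columns, translate values, join the two sources, and sort."""
    by_id = {
        c["id"]: (c["name"], COUNTRY_NAMES[c["country"]])  # internal_note is dropped
        for c in customers
    }
    rows = [
        (o["order_id"], *by_id[o["customer_id"]], o["amount_cents"])
        for o in orders
    ]
    return sorted(rows, key=lambda row: row[0])


def load(conn, rows):
    """Load the cleaned rows into a single, consistent warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders_clean ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, "
        "country TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO orders_clean VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for the real warehouse
load(conn, transform(crm_customers, shop_orders))
totals = conn.execute(
    "SELECT country, SUM(amount_cents) FROM orders_clean "
    "GROUP BY country ORDER BY country"
).fetchall()
print(totals)
```

Once the data is loaded under one schema, a reporting query such as the final `SELECT` no longer needs to know which application each value came from.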

Data Warehouse architecture

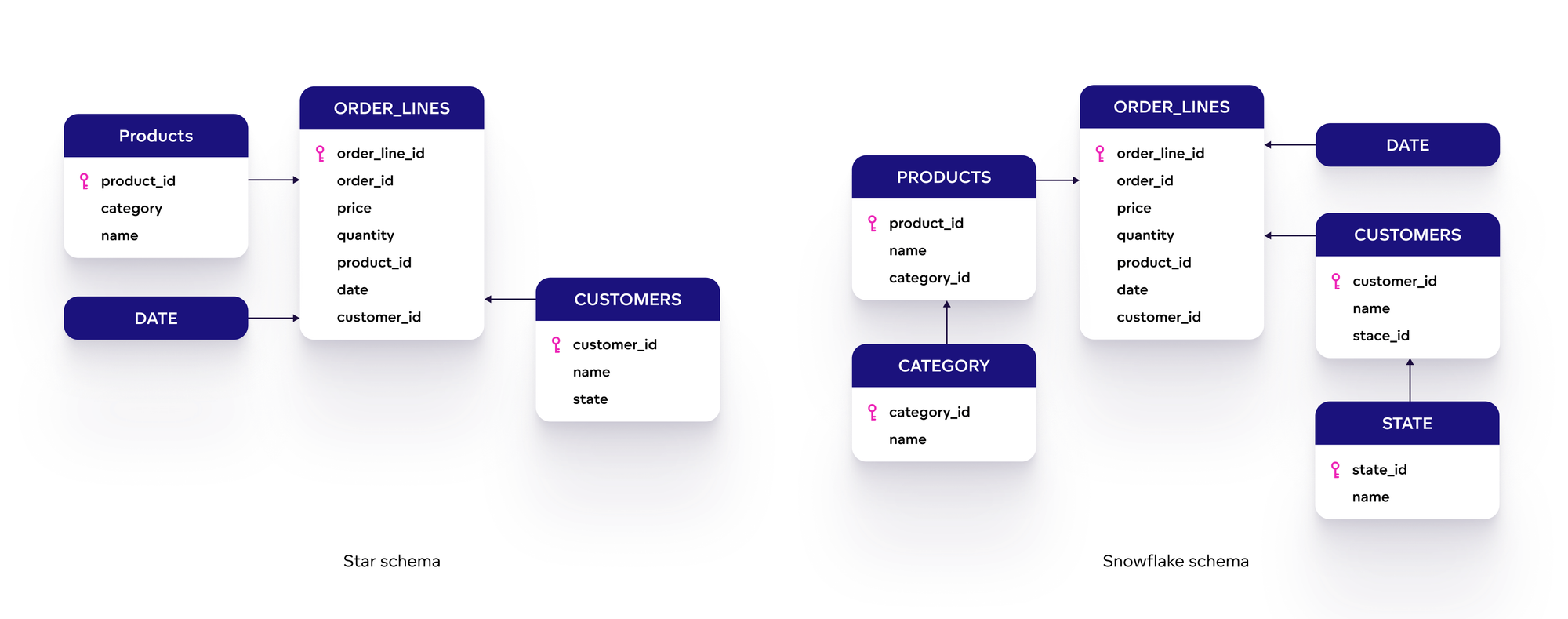

In a Data Warehouse solution, data is structured into a dimensional or multidimensional data model with tables for facts and dimensions. Common schemas include the star and snowflake schemas; a galaxy schema (also called a fact constellation) links several fact tables through shared dimension tables. To learn more, read our article on Relational and Dimensional Data Models.

Examples of a star schema and snowflake schema
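To make the fact and dimension tables more concrete, here is a small star schema sketched in SQLite. The table names (`fact_sales`, `dim_product`, `dim_date`) and the sample rows are invented for illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, day TEXT, year INTEGER);

-- The fact table holds measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    quantity INTEGER,
    revenue_cents INTEGER
);

INSERT INTO dim_product VALUES
    (1, 'Laptop', 'Hardware'), (2, 'Mouse', 'Hardware'), (3, 'Antivirus', 'Software');
INSERT INTO dim_date VALUES
    (20240101, '2024-01-01', 2024), (20250101, '2025-01-01', 2025);
INSERT INTO fact_sales VALUES
    (1, 1, 20240101, 2, 200000),
    (2, 2, 20240101, 5, 10000),
    (3, 3, 20250101, 10, 30000),
    (4, 1, 20250101, 1, 100000);
""")

# A typical analytical query: join the fact table to its dimensions,
# then aggregate a measure by dimension attributes.
revenue_by_category_year = conn.execute("""
    SELECT p.category, d.year, SUM(f.revenue_cents)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    JOIN dim_date AS d ON d.date_id = f.date_id
    GROUP BY p.category, d.year
    ORDER BY p.category, d.year
""").fetchall()
print(revenue_by_category_year)
```

In a snowflake schema, `dim_product` would be normalized further, for example by moving `category` into its own table that `dim_product` references.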

Data Warehouse Examples

Data Warehouses are ideal for storing large amounts of historical data and performing in-depth analysis to generate business intelligence. Their highly structured nature makes data analysis straightforward for business analysts and data scientists.

Data Warehouses can be either on-premises or cloud-based. Examples include Snowflake, Amazon Redshift, ClickHouse, MotherDuck, and many more.

What Is a Data Lake?

In contrast to a Data Warehouse, a Data Lake stores all of an organization's data — both structured and unstructured. It acts as comprehensive storage to handle large volumes of data in raw format. Organizations use Data Lakes to build data pipelines, making the data available for any analytics tool to create insights and support better decision-making.

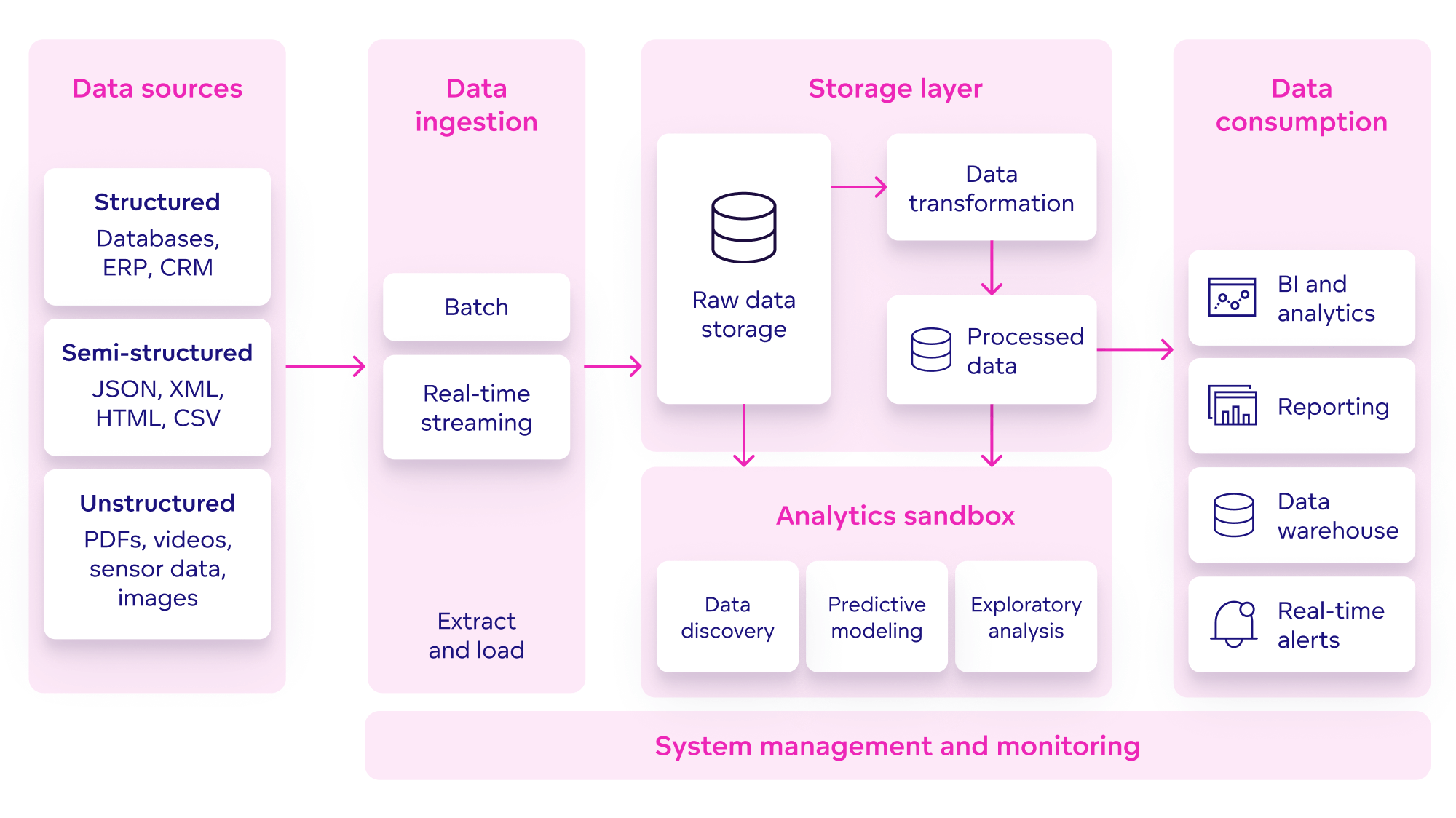

Data Lake Architecture

Data Lake architecture is a framework for a central repository that holds raw data without imposing a structure on it up front (unlike a Data Warehouse).

Storage and compute resources can be on-premises, in the cloud, or hybrid. A unified Data Lake architecture includes:

- Data sources for a Data Lake include structured data from ERP systems, CRMs, and relational databases; semi-structured data like JSON, XML, CSV, and HTML; and unstructured data such as sensor data, PDFs, and videos.

- Data ingestion is the process of importing data into the Data Lake, either in batch mode or real-time. Batch ingestion transfers large data chunks at intervals, while real-time ingestion continuously brings in data as it's generated.

- The data storage and processing layer is where data is ingested and stored for the next transformation process. This layer is divided into ‘zones’: the raw zone contains original data, the transformed data zone includes data with basic transformations, and the processed data zone houses refined data ready for analysis.

- Analytical sandboxes are isolated environments within the Data Lake for discovery, machine learning, and analysis. They keep experiments separate from main data layers to maintain data integrity and allow free experimentation.

- Data consumption: Processed data is used for BI tools (like GoodData), reporting, moving to Data Warehouses, and real-time alerting.
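The flow from batch ingestion through the raw, transformed, and processed zones can be sketched with ordinary files. This is only an illustration: a temporary local directory stands in for object storage, and the function names, folder names, and sensor records are assumptions made for the example.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical sensor readings with inconsistent key casing and one unusable record.
sample_records = [
    {"Sensor": "a", "Temp": 20},
    {"sensor": "a", "temp": 22},
    {"Sensor": None, "Temp": 5},
    {"sensor": "b", "temp": 18},
]


def ingest_batch(lake: Path, batch_name: str, records: list) -> Path:
    """Batch ingestion: store the records unchanged in the raw zone (JSON Lines)."""
    raw_file = lake / "raw" / f"{batch_name}.jsonl"
    raw_file.parent.mkdir(parents=True, exist_ok=True)
    raw_file.write_text("\n".join(json.dumps(r) for r in records), encoding="utf-8")
    return raw_file


def to_transformed(lake: Path, raw_file: Path) -> Path:
    """Basic transformations: lower-case the keys, drop records without a sensor."""
    out = lake / "transformed" / raw_file.name
    out.parent.mkdir(parents=True, exist_ok=True)
    cleaned = []
    for line in raw_file.read_text(encoding="utf-8").splitlines():
        record = {key.lower(): value for key, value in json.loads(line).items()}
        if record.get("sensor"):
            cleaned.append(record)
    out.write_text("\n".join(json.dumps(r) for r in cleaned), encoding="utf-8")
    return out


def to_processed(lake: Path, transformed_file: Path) -> dict:
    """Refined, analysis-ready data: average temperature per sensor."""
    readings = {}
    for line in transformed_file.read_text(encoding="utf-8").splitlines():
        record = json.loads(line)
        readings.setdefault(record["sensor"], []).append(record["temp"])
    summary = {sensor: sum(temps) / len(temps) for sensor, temps in readings.items()}
    out = lake / "processed" / "avg_temp_by_sensor.json"
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text(json.dumps(summary), encoding="utf-8")
    return summary


with tempfile.TemporaryDirectory() as tmp:
    lake = Path(tmp)
    raw = ingest_batch(lake, "2024-01-01", sample_records)
    summary = to_processed(lake, to_transformed(lake, raw))
    # The raw zone still contains every original record, including the unusable one.
    raw_line_count = len(raw.read_text(encoding="utf-8").splitlines())

print(summary, raw_line_count)
```

Keeping the raw zone untouched means a later transformation can be rerun or redesigned without ingesting the source data again.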

Data Lake architecture

Data Lake Examples

Data Lakes are not designed to meet an application’s transaction and concurrency needs. Instead, they provide flexible and scalable storage and compute capabilities, either independently or together. Examples include Amazon S3, Azure Data Lake Storage Gen2, and Apache Hadoop for storage, and technologies like MongoDB Atlas Data Lake or Amazon Athena for organizing and querying data.

What Is an Analytics Lake?

An Analytics Lake is a data platform that consolidates raw and transformed data, data science models, metadata, and front-end tools. By bringing all analytics assets into one location, it makes data, insights, and tools accessible and usable for both human and automated data consumers.

Analytics Lake Architecture

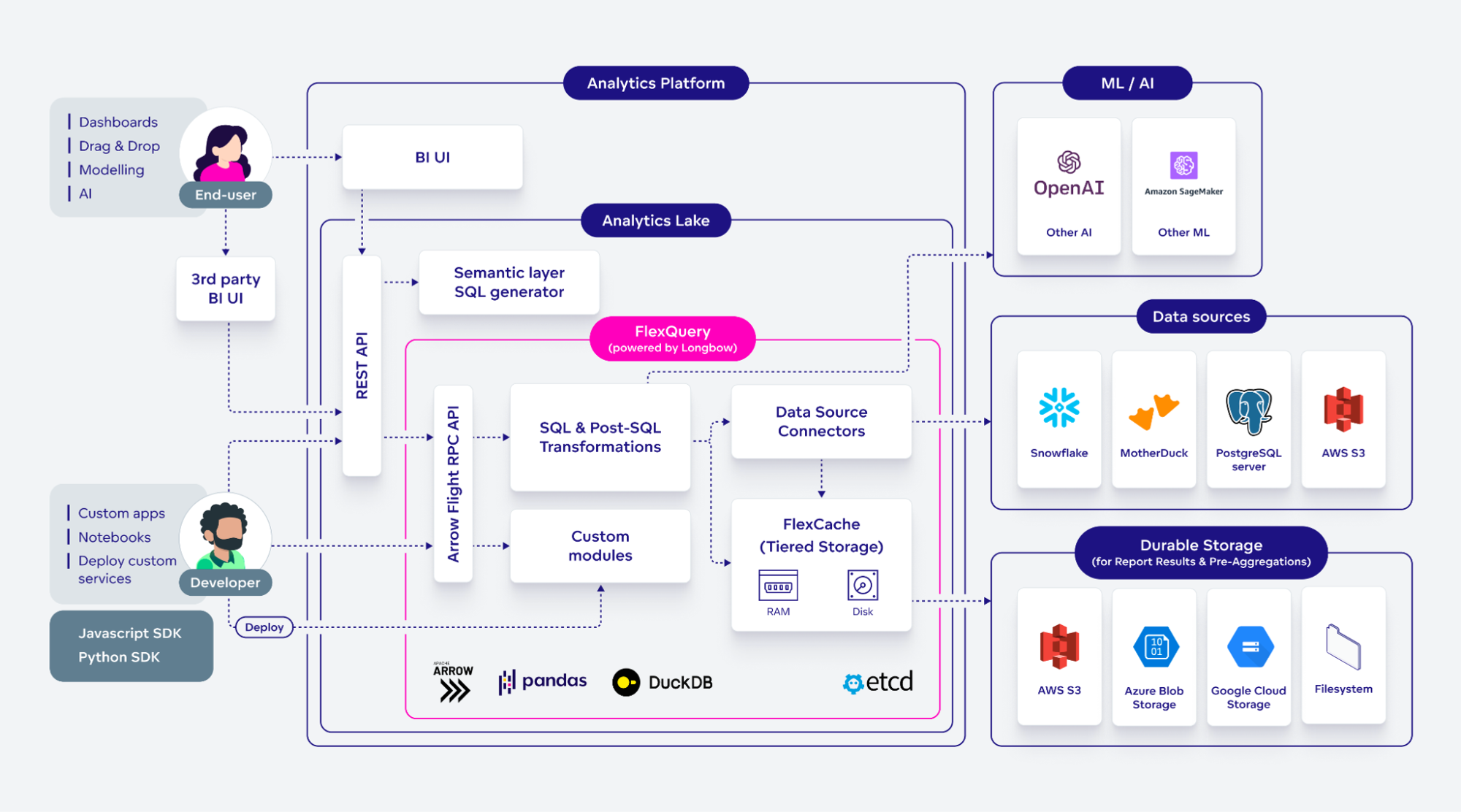

An Analytics Lake provides a composable data service architecture that combines open-source technologies, a semantic layer, and analytics. It serves both business consumers and developers by offering a comprehensive solution for managing and utilizing the company's analytics assets.

The GoodData Analytics Lake consists of:

- FlexQuery uses the Apache Arrow framework for consistent data service development and management. Robust APIs support standard practices like CI/CD and code-based automation, integrating with many Python libraries and tools. This ensures seamless incorporation into existing development pipelines.

- Flight RPC API simplifies data access and transfer, enabling smooth interactions between data producers and consumers. It supports querying various data sources, including Data Warehouses and Data Lakes, and allows for hot swapping to reduce integration challenges. It also uses dataframe operations for post-processing and caching services to store pre-aggregated results for faster performance and reduced Data Warehouse costs.

- Within the Analytics Lake, metadata modeling and a headless semantic layer provide a unified view of data, enhancing data discoverability and usability while maintaining integrity. The integration between FlexQuery and this layer enables data reuse across dashboards, Python and React applications, and even competitor products.

- The analytics platform built on the Analytics Lake offers a no-code/low-code analytics environment. It provides data distribution and data product solutions that go far beyond typical dashboards and reports. These include AI agents, direct query options, data feeds, API integrations, and more. This simplifies data interpretation for decision-makers and stakeholders, supporting both standardized reporting and innovative exploration.
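Two ideas from the list above, a semantic layer that maps business terms to queries and a cache of pre-aggregated results, can be illustrated generically. The sketch below is not GoodData's API and does not use FlexQuery or Arrow Flight; `CachedMetricService`, the `METRICS` registry, and the `orders` table are hypothetical names, and SQLite stands in for the underlying Data Warehouse.

```python
import sqlite3

# Hypothetical semantic definitions: business names mapped to SQL expressions.
METRICS = {
    "revenue": "SUM(amount_cents)",
    "order_count": "COUNT(*)",
}
DIMENSIONS = {"region"}  # columns that consumers may group by


class CachedMetricService:
    """Answers metric requests by name and caches each aggregated result."""

    def __init__(self, conn):
        self.conn = conn
        self.cache = {}
        self.backend_queries = 0  # how often the warehouse was actually queried

    def query(self, metric: str, dimension: str):
        if metric not in METRICS or dimension not in DIMENSIONS:
            raise KeyError(f"unknown metric or dimension: {metric}, {dimension}")
        key = (metric, dimension)
        if key not in self.cache:
            # Only whitelisted names reach the SQL string.
            sql = (
                f"SELECT {dimension}, {METRICS[metric]} FROM orders "
                f"GROUP BY {dimension} ORDER BY {dimension}"
            )
            self.cache[key] = self.conn.execute(sql).fetchall()
            self.backend_queries += 1
        return self.cache[key]


conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
conn.execute("CREATE TABLE orders (region TEXT, amount_cents INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EU", 1000), ("EU", 2500), ("US", 4000)],
)

service = CachedMetricService(conn)
first = service.query("revenue", "region")
second = service.query("revenue", "region")  # served from the cache
print(first, service.backend_queries)
```

Because every consumer asks for `revenue` by name, a dashboard and a Python notebook get the same definition, and repeated requests do not trigger new warehouse queries. A real cache would also need an invalidation strategy for when the underlying data changes.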

GoodData Analytics Lake architecture

Analytics Lake Examples

The Analytics Lake is optimized for various workflows and processes. It serves as a repository for data, transformations, analytics models, visualizations, and descriptive metadata. This consolidation enables AI services to discover and retrieve any component as needed to generate insights. GoodData has introduced this new architecture concept, and provides tools for data visualization, embedded analytics, and comprehensive BI solutions that cater to a wide range of industries, including software, e-commerce, financial services, and healthcare.

To learn more about the Analytics Lake, check out these articles: Building a Modern Data Service Layer with Apache Arrow, Project Longbow, Arrow Flight RPC 101, or read our whitepaper about The Analytics Lake.

Data Warehouse vs Data Lake vs Analytics Lake: Benefits and Limitations

This table highlights the benefits and drawbacks of each technology to help you choose the most suitable data management solution.

| | Pros | Cons |
|---|---|---|
| Data Warehouse | Centralized data management: Consolidates data from multiple sources into a single source of truth. Improved data quality: Data is cleaned, validated, and consistently formatted for accurate analysis. Data security and compliance: Implements robust security and compliance protocols to protect sensitive data. | Limited flexibility and scalability: Requires pre-processing, unsuitable for large volumes of unstructured data. High operational costs: Expensive to maintain and scale due to storage and compute costs. Performance degradation: Massive SQL queries can slow down response times. Limited advanced analytics: Primarily designed for BI and reporting. |
| Data Lake | Flexibility and scalability: Handles vast amounts of diverse data, making it highly scalable. Advanced analytics support: Suitable for advanced analytics, ML, and real-time processing. Improved ingestion and accessibility: Quick data ingestion and immediate access for analysis without extensive processing. | Governance and data quality: Hard to maintain due to vast, raw data. Unpredictable costs: Complex data management makes costs hard to predict. Missing semantic layer: Limits data discoverability and usability. Reusable data: Minimal BI support hinders data reuse for reporting. |
| Analytics Lake | Enhanced flexibility and scalability: Uses schema-on-read for raw data storage, simplifying scaling and handling diverse data types. Cost efficiency: Lowers operational costs and TCO by combining storage and analytics, supporting complex workflows. Optimized performance: High-performance caching and optimized query processing for efficient, real-time data handling. Support for advanced analytics and ML: Integrates them directly into its architectures, provides robust APIs and supports standard engineering practices. Improved data governance and quality: Integrated metadata modeling and headless semantic layer ensure high data standards. | Emerging concept and architecture: Still developing and not yet fully mature. Requires skilled personnel: Trained personnel are necessary to fully leverage its capabilities. Proprietary Analytics Lakes: Can have tightly coupled data and analytics tools, limiting flexibility. |

Data Warehouse vs Data Lake vs Analytics Lake: Which Is the Best Fit for You?

Most large organizations use both Data Lakes and Data Warehouses, with data first ingested into a lake and then moved to warehouses. The new Analytics Lake offers additional advantages, so organizations should consider using all three, as each serves a distinct purpose.

Analytics Lakes combine data, metadata, models, metrics, and reports into one platform, simplifying analytics application creation and governance. Thanks to the semantic layer, each user can access the Analytics Lake through their preferred interface: Python for data scientists, React for application developers, SQL for data engineers, and no-code/low-code interfaces for business users.

Data Warehouses are perfect for businesses with structured data and traditional BI needs. They provide strong security, compliance, and predictable costs.

Data Lakes are ideal for companies with large amounts of raw data, allowing quick ingestion and immediate access for advanced analytics and machine learning.

Next steps with GoodData

Already have a Data Warehouse or Data Lake set up but need an AI-driven analytics and BI tool? Sign up for a free trial to explore GoodData's capabilities. Interested in how an Analytics Lake would fit into your current solution? Request a demo and talk to our team.

FAQs About Data Warehouses, Data Lakes, and Analytics Lakes

The decision should be based on business goals rather than just technology. If reporting and compliance are the main priorities, a warehouse is usually best. If innovation and experimentation with raw data are needed, a lake is more suitable. For organizations that want both, an analytics lake may provide a balanced approach.

A warehouse typically requires SQL and business intelligence expertise, while a lake demands skills in data engineering, metadata management, and often programming for machine learning. An analytics lake requires a mix of both skill sets.

A frequent mistake is storing everything without a clear plan for access or quality. Another issue is failing to catalog datasets, which leads to wasted time searching for information and risks creating inconsistent results.

Cloud-native services are making all three more accessible. Automation, AI-assisted data management, and tighter integration with business applications are pushing platforms toward unified analytics environments that resemble the analytics lake model.

A warehouse enforces rules before data is loaded, so the information is already structured and clean. A lake accepts data in any form, which shifts the responsibility for governance and quality checks to later in the process.
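The contrast between checking data before it is loaded and checking it later can be sketched as schema-on-write versus schema-on-read. The `Warehouse` and `Lake` classes and the two-field schema below are hypothetical simplifications, not how any particular product implements validation.

```python
import json

# Hypothetical schema: field name mapped to the expected Python type.
SCHEMA = {"user_id": int, "email": str}


def conforms(record) -> bool:
    """True if the record is a dict with exactly the expected fields and types."""
    return (
        isinstance(record, dict)
        and set(record) == set(SCHEMA)
        and all(isinstance(record[name], kind) for name, kind in SCHEMA.items())
    )


class Warehouse:
    """Schema-on-write: records that break the rules are rejected at load time."""

    def __init__(self):
        self.rows = []

    def load(self, record):
        if not conforms(record):
            raise ValueError(f"rejected at load: {record!r}")
        self.rows.append(record)


class Lake:
    """Schema-on-read: anything is stored; checks run when the data is read."""

    def __init__(self):
        self.blobs = []

    def load(self, payload: str):
        self.blobs.append(payload)

    def read(self):
        valid, invalid = [], []
        for blob in self.blobs:
            try:
                record = json.loads(blob)
            except json.JSONDecodeError:
                invalid.append(blob)
                continue
            if conforms(record):
                valid.append(record)
            else:
                invalid.append(blob)
        return valid, invalid


good = {"user_id": 1, "email": "a@example.com"}
incoming = [json.dumps(good), json.dumps({"user_id": "2"}), "not json"]

warehouse, lake = Warehouse(), Lake()
rejected = []
for payload in incoming:
    lake.load(payload)  # the lake accepts every payload
    try:
        warehouse.load(json.loads(payload))
    except ValueError:  # covers both parse errors and schema violations
        rejected.append(payload)

valid, invalid = lake.read()
print(len(warehouse.rows), len(rejected), len(valid), len(invalid))
```

Both stores end up with the same clean record, but the lake also retains the two problematic payloads, so the quality decision is deferred to whoever reads the data.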

Smaller companies often lack resources to maintain both a warehouse and a lake. An analytics lake combines their strengths into one platform, lowering costs and simplifying operations while still supporting growth.