Data Integration: Definition, Types, and Use-Cases

Written by Sandra Suszterova |

What is data integration?

Data integration merges structured and unstructured data from multiple sources into a single, consistent dataset. The process typically includes extracting the data, transforming it into an easy-to-use format, and loading it into a unified system. The purpose is to provide one view of the data that simplifies access and analysis, keeps the data up to date, and supports informed decision-making across the organization.

Data integration solution

Data integration connects diverse data sources and merges them into a unified system to support decision-making. Data migration, by contrast, moves data from one location to another, typically to improve performance or security.

Data integration benefits

Why is data integration important? Let's take a deeper look at data integration benefits:

- Enhances collaboration: Provides access to essential and newly generated data, streamlining business processes and reducing manual tasks.

- Saves time: Automates data preparation and analysis, eliminating hours of manual data gathering.

- Improves data quality: Applies cleansing steps such as profiling and validation, providing reliable data for confident decision-making and simplifying quality control.

- Boosts data security: Consolidates data in one location, enhancing protection with access controls, encryption, and authentication through modern integration software.

- Supports flexibility: Allows organizations to use a variety of tools at different stages of the integration process, promoting openness and adaptability in their data management systems.
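As a rough illustration of the profiling and validation mentioned under data quality, the sketch below counts missing values and duplicate keys in a small set of records. The `profile` function, the field names, and the sample customers are all hypothetical and exist only for this example; real integration tools offer far richer checks.

```python
from collections import Counter


def profile(rows, key, required):
    """Return simple quality metrics for a list of dict records."""
    # Count records where a required field is absent or empty.
    missing = {
        field: sum(1 for row in rows if row.get(field) in (None, ""))
        for field in required
    }
    # Find key values that appear more than once.
    key_counts = Counter(row.get(key) for row in rows)
    duplicate_keys = [value for value, n in key_counts.items() if n > 1]
    return {
        "row_count": len(rows),
        "missing": missing,
        "duplicate_keys": duplicate_keys,
    }


# Hypothetical sample data: one empty email and one repeated id.
customers = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "bo@example.com"},
]

report = profile(customers, key="id", required=["id", "email"])
print(report)
```

A report like this can be used as a gate: a pipeline might refuse to load a batch when duplicates or missing values exceed an agreed threshold.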

Types of data integration

There are multiple data integration techniques available that can be used to create a unified system.

ETL

ETL (Extract, Transform, Load) is a widely used data pipeline process that converts raw data into a unified dataset for business purposes. The process begins by extracting data from multiple sources such as databases, applications, and files. Then, data is transformed through various cleansing operations (selecting specific columns, translating values, joining, sorting, and ordering) in the staging area. Finally, this data is loaded into a data warehouse.
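The three ETL stages can be sketched in a few lines of Python. This is a minimal illustration only, assuming an inline CSV string as the source and an in-memory SQLite database standing in for the data warehouse; the table name, column names, and the `extract`, `transform`, and `load` functions are made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw source data; the third row has no amount.
RAW_CSV = """order_id,country,amount
1,us,10.50
2,DE,20.00
3,us,
"""


def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop incomplete rows, normalize country codes, cast types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip records with a missing amount
        cleaned.append(
            (int(row["order_id"]), row["country"].upper(), float(row["amount"]))
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)

totals = conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall()
print(totals)
```

Note that the cleansing happens before anything reaches the target table, which is the defining trait of ETL.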

ELT

ELT (Extract, Load, Transform) is a data pipeline that, unlike ETL, does not use a staging area. Data is loaded directly into a cloud-based system and transformed there. This technique tends to suit large data sets that need quick processing, and it is a better fit for data lakes. For extraction, you can integrate with Meltano, and for transformation, you can use dbt.
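To contrast with ETL, here is a minimal ELT sketch: the raw records are loaded unchanged, and the transformation runs afterwards as SQL inside the target system. An in-memory SQLite database stands in for a cloud warehouse, and the table names and sample events are assumptions made for the example. In practice, tools such as Meltano and dbt would handle these steps.

```python
import sqlite3

# Hypothetical raw events as they arrive from a source system.
raw_events = [
    ("2024-01-01", "signup", "ana"),
    ("2024-01-01", "signup", "bo"),
    ("2024-01-02", "purchase", "ana"),
]

warehouse = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse

# Load: store the raw data as-is, with no cleansing beforehand.
warehouse.execute(
    "CREATE TABLE raw_events (event_date TEXT, event_type TEXT, user_name TEXT)"
)
warehouse.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", raw_events)

# Transform: reshape the data inside the warehouse using SQL.
warehouse.execute(
    """
    CREATE TABLE daily_event_counts AS
    SELECT event_date, event_type, COUNT(*) AS events
    FROM raw_events
    GROUP BY event_date, event_type
    """
)

daily_counts = warehouse.execute(
    "SELECT event_date, event_type, events FROM daily_event_counts "
    "ORDER BY event_date, event_type"
).fetchall()
print(daily_counts)
```

Because the raw table is kept, new transformations can be added later without extracting the data again.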

Data streaming

Data streaming technology allows data to be processed in real time as it flows continuously from one source to another. This enables immediate analysis and decision-making without waiting for all data to be collected first.
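The idea of processing data as it arrives, rather than after everything has been collected, can be illustrated with Python generators. This is only a sketch: the `sensor_readings` generator is a hypothetical stand-in for a real stream such as a message queue, and the readings are invented.

```python
def sensor_readings():
    """Hypothetical event source; a real stream would never 'finish'."""
    for value in [21.0, 22.5, 30.0, 19.5]:
        yield {"sensor": "s1", "temperature": value}


def running_average(stream):
    """Emit an updated average after every event, without buffering the stream."""
    total = 0.0
    for count, event in enumerate(stream, start=1):
        total += event["temperature"]
        yield total / count


# Each result is available as soon as its event has been seen.
averages = list(running_average(sensor_readings()))
print(averages)
```

Only the running total and the count are kept in memory, so the same pattern works for streams of any length.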

Application integration

Application integration connects different software applications within or across companies, enabling seamless data synchronization and functionality across disparate systems.

An example of application integration is integrating GoodData analytics with Slack, allowing data access and analysis through conversational interfaces. This process uses Python SDKs to enable ChatGPT to handle data queries, demonstrating its adaptability to various SQL dialects and enhancing user interaction in business intelligence.

API data integration

API data integration can be considered a subset of application integration. While data integration generally focuses on combining data from different sources into a single, coherent dataset, API integration explicitly facilitates this process by enabling systems to communicate and share data directly through APIs.

For instance, in a business environment, APIs can help integrate customer data from a CRM system with sales data from an e-commerce platform, allowing for more comprehensive analytics and better business insights.
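The CRM and e-commerce scenario above could look roughly like the following. No real API is called: the two JSON strings are hypothetical responses, and the field names (`customer_id`, `name`, `total`) and the `combine` function are assumptions chosen for the example.

```python
import json

# Hypothetical JSON payloads, as two separate APIs might return them.
crm_response = json.dumps(
    [{"customer_id": 1, "name": "Ana"}, {"customer_id": 2, "name": "Bo"}]
)
shop_response = json.dumps(
    [
        {"customer_id": 1, "total": 40.0},
        {"customer_id": 1, "total": 15.0},
        {"customer_id": 3, "total": 99.0},  # no matching CRM customer
    ]
)


def combine(crm_json, shop_json):
    """Join CRM customers with their order revenue on customer_id."""
    customers = {c["customer_id"]: c["name"] for c in json.loads(crm_json)}
    revenue = {customer_id: 0.0 for customer_id in customers}
    for order in json.loads(shop_json):
        customer_id = order["customer_id"]
        if customer_id in revenue:  # ignore orders from unknown customers
            revenue[customer_id] += order["total"]
    return [
        {"customer_id": cid, "name": customers[cid], "revenue": revenue[cid]}
        for cid in customers
    ]


combined = combine(crm_response, shop_response)
print(combined)
```

Here orders without a matching CRM record are dropped; a production integration would more likely log or quarantine them for review.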

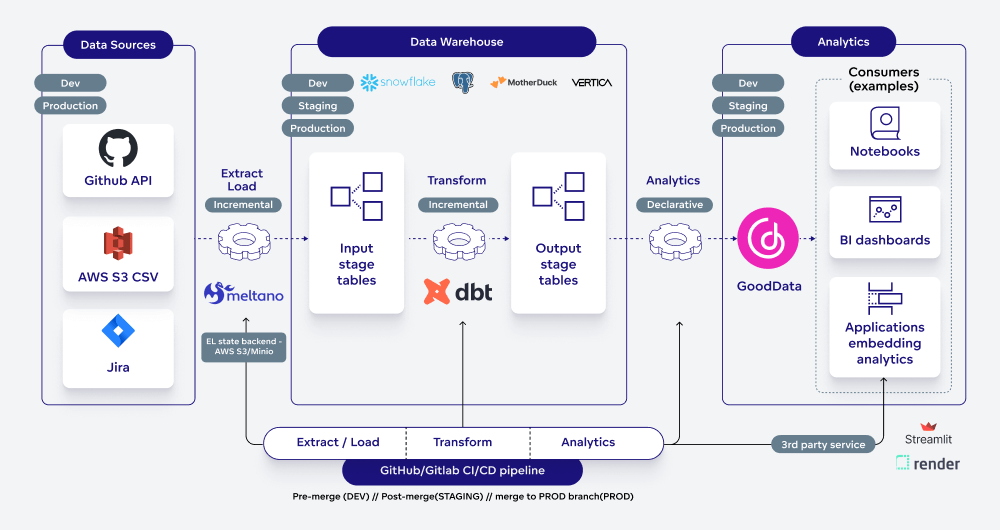

Data integration architecture

Data integration architecture is crucial in modern IT systems, facilitating seamless data flow across different systems to eliminate data silos and optimize data utility. The architecture describes the journey of data from its origin in source systems to its ultimate use in business intelligence platforms. It specifies how data is collected from diverse sources, stored in data warehouses or lakes, and transformed into actionable insights for business analysis.

The following image represents a data integration architecture blueprint outlining the process from data extraction to analytics. It details the flow from various data sources through an ETL pipeline, leading to data warehousing and subsequent analytics.

Data integration architecture example

Data integration components

Data integration components are the essential parts of a system that work together to combine data from different sources into a single, unified view. The primary data integration components are:

- Data sources represent various sources of raw data — databases, ERP, CRM or SCM systems, flat files, and external services — all of which contribute essential data for analyzing and processing.

- Automated data pipelines are essential for automating the data flow through the data integration process. They ensure data moves efficiently, reducing the likelihood of errors and maintaining data integrity.

- Data storage solutions hold the integrated data, either in a structured data warehouse for quick retrieval or in a data lake that stores raw, unstructured data. The choice affects the speed and flexibility of data analysis. Examples include Snowflake, PostgreSQL, Vertica, MotherDuck, and more.

- Data transformation converts raw data into a format usable for analytics and reporting, often with tools like dbt.

- Analytics and Business Intelligence platforms consume the transformed data for analysis and visualization. Data becomes a valuable resource at this point, offering insights that inform and drive business decisions.

Data integration tools

Data integration tools of various vendors facilitate the flow of data from source to analytics platforms. These tools support processes such as ETL/ELT pipelines and data transformation, offering businesses a comprehensive range of data integration software to accommodate their specific needs.

Extract and load

Various providers offer specialized tools to manage data integration within CI/CD pipelines, which keeps data integration workflows reliable and efficient, minimizes errors, and enables concurrent operations without conflict. For example, Meltano is an open-source tool that simplifies the data lifecycle by automating the extraction and loading stages, managing workflow orchestration and ELT processes for efficient data transfer to the data warehouse. Another example of an ETL data integration tool is Coupler.io.

Transform

In the data warehouse staging area, dbt can handle the transformation phase, using SQL queries to reshape data, which is then scheduled for execution and stored in output tables. Integration with dbt can vary, from complete use of dbt Cloud to adapting BI tools to work with dbt's transformed models.

Store

Another tool that enhances data integration solutions is the data warehouse, which can be integrated into a data ecosystem for storing, managing, and analyzing large amounts of data. Examples may include:

- Snowflake integration: Snowflake, a cloud-based platform, streamlines the integration of structured and semi-structured data, automating data pipelines and enhancing collaboration.

- AWS Redshift integration: Redshift integrates seamlessly within the AWS ecosystem, facilitating agile data management and analytics through direct SQL connections and a variety of BI tools.

- ClickHouse integration: ClickHouse excels in OLAP with its open-source, column-oriented design, supporting real-time queries, bulk imports, streaming, and API integration.

- MotherDuck integration: MotherDuck enhances DuckDB with cloud capabilities, offering scalable data management, a user-friendly interface, and support for hybrid queries and transformations.

Analyze

In the analytics phase, platforms like GoodData are key in performing declarative analytics on processed data. GoodData enhances data visualizations and analytics experiences by incorporating real-time analytics, AI, and machine learning, enabling dynamic and insightful analysis across data sets.

Data warehousing solutions to connect to GoodData

Data integration use cases

Data integration is essential for organizations across various industries to consolidate data from multiple sources, streamline operations, enhance decision-making, and improve customer experiences.

Data integration has transformative impacts across various sectors, each adapting the technology to suit specific needs. For instance, in e-commerce, data integration consolidates customer, inventory, and supplier data to enhance online shopping experiences, streamline fulfilment processes, and improve inventory management. Similarly, in marketing, it aggregates data from diverse sources like social media, CRM systems, and market research to tailor marketing strategies and measure their effectiveness. In healthcare, integrating clinical, laboratory, and insurance data improves patient care and operational efficiency.

These examples show how crucial data integration is for maintaining a unified, accurate view of business operations, especially in the B2B market. It supports critical data exchanges between partners, ensuring smooth and consistent operations in supply chains and sales, which is key for staying competitive in today's data-driven environment.

Data integration challenges

Even with numerous data integration solutions and tools tailored for various industries, a few data integration challenges remain:

- Data security involves securing sensitive information from unauthorized access or breaches while transferring between systems.

- Data compliance requires adherence to various regulations, such as GDPR or HIPAA, which dictate how data should be handled and protected.

- Scalability issues may arise as data volume increases, with the infrastructure's capacity to handle large-scale data without performance degradation becoming a challenge.

- Diverse data sources can add complexities due to varying formats, structures, and standards across systems, making uniform data processing difficult.
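The last challenge, diverse source formats, is commonly handled by mapping every source to one shared schema before further processing. The sketch below assumes two hypothetical sources, a CRM export and a shop export, with different field names and date formats; the record layouts and the `from_crm` and `from_shop` mapper functions are invented for the example.

```python
from datetime import datetime


def from_crm(record):
    """Map a hypothetical CRM record (DD/MM/YYYY dates) to the shared schema."""
    signup = datetime.strptime(record["Created"], "%d/%m/%Y").date()
    return {
        "email": record["Email"].strip().lower(),
        "signup_date": signup.isoformat(),
    }


def from_shop(record):
    """Map a hypothetical shop record (ISO 8601 timestamps) to the shared schema."""
    return {
        "email": record["email_address"].strip().lower(),
        "signup_date": record["registered_at"][:10],  # keep the date part only
    }


crm_rows = [{"Email": " Ana@Example.com ", "Created": "05/01/2024"}]
shop_rows = [{"email_address": "bo@example.com", "registered_at": "2024-02-10T08:30:00Z"}]

# After mapping, every record has the same fields and formats.
unified = [from_crm(r) for r in crm_rows] + [from_shop(r) for r in shop_rows]
print(unified)
```

Keeping one small mapper per source means a new source can be added without touching the downstream processing.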

Data integration best practices

To effectively address data integration challenges, adopt best practices that combine traditional methods with emerging trends like cloud-based solutions, AI, scalability, and more:

- A clear data governance framework is essential in data integration, providing guidelines and protocols to manage data effectively and ensure it meets compliance and quality standards.

- Selecting the proper integration tools and platforms is crucial for aligning with the organization's specific needs and technology stack, enhancing efficiency and compatibility in data integration processes.

- Cloud-based data integration solutions offer scalable, flexible, and cost-effective options for managing data across various cloud environments, facilitating more accessible and dynamic data handling.

- Ensuring scalability and flexibility in integration architecture allows organizations to adapt to changing data volumes and requirements, preventing bottlenecks and supporting growth.

- The rise of real-time data integration enables organizations to process and analyze data instantly, providing timely insights and enabling faster decision-making.

- Integrating AI and machine learning into data integration processes can significantly enhance the automation of data handling, improve data quality, and uncover deeper insights through advanced analytics.

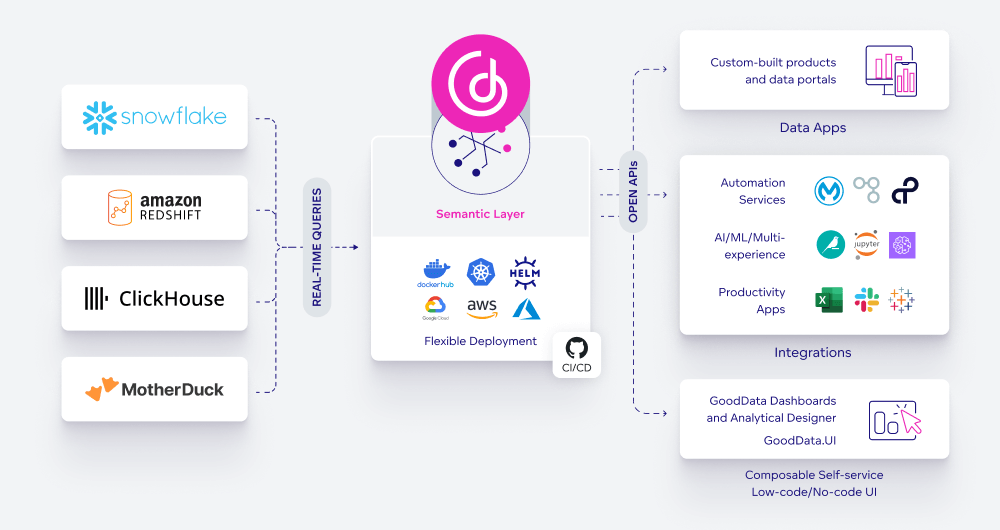

How to integrate with GoodData?

GoodData's architecture eases integration and allows businesses to use their existing data tools and infrastructure, fostering a more flexible and adaptable analytics environment.

GoodData's flexible architecture offers significant advantages over platforms like Qlik, which relies on its specific Cloud Data Integration tool, because GoodData works with a range of ETL tools rather than a single one. This openness fosters a broader range of data strategies and richer insights. For instance, users can employ Meltano for data extraction and loading and dbt for transformation. GoodData further improves these processes through integration blueprints such as Data Pipelines as Code, simplifying connectivity to the platform.

GoodData's flexibility enhances analytics integration across applications. It supports open APIs and Headless BI, allowing the use of its semantic layers and metrics stores in various tools, complemented by seamless notebook integrations.

Next steps with GoodData

Curious about unlocking the potential of data integration with GoodData? Dive into our blueprints to discover how GoodData can transform your data landscape! Get a free GoodData trial for some first-hand experience, or, if you want to ask questions and see how GoodData fits into your solution, request a demo for a platform walkthrough.
